NVIDIA has released NeMo Guardrails, open-source software designed to help developers build safe and reliable text-generating AI applications that stay on topic and secure. As industries increasingly adopt large language models (LLMs) to answer questions, summarize documents, and even write software or accelerate drug design, safety measures for AI-powered applications have become critical.

NeMo Guardrails aims to address this industry-wide concern and is designed to work with all LLMs, including OpenAI's ChatGPT. The software lets developers align LLM-powered applications with their company's domain expertise while keeping those applications safe and secure.

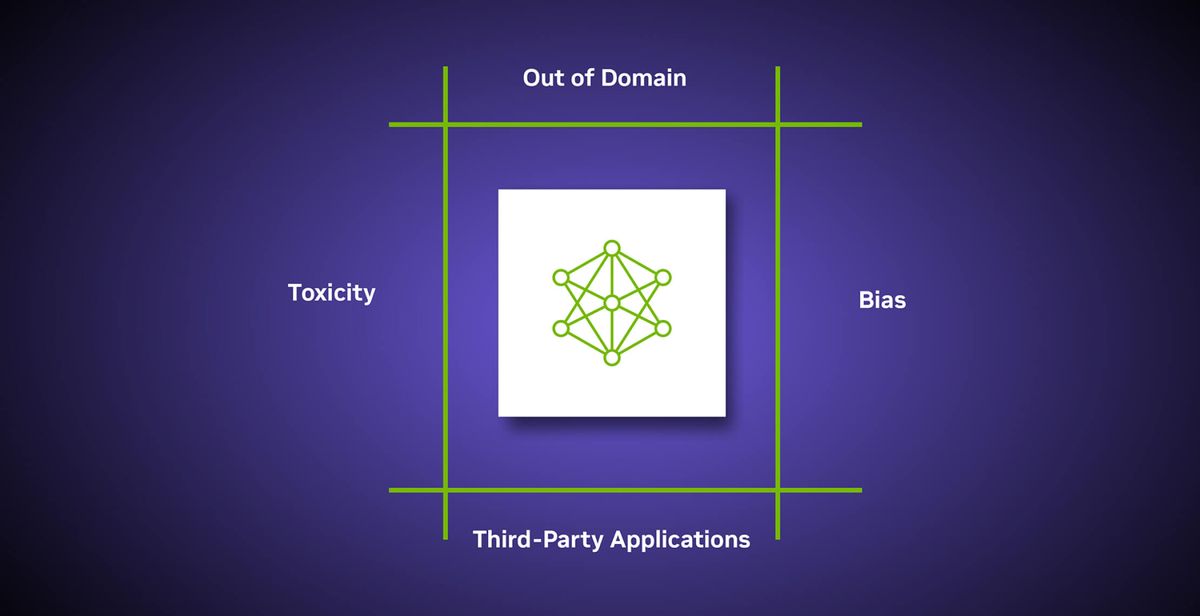

The software lets developers set up three types of guardrails:

- Topical guardrails prevent apps from veering into unrelated areas, for example keeping a customer service assistant focused on its intended domain.

- Safety guardrails help ensure apps respond with accurate and appropriate information, filtering out unwanted language and enforcing that references point only to credible sources.

- Security guardrails restrict apps to connecting only with known, safe external third-party applications.
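To make the idea of topical and security guardrails more concrete, here is a minimal toy sketch in plain Python. It is not the NeMo Guardrails API: the class `ToyGuardrails`, its methods, and the keyword-matching approach are all hypothetical, chosen only to show the general pattern of checking a request before the model is called. NeMo Guardrails itself uses its own configuration and dialogue-modeling approach rather than simple keyword lists.

```python
# Toy illustration of topical and security guardrails.
# NOTE: hypothetical names only; this is NOT the NeMo Guardrails API.
from dataclasses import dataclass, field
from typing import Callable, Set


@dataclass
class ToyGuardrails:
    allowed_topics: Set[str]                      # keywords the assistant may discuss
    trusted_apps: Set[str] = field(default_factory=set)  # allowlisted third-party apps
    refusal: str = "Sorry, I can only help with questions about your orders."

    def check_topic(self, user_message: str) -> bool:
        """Topical rail: pass only if the message mentions an allowed keyword."""
        words = {w.strip(".,?!").lower() for w in user_message.split()}
        return bool(words & self.allowed_topics)

    def check_app(self, app_name: str) -> bool:
        """Security rail: allow calls only to explicitly trusted apps."""
        return app_name in self.trusted_apps

    def respond(self, user_message: str, answer_fn: Callable[[str], str]) -> str:
        """Call the (stand-in) model only when the topical rail passes."""
        if not self.check_topic(user_message):
            return self.refusal
        return answer_fn(user_message)


if __name__ == "__main__":
    rails = ToyGuardrails(allowed_topics={"order", "refund", "shipping"},
                          trusted_apps={"crm"})
    fake_llm = lambda msg: "Your order ships tomorrow."  # stand-in for a real LLM call
    print(rails.respond("Where is my order?", fake_llm))
    print(rails.respond("Who should I vote for?", fake_llm))
    print(rails.check_app("crm"), rails.check_app("unknown-plugin"))
```

An on-topic question reaches the stand-in model, an off-topic one gets the refusal, and only the allowlisted app passes the security check. Real guardrail systems typically replace keyword matching with more robust intent classification.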

Developers can use NeMo Guardrails without being machine learning experts or data scientists, and new rules can be created with just a few lines of code. NeMo Guardrails is open source and works with the tools enterprise app developers already use, such as LangChain, an open-source toolkit that is rapidly gaining adoption for integrating third-party applications with LLMs.

Toolkits like LangChain provide composable, easy-to-use templates and patterns for building LLM-powered applications by gluing together LLMs, APIs, and other software packages.

Reid Robinson, Lead Product Manager of AI at Zapier, praised NVIDIA's approach: "Safety, security, and trust are the cornerstones of responsible AI development, and we're excited about NVIDIA's proactive approach to embed these guardrails into AI systems."

NVIDIA is incorporating NeMo Guardrails into the NVIDIA NeMo framework, which provides everything needed to train and tune language models using a company's proprietary data. It is also part of NVIDIA AI Foundations, a family of cloud services for businesses that want to create and run custom generative AI models based on their own datasets and domain knowledge.

By open-sourcing NeMo Guardrails, NVIDIA aims to contribute to ongoing AI safety research in the developer community. By collaborating on guardrails, companies can keep their AI services in line with safety, privacy, and security requirements, ensuring these engines of innovation stay on track.