NVIDIA announced the launch of its latest data center GPU, the HGX H200, during its keynote at the annual supercomputing conference SC23. Built on the company's advanced Hopper architecture, the H200 offers significant performance improvements that will drive the next wave of generative AI and high-performance computing.

The standout feature of the NVIDIA H200 is its HBM3e memory. It is the first GPU to offer HBM3e, which is both faster and higher in capacity than earlier HBM generations. The H200 provides 141GB of memory with 4.8 terabytes per second of bandwidth. That is nearly double the capacity and 2.4 times the bandwidth of the NVIDIA A100, positioning the H200 as a significant step forward for complex AI and HPC workloads.
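The comparison with the A100 can be checked with a few lines of arithmetic. The sketch below is illustrative only: the H200 figures come from the announcement, while the A100 figures (80GB of memory and roughly 2.0 terabytes per second of bandwidth, for the 80GB variant) are assumptions that the article does not state.

```python
# H200 figures as quoted in the announcement.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8

# A100 figures are ASSUMPTIONS (80GB variant, bandwidth rounded to 2.0 TB/s);
# they are not given in the article.
A100_MEMORY_GB = 80
A100_BANDWIDTH_TBPS = 2.0


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


capacity_ratio = ratio(H200_MEMORY_GB, A100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBPS, A100_BANDWIDTH_TBPS)

print(f"Memory capacity: {capacity_ratio:.2f}x the A100")
print(f"Memory bandwidth: {bandwidth_ratio:.2f}x the A100")
```

Under these assumed A100 numbers, the capacity ratio comes out at about 1.76 ("nearly double") and the bandwidth ratio at 2.4, which is consistent with the figures quoted above.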

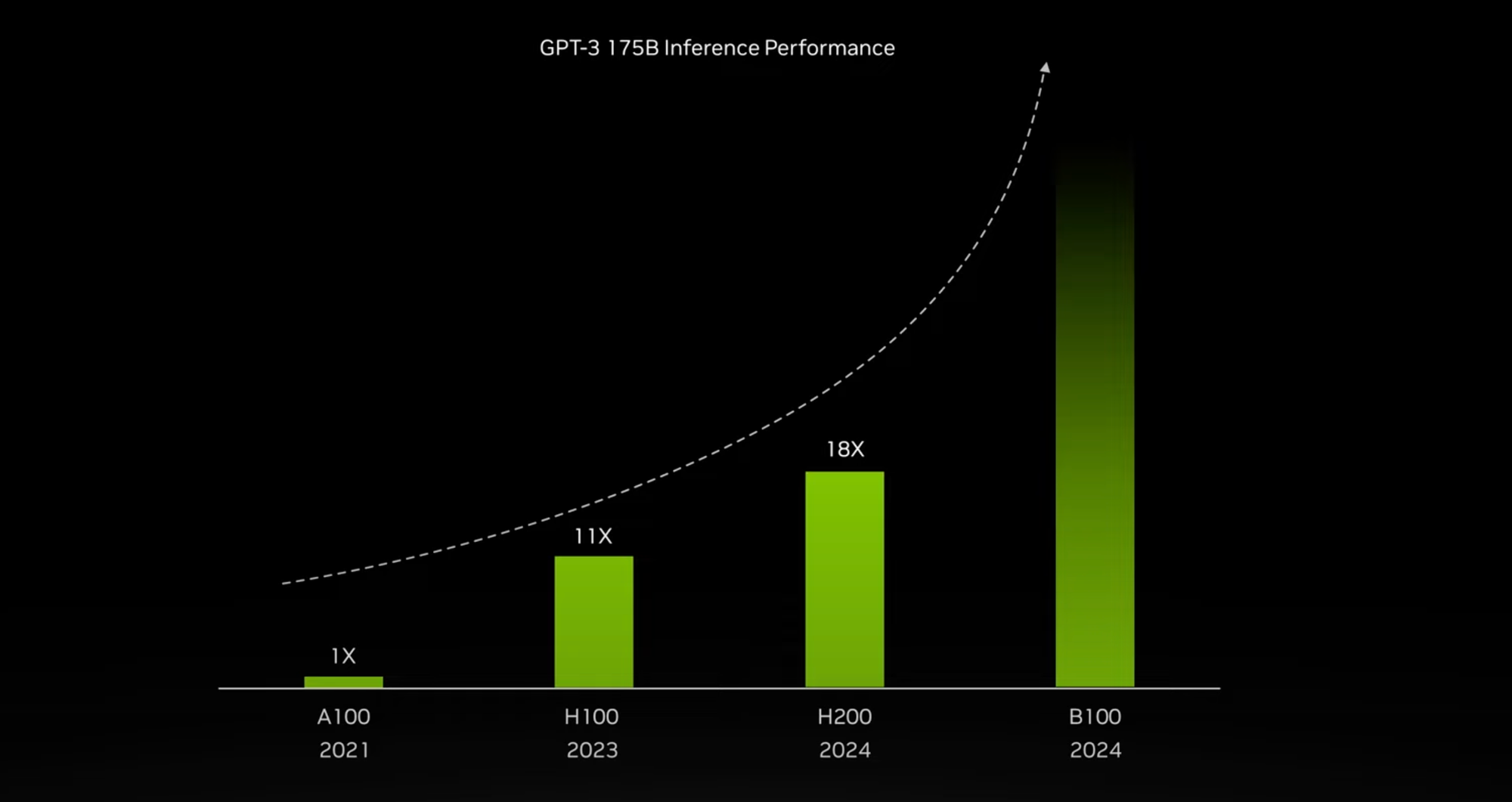

NVIDIA claims the H200 will deliver nearly double the inference speed of the H100 GPU on large language models. The company expects further performance gains from ongoing software enhancements to its unified AI platform.

Ian Buck, NVIDIA's Vice President of Hyperscale and HPC, highlighted the impact of the H200's advanced memory. "The integration of faster and more extensive HBM memory serves to accelerate performance across computationally demanding tasks including generative AI models and high-performance computing applications while optimizing GPU utilization and efficiency," Buck stated in a video presentation.

Demand for NVIDIA's AI chips has been soaring, as its GPUs are integral to efficiently processing the massive quantities of data needed for generative image tools and multimodal large language models. The introduction of the H200 is timely: the industry is still facing high demand for H100 chips, which underlines NVIDIA's continued leadership in this domain.

Buck's address also covered the broader implications of NVIDIA's technological advancements. He discussed the role of AI in transforming HPC, the sustainability of accelerated computing, and NVIDIA's continued innovation in software and hardware solutions that scale across entire data centers. He underscored the significance of AI as a tool for accelerating computing, one that can provide an additional order of magnitude of acceleration in scientific domains.

NVIDIA's integrated approach, which combines CPUs, GPUs, and DPUs into hardware platforms from edge to cloud together with its Mellanox networking, forms the backbone of the accelerated data center. This ecosystem connects computer makers and cloud service providers to nearly every domain of science and industry.

However, amid this technological leap, a key question looms: the availability of the new chips. Given the high demand, existing supply constraints, and trade restrictions affecting the H100, there is speculation about how accessible the H200 will be. NVIDIA says it is working closely with global system manufacturers and cloud service providers, including AWS, Google Cloud, Microsoft Azure, and Oracle Cloud, to deploy H200-based instances in 2024.

The introduction of the H200 comes at a time when AI companies are still eagerly seeking NVIDIA's H100 chips. NVIDIA's GPUs are renowned for their efficiency in processing the vast quantities of data required for training and operating generative image tools and large language models. The demand for these chips is so high that companies have been using them as collateral for loans, and their possession has become a topic of intense interest in Silicon Valley.

The HGX H200's introduction is not just a testament to NVIDIA's current dominance in AI infrastructure; it also underscores the company's focus on accelerating high-performance computing. As the industry anticipates the arrival of the HGX H200, its potential impact on the AI and HPC landscapes is substantial. With NVIDIA's commitment to tripling its production of H100s in 2024, the availability of advanced GPUs like the H200 could mark a new era in AI and HPC development.