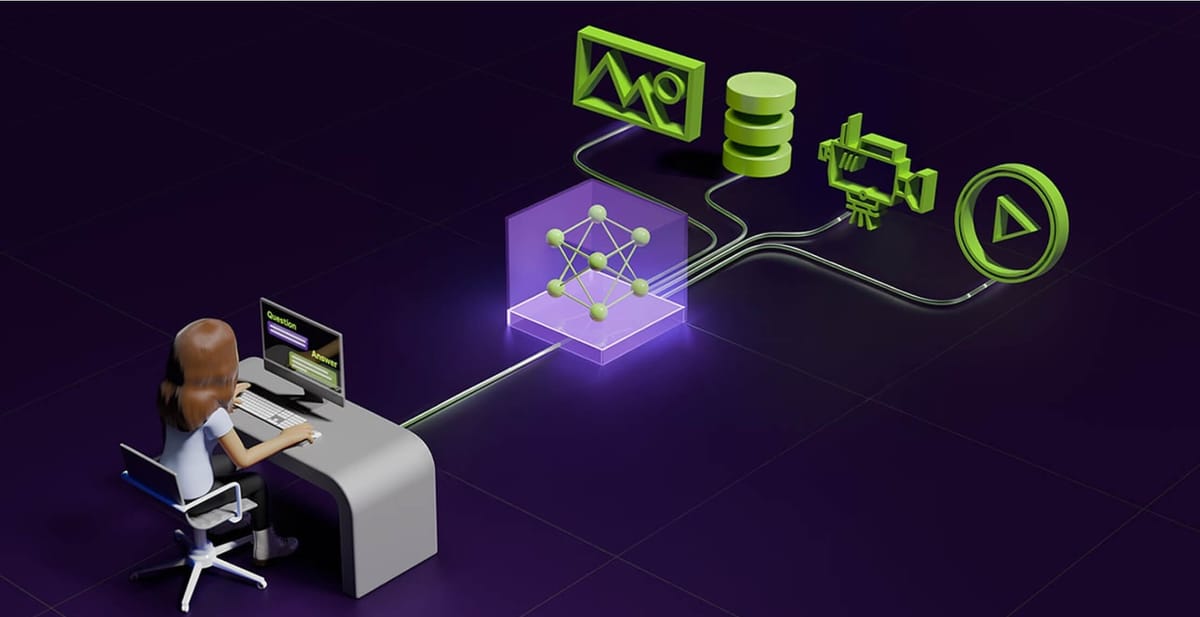

NVIDIA has released a new AI Blueprint for Video Search and Summarization. The blueprint helps developers build visual AI agents that combine vision language models (VLMs), large language models (LLMs), and NVIDIA NIM microservices. These agents can ingest large volumes of live or archived video and extract insights from it. They can deliver summaries, answer user questions through interactive Q&A, and trigger alerts when specific events occur.

If you aren't already familiar with them, NVIDIA AI Blueprints are customizable reference workflows that combine NVIDIA computer vision and generative AI technologies. They are powered by NVIDIA NIM, a set of microservices that includes industry-standard APIs, domain-specific code, optimized inference engines, and an enterprise runtime.

Imagine security systems that can summarize hours of surveillance footage in minutes, or agents that can respond in real time to traffic incidents, all driven by natural language prompts rather than complex code. For example, a warehouse manager could ask the system to identify safety violations, or city officials could request updates on traffic conditions from surveillance feeds.

By using VLMs (such as NVIDIA VILA) and LLMs (such as Meta’s Llama 3.1 405B), the agents can understand and process large amounts of visual data. Users can ask natural language questions about video content, generate summaries, and set alerts for specific scenarios. Because these visual agents work on both live streams and video archives, they can surface actionable insights across many kinds of environments.

For instance, city traffic managers in Palermo, Italy, are already working with NVIDIA's partners to deploy visual AI agents that monitor and improve street activity. With insights drawn from street video, local authorities can make data-driven decisions that improve safety and operational efficiency.

The blueprint also uses retrieval-augmented generation (RAG) to aggregate insights from processed video chunks. With these, it generates detailed summaries and builds a knowledge graph that visualizes relationships between detected events and objects. This richer understanding lets visual agents analyze long-form video, a significant step beyond earlier systems that could only detect predefined objects.
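To make the chunk-then-aggregate idea more concrete, here is a minimal Python sketch. It is not the blueprint's code or API. `make_chunks`, `summarize_video`, and the `caption_fn`/`aggregate_fn` callables are hypothetical names, standing in for a VLM that captions each chunk and an LLM that combines the captions. The aggregation step here is a plain concatenate-then-summarize pass, not the retrieval and knowledge-graph stages described above.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Chunk:
    start_s: float  # chunk start time, in seconds
    end_s: float    # chunk end time, in seconds


def make_chunks(duration_s: float, chunk_s: float, overlap_s: float = 0.0) -> List[Chunk]:
    """Split a video timeline into fixed-length, optionally overlapping chunks."""
    if chunk_s <= 0 or not (0 <= overlap_s < chunk_s):
        raise ValueError("need chunk_s > 0 and 0 <= overlap_s < chunk_s")
    step = chunk_s - overlap_s
    chunks: List[Chunk] = []
    i = 0
    # Compute each start from an integer index to avoid accumulating float error.
    while i * step < duration_s:
        start = i * step
        chunks.append(Chunk(start, min(start + chunk_s, duration_s)))
        i += 1
    return chunks


def summarize_video(
    duration_s: float,
    chunk_s: float,
    caption_fn: Callable[[Chunk], str],         # hypothetical stand-in for a VLM call
    aggregate_fn: Callable[[List[str]], str],   # hypothetical stand-in for an LLM call
    overlap_s: float = 0.0,
) -> str:
    """Caption every chunk, tag each caption with its time range, then aggregate."""
    captions = [
        f"[{c.start_s:.0f}-{c.end_s:.0f}s] {caption_fn(c)}"
        for c in make_chunks(duration_s, chunk_s, overlap_s)
    ]
    return aggregate_fn(captions)


if __name__ == "__main__":
    # Toy stand-ins: a fixed caption and a join in place of real model calls.
    print(summarize_video(60, 30, lambda c: "a forklift crosses the aisle", " | ".join))
```

Tagging each caption with its time range is a design choice: it lets the aggregation step, and any later Q&A, point back to where in the footage an event occurred.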

The practical applications span numerous industries. In infrastructure maintenance, workers can use the system to analyze aerial footage for deteriorating roads or bridges. Sports broadcasters could automatically generate game highlights, and security teams could quickly search through hours of footage to find specific incidents.

The blueprint can be deployed on NVIDIA GPUs at the edge, on premises, or in the cloud, so enterprises can choose where it runs. NVIDIA has also partnered with global system integrators such as Dell Technologies and Lenovo.