OpenAI has established a Safety and Security Committee to evaluate its AI practices and advise its board on critical decisions, the company said in a blog post on Tuesday. The move comes amid heightened scrutiny of the company's commitment to AI safety, particularly after the recent departures of key staff who worked on AI safety and "superalignment."

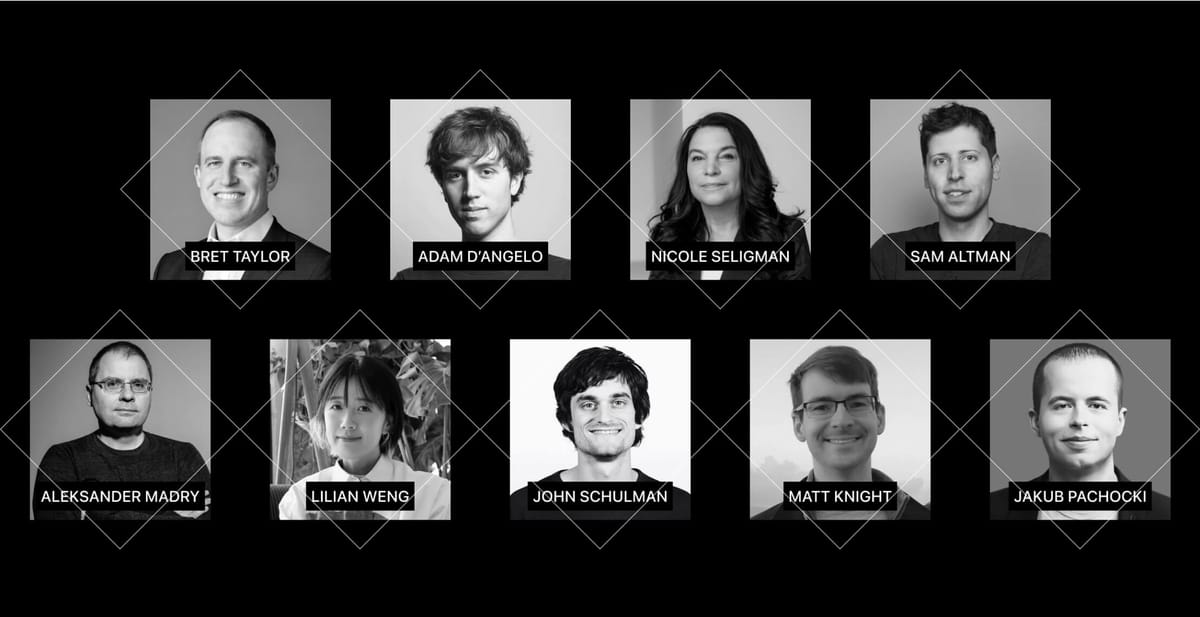

The new committee will be led by board chair Bret Taylor and will include board members Adam D'Angelo and Nicole Seligman, as well as CEO Sam Altman. It will also include several OpenAI technical and policy experts: Head of Preparedness Aleksander Mądry, Head of Safety Systems Lilian Weng, co-founder and Head of Alignment Science John Schulman, Security Chief Matt Knight, and Chief Scientist Jakub Pachocki.

OpenAI's rapid recent advances in AI have raised concerns about how the company manages the technology's potential dangers. Those worries intensified last fall, when CEO Sam Altman briefly faced a boardroom coup after clashing with co-founder and chief scientist Ilya Sutskever over the pace of AI product development and the steps needed to limit harms.

> Yesterday was my last day as head of alignment, superalignment lead, and executive @OpenAI.
>
> Jan Leike (@janleike) on X, May 17, 2024

The concerns resurfaced this month following the departures of Sutskever and his key deputy Jan Leike, who ran OpenAI's "superalignment" team. Leike, who resigned, later wrote on X that his division had been "struggling" for computing resources within the company, a criticism echoed by other departing employees. Leike has since joined Anthropic to work on "scalable oversight, weak-to-strong generalization, and automated alignment research."

In the wake of these events, OpenAI has said that the work previously done by the "superalignment" team will continue under its research unit and John Schulman, a co-founder now serving as Head of Alignment Science. The company also announced that it has recently begun training its next frontier model, and that it expects the resulting systems to bring it to the next level of capabilities on its path to artificial general intelligence.

To support the work of the Safety and Security Committee, OpenAI will retain and consult with additional safety, security, and technical experts, including former cybersecurity officials Rob Joyce and John Carlin.

At the end of its initial 90-day evaluation period, the committee will share its recommendations with the full board. After the board's review, OpenAI has committed to publicly sharing an update on the recommendations it adopts, in a manner consistent with safety and security.