OpenAI has shared that it has been testing GPT-4 for content moderation on its own platform. The company claims that the new system can enable faster policy changes, make labeling more consistent, and significantly reduce the burden on human moderators.

Content moderation is essential but challenging for digital platforms. It often relies on large teams of human moderators, supported by narrowly focused machine learning models, to filter out harmful or inappropriate content. This process is often time-consuming, inconsistent, and mentally taxing for moderators.

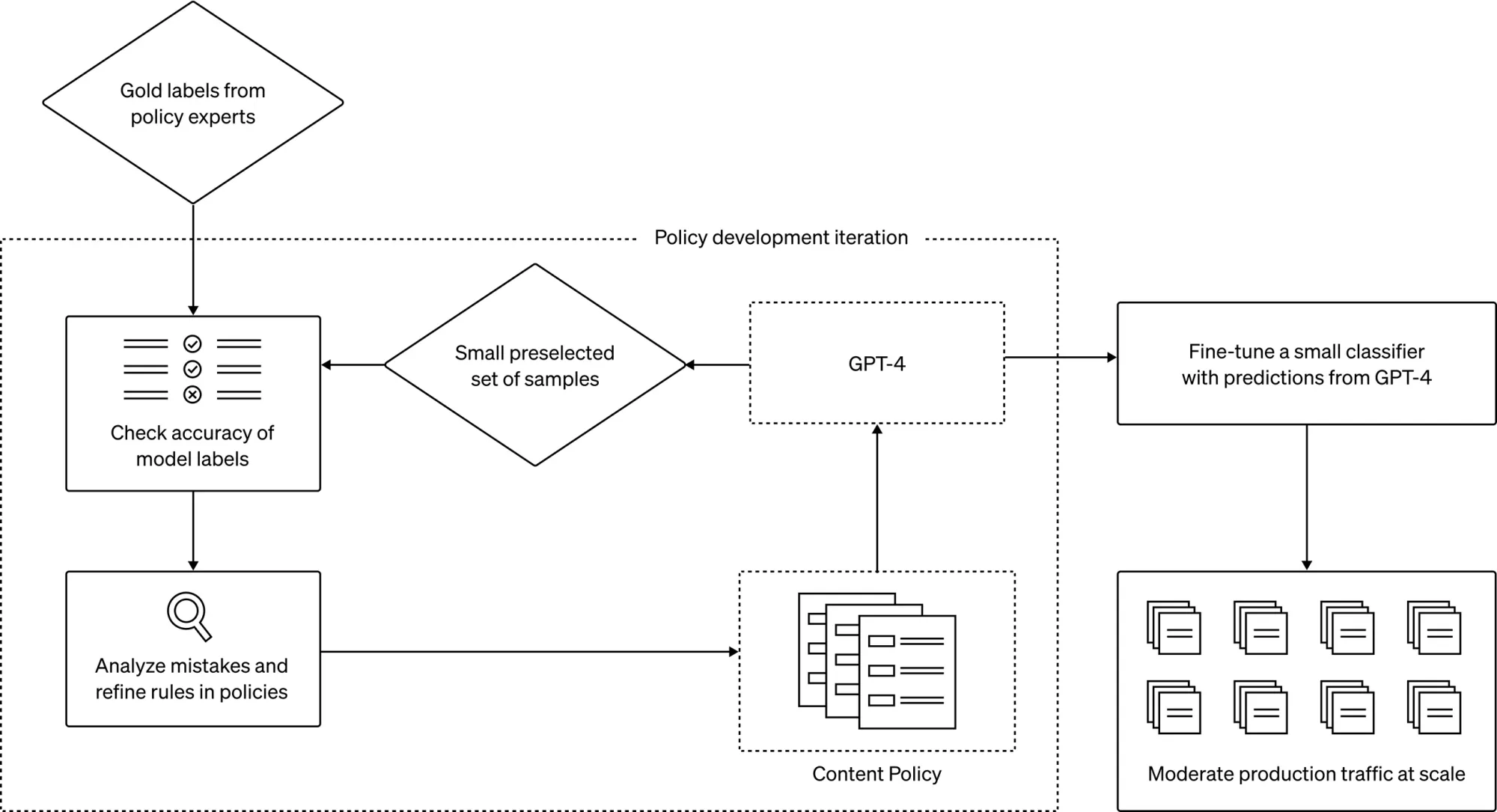

If OpenAI's recent exploration with GPT-4 proves effective, it could substantially change how moderation is done. In OpenAI's technique, once content policy guidelines are written, GPT-4 is first used to evaluate content against them. The model's decisions are then compared with human judgments on the same content: discrepancies are identified and examined, ambiguities in the policy are clarified, and the policy is refined. OpenAI claims this process, which would typically take months, can now be completed within hours.
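The comparison step at the heart of this loop can be illustrated with a minimal sketch. It assumes labels have already been collected from both a human reviewer and the model for the same items; the `LabeledItem` type, the label strings, and the data are hypothetical, no OpenAI API is called, and this is not OpenAI's implementation.

```python
from dataclasses import dataclass


@dataclass
class LabeledItem:
    item_id: str
    human_label: str  # judgment from a human reviewer
    model_label: str  # judgment from the model (placeholder values, not real GPT-4 output)


def find_discrepancies(items: list[LabeledItem]) -> list[LabeledItem]:
    """Return items where the model and the human disagree.

    These are the cases a reviewer would inspect to spot ambiguous policy wording.
    """
    return [it for it in items if it.human_label != it.model_label]


def agreement_rate(items: list[LabeledItem]) -> float:
    """Return the share of items on which the model matches the human label."""
    if not items:
        return 0.0
    matches = sum(it.human_label == it.model_label for it in items)
    return matches / len(items)


if __name__ == "__main__":
    # Hypothetical labels for four pieces of content.
    batch = [
        LabeledItem("post-1", "allowed", "allowed"),
        LabeledItem("post-2", "violates", "violates"),
        LabeledItem("post-3", "violates", "allowed"),
        LabeledItem("post-4", "allowed", "allowed"),
    ]
    print(f"agreement: {agreement_rate(batch):.0%}")  # prints "agreement: 75%"
    for it in find_discrepancies(batch):
        # prints "review post-3: human=violates, model=allowed"
        print(f"review {it.item_id}: human={it.human_label}, model={it.model_label}")
```

The agreement rate only summarizes progress; it is the list of disagreements that feeds back into clarifying the policy, after which the model would relabel the content and the comparison would be repeated.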

OpenAI highlights three key advantages of its methodology:

- More consistent labels. Content policies are continually evolving and often very detailed. People may interpret policies differently or some moderators may take longer to digest new policy changes, leading to inconsistent labels. In comparison, LLMs are sensitive to granular differences in wording and can instantly adapt to policy updates to offer a consistent content experience for users.

- Faster feedback loop. The cycle of policy updates – developing a new policy, labeling, and gathering human feedback – can often be a long and drawn-out process. GPT-4 can shorten this cycle to hours, enabling faster responses to new harms.

- Reduced mental burden. Continual exposure to harmful or offensive content can lead to emotional exhaustion and psychological stress among human moderators. Automating this type of work is beneficial for the wellbeing of those involved.

OpenAI contrasts its technique with the Constitutional AI approach used by Anthropic, which relies mainly on the model's own internalized judgment of what is safe and what is not. OpenAI says its approach instead focuses on platform-specific policy iteration and makes that iteration much faster and less effortful.

The system is not flawless. The company acknowledges that undesired biases introduced during the model's training could slip into its judgments, so constant monitoring and human validation are needed to ensure accuracy. OpenAI stressed the importance of human expertise, especially for nuanced decisions, and says it will keep humans in the loop for such cases. It also plans further improvements, including chain-of-thought reasoning and self-critique mechanisms.

OpenAI's efforts follow criticism the AI industry has faced over content moderation. Users have previously manipulated ChatGPT into generating inappropriate content, which then went viral on social platforms. The company has continued to counter these "jailbreaking" methods to preserve the platform's integrity.

The most notable controversy was a report on OpenAI's reliance on Kenyan workers to label offensive content. The work exposed them to distressing material, leading to claims of trauma.

OpenAI presents the use of GPT-4 for content moderation as a milestone in AI capability. Lilian Weng, OpenAI’s head of safety systems, expressed hope that more platforms will adopt the method, emphasizing the technology's societal benefits.

The approach is still in its infancy and may not yet match the expertise of seasoned human moderators. However, OpenAI believes that with advancements, it could redefine content moderation across various platforms, including social media and e-commerce.

The company says some customers are already using GPT-4 for content moderation but has not named any of them.

The ultimate vision is an AI system that evaluates not only text but also images and video, providing a comprehensive content moderation solution.

As AI continues to evolve, the hope is that it will become a trustworthy partner in creating safe digital environments. Yet, as with any technological advancement, it will need continuous refinement, adaptation, and oversight to ensure its efficacy and safety.