OpenAI has launched the o3-mini model, making advanced reasoning capabilities available across ChatGPT and the API. Designed to excel in STEM fields like science, math, and coding, o3-mini offers a cost-effective and efficient solution for both developers and general users.

Key Points:

- o3-mini delivers 24% faster responses than o1-mini while offering improved accuracy.

- Priced 93% lower per token than o1, o3-mini is competitive even with Chinese alternatives such as DeepSeek-R1.

- Free users can try o3-mini in ChatGPT; Pro users get unlimited access, including to the higher-intelligence o3-mini-high.

- Outperforms GPT-4o in safety and jailbreak tests, maintaining OpenAI’s commitment to secure AI deployment.

Available today, o3-mini replaces o1-mini in the ChatGPT model picker for paid plans, offering higher rate limits and enhanced performance. Free users can explore it through the new “Reason” button, marking OpenAI’s first step in bringing reasoning models to non-paying customers.

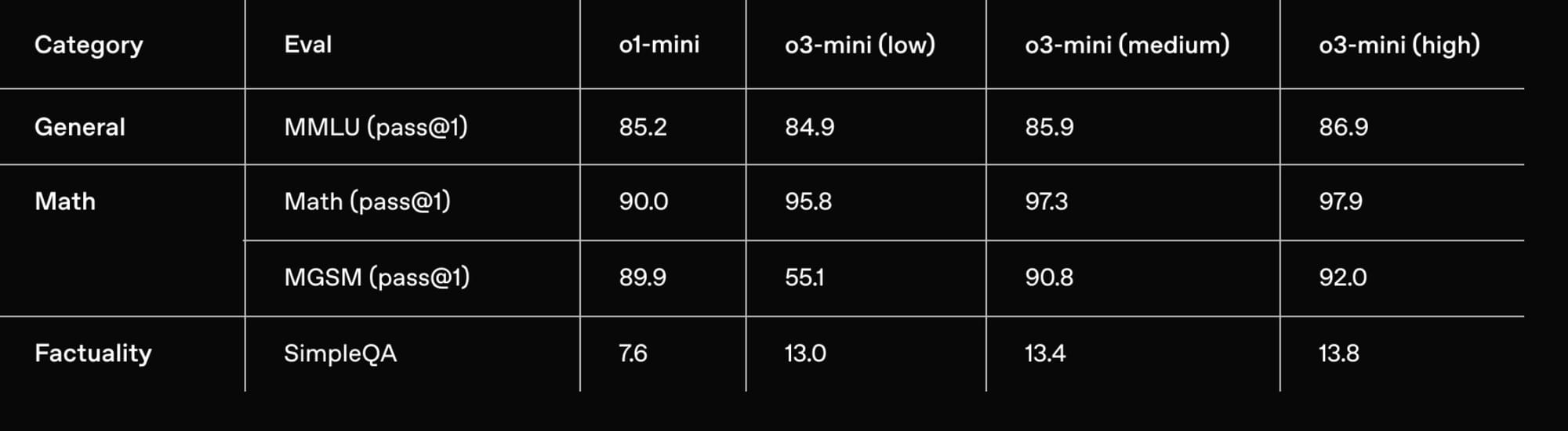

For developers, o3-mini offers full API access with support for function calling, structured outputs, and developer messages. It also exposes three reasoning effort levels (low, medium, and high), so developers can trade response speed for deeper reasoning depending on their use case.
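To make these developer features concrete, here is a minimal sketch that only assembles a request body for o3-mini; it does not call the API. It assumes the Chat Completions field names `reasoning_effort`, a `developer` message role, and a `json_schema` `response_format` for structured outputs. The function name `build_o3_mini_request` and the `math_answer` schema are hypothetical, chosen for illustration.

```python
import json

# The three effort levels described above; the field name `reasoning_effort`
# is assumed to match the Chat Completions API.
VALID_EFFORTS = ("low", "medium", "high")


def build_o3_mini_request(question: str, effort: str = "medium") -> dict:
    """Build (but do not send) a hypothetical Chat Completions body for o3-mini."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return {
        "model": "o3-mini",
        "reasoning_effort": effort,
        "messages": [
            # Developer messages carry the app's instructions to the model.
            {"role": "developer", "content": "Answer concisely and state the final number."},
            {"role": "user", "content": question},
        ],
        # Structured outputs: request JSON matching a (hypothetical) schema.
        "response_format": {
            "type": "json_schema",
            "json_schema": {
                "name": "math_answer",
                "strict": True,
                "schema": {
                    "type": "object",
                    "properties": {
                        "answer": {"type": "number"},
                        "explanation": {"type": "string"},
                    },
                    "required": ["answer", "explanation"],
                    "additionalProperties": False,
                },
            },
        },
    }


if __name__ == "__main__":
    body = build_o3_mini_request("What is 17 * 23?", effort="high")
    print(json.dumps(body, indent=2))
```

With the official `openai` Python package, a body like this can typically be passed as keyword arguments, e.g. `client.chat.completions.create(**body)`; check the current API reference before relying on the exact field names.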

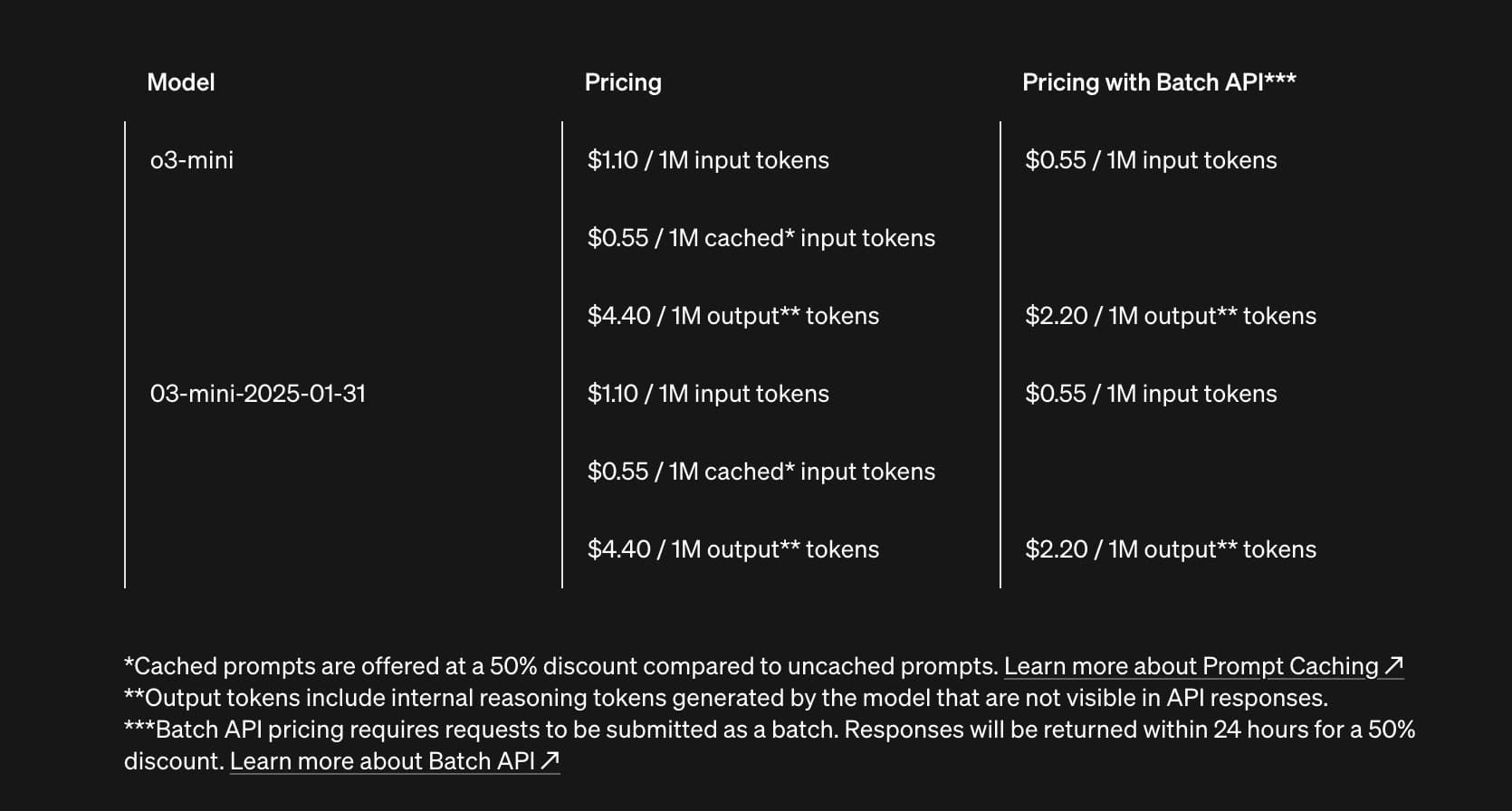

Priced at $1.10 per million input tokens (cached input tokens get a 50% discount, i.e., $0.55 per million) and $4.40 per million output tokens, o3-mini is 63% cheaper than o1-mini and 93% cheaper than o1 on a per-token basis.
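The pricing arithmetic can be checked with a short calculator. This sketch uses the o3-mini prices quoted above and treats cached tokens as a subset of input tokens billed at half price. The reference input prices for o1-mini ($3.00 per million) and o1 ($15.00 per million) are assumptions for illustration, not figures from this article, and the helper names are hypothetical.

```python
# o3-mini prices quoted in the article, in USD per million tokens.
INPUT_PER_M = 1.10
CACHED_INPUT_PER_M = INPUT_PER_M * 0.5  # 50% discount for cached input tokens
OUTPUT_PER_M = 4.40


def o3_mini_cost(input_tokens: int, output_tokens: int, cached_tokens: int = 0) -> float:
    """Estimate USD cost; cached_tokens is the cached portion of input_tokens."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    cost = (
        uncached * INPUT_PER_M
        + cached_tokens * CACHED_INPUT_PER_M
        + output_tokens * OUTPUT_PER_M
    ) / 1_000_000
    return round(cost, 6)


def percent_cheaper(new_price: float, old_price: float) -> float:
    """Percentage by which new_price undercuts old_price."""
    return (1 - new_price / old_price) * 100


if __name__ == "__main__":
    # 2M input tokens (half cached) plus 500k output tokens.
    print(o3_mini_cost(2_000_000, 500_000, cached_tokens=1_000_000))
    # Assumed reference input prices: o1-mini $3.00/M, o1 $15.00/M.
    print(f"{percent_cheaper(1.10, 3.00):.0f}% cheaper than o1-mini")
    print(f"{percent_cheaper(1.10, 15.00):.0f}% cheaper than o1")
```

For example, 2 million input tokens (half of them cached) plus 500,000 output tokens comes to about $3.85, and under the assumed reference prices the savings round to the 63% and 93% figures quoted above.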

External testers preferred o3-mini’s responses over o1-mini’s in 56% of evaluations, citing its accuracy and clarity. Because it matches or exceeds o1 on STEM benchmarks with lower latency, o3-mini is a strong option for users who prioritize technical precision and efficiency.

The Whale in the Room...

The launch comes amid intense scrutiny of AI development costs and capabilities, particularly after DeepSeek's release of R1, a model whose strong performance rivals leading U.S. systems. It is a significant accomplishment that even OpenAI CEO Sam Altman publicly praised as "clearly a great model." This rapid progress, achieved despite U.S. chip export restrictions, showcases China's growing AI capabilities.

However, the initial narrative around DeepSeek's reported $5.6 million training cost needs context: that figure covered only the final training run. New research from SemiAnalysis estimates the company's actual hardware spend at "well higher than $500M," with further costs for R&D and for the compute used in synthetic data generation and experimentation. While this doesn't diminish DeepSeek's technical achievement, it suggests the gap in development efficiency may be less dramatic than initially reported.

Security researchers have also raised concerns for potential users. NewsGuard documented that 80% of DeepSeek's responses on sensitive topics align with Chinese government positions, and cybersecurity experts note that all user data is routed to servers in China. These findings have prompted action from regulators and institutions, including an outright ban by the U.S. Navy and investigations by European privacy authorities.

In contrast, o3-mini maintains OpenAI's established security standards while remaining price-competitive with DeepSeek once caching and hosting costs are taken into account. The model's deployment reflects OpenAI's balanced approach: advancing AI accessibility without compromising on safety or capability.

Technical Capabilities

The o3-mini model shows particular strength in STEM applications. On difficult math problems such as those in the American Invitational Mathematics Examination (AIME), o3-mini with high reasoning effort outperforms both o1-mini and o1. In software engineering, it achieved the highest score of any OpenAI model released so far on SWE-bench Verified.

Search integration (in beta) is another welcome addition: the model can find up-to-date answers with links to relevant web sources, an early step in OpenAI's broader plan to bring search to all of its reasoning models.

By pairing lower costs with stronger reasoning, o3-mini reflects OpenAI's broader mission of making advanced AI tools more accessible while maintaining rigorous safety standards. As the AI landscape becomes increasingly competitive, this balanced approach to development and deployment may prove crucial for sustainable progress in the field.