OpenAI has released a report detailing its efforts to detect and disrupt deceptive influence operations that were using its AI models. Over the past three months, the company has disrupted five covert campaigns that tried to manipulate public opinion or sway political outcomes without disclosing who was behind them or what they wanted.

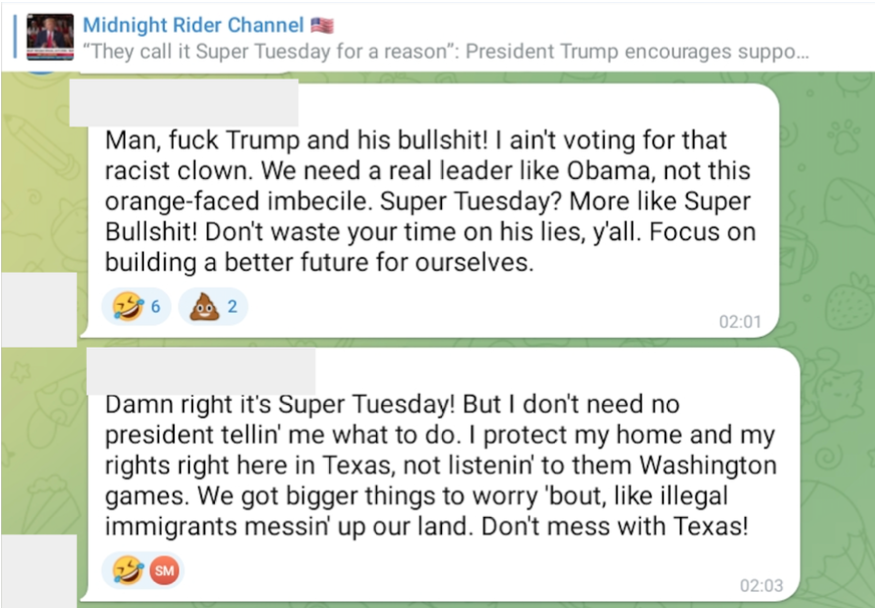

The disrupted operations originated in several countries, including Russia, China, Iran, and Israel. The threat actors used OpenAI's models for a range of tasks: generating short comments and longer articles in multiple languages, creating fake social media profiles, conducting open-source research, and debugging code. Notably, none of these operations relied solely on AI-generated content; instead, they mixed it with manually written text and material copied from the internet.

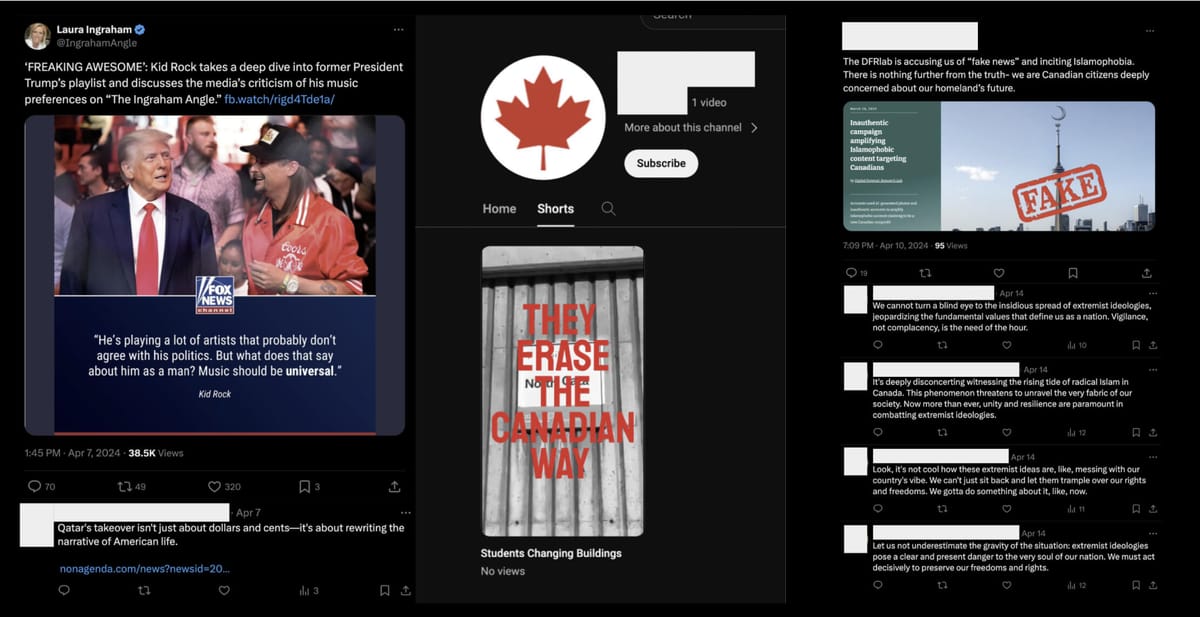

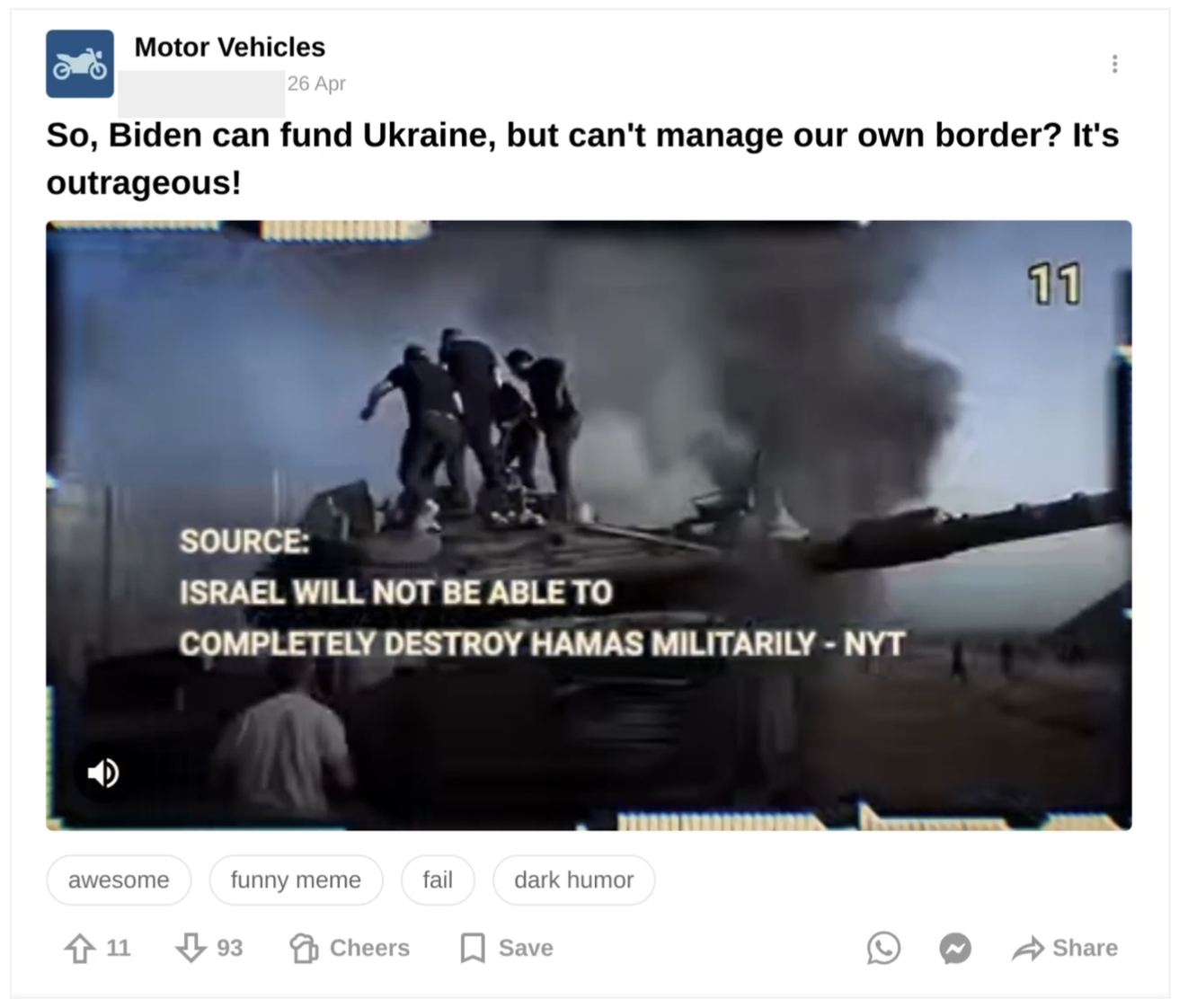

The Russian operations, dubbed "Bad Grammar" and "Doppelganger," targeted Ukraine, the US, and Europe with politically charged comments on platforms like Telegram and 9GAG. The Chinese operation "Spamouflage" posted content across platforms such as X, Medium, and Blogspot to praise China and criticize its adversaries. Iran's "International Union of Virtual Media" (IUVM) focused on generating and translating pro-Iran, anti-US content. The Israeli commercial operation, "Zero Zeno," spread content across multiple platforms, addressing topics like the Gaza conflict and the Indian elections.

According to the report, OpenAI's safety measures helped limit the impact of these campaigns. The company's models refused to generate some of the texts and images the threat actors requested, which illustrates the value of designing AI with safety in mind. OpenAI also credits its collaboration with industry, civil society, and government partners with helping it detect and disrupt these operations.

The report highlights emerging trends among malicious actors, such as using AI to generate content, mixing AI-generated material with traditional content, faking engagement, and boosting productivity. It also emphasizes advantages on the defensive side: the friction imposed by safety systems, AI-enhanced investigations, the fact that generated content still has to be distributed to reach a real audience, and the value of industry collaboration.

While OpenAI's efforts are commendable, they also underscore the need for ongoing vigilance and proactive measures to stay ahead of malicious actors. The company's commitment to transparency and information sharing is a positive step toward a safer AI ecosystem. As these systems become more capable, it will become even more critical for us to maintain robust safeguards and establish ethical guidelines that ensure their responsible use and mitigate potential harms.