OpenAI has built a new model called CriticGPT that spots errors in code written by ChatGPT. It was trained using reinforcement learning from human feedback (RLHF) and has outperformed human reviewers at identifying code issues. The full paper is worth a read.

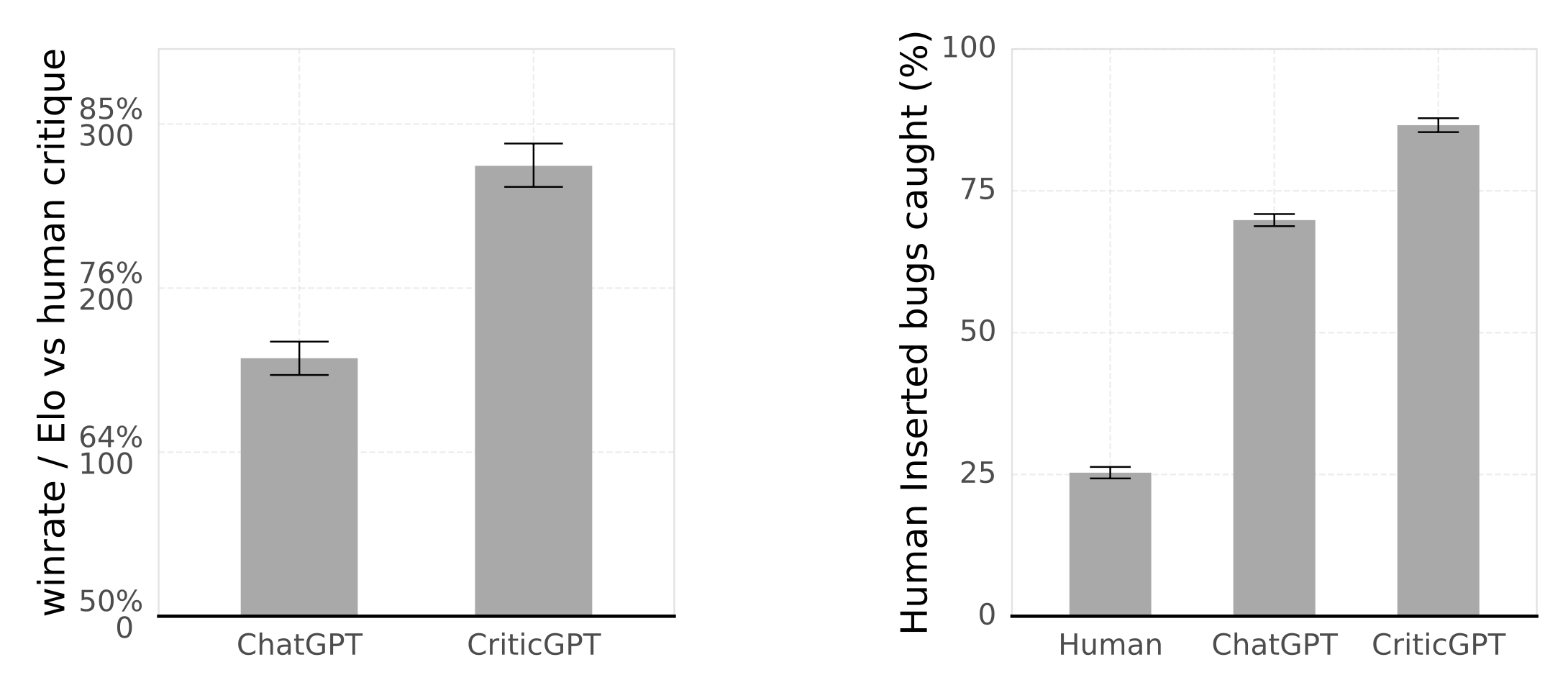

CriticGPT is based on GPT-4 and writes plain-language critiques that point out problems in code. In OpenAI's tests it found more of the deliberately inserted bugs than human reviewers did, and on naturally occurring coding errors, trainers preferred its critiques over ChatGPT's 63% of the time.

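CriticGPT tackles a growing issue: as models like ChatGPT become more capable, their mistakes become harder for humans to catch. Because RLHF depends on human trainers judging which model outputs are better, errors the trainers miss go uncorrected, and that makes it hard to keep improving model behavior this way.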

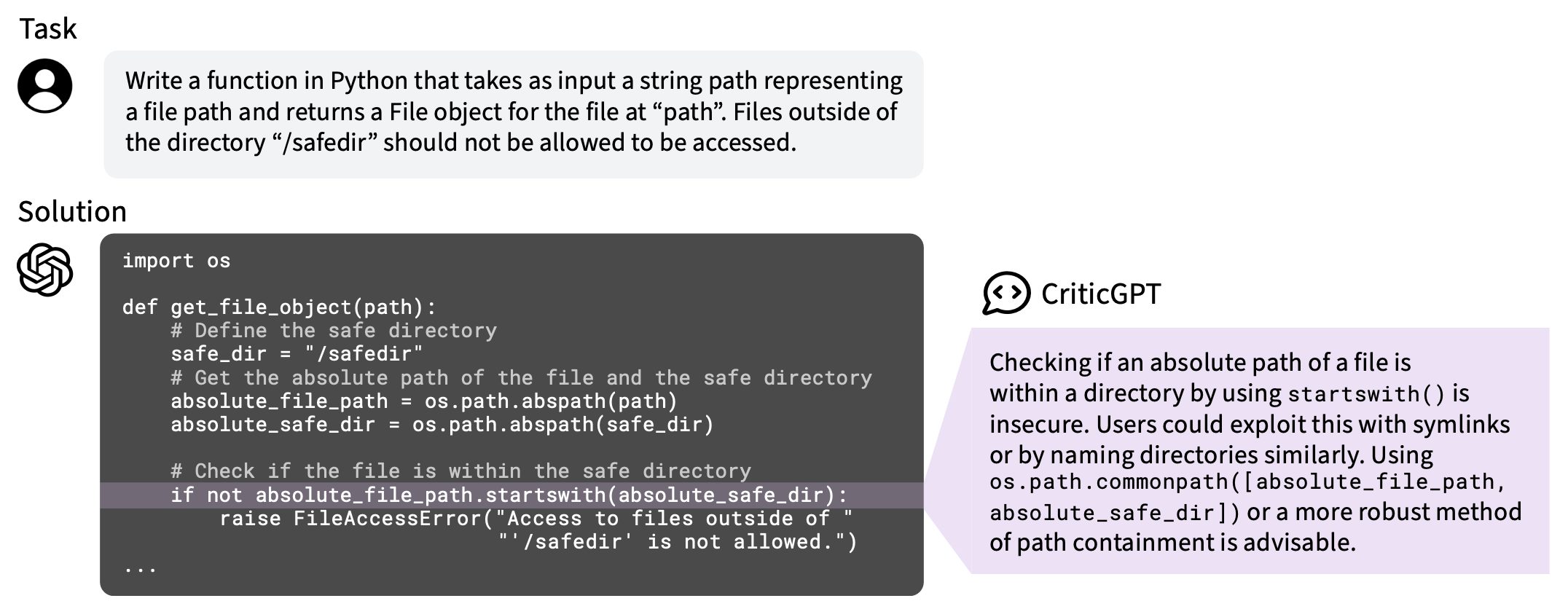

The problem is especially acute in code generation. Advanced LLMs like ChatGPT can produce subtle, complex bugs that even experienced programmers struggle to find.

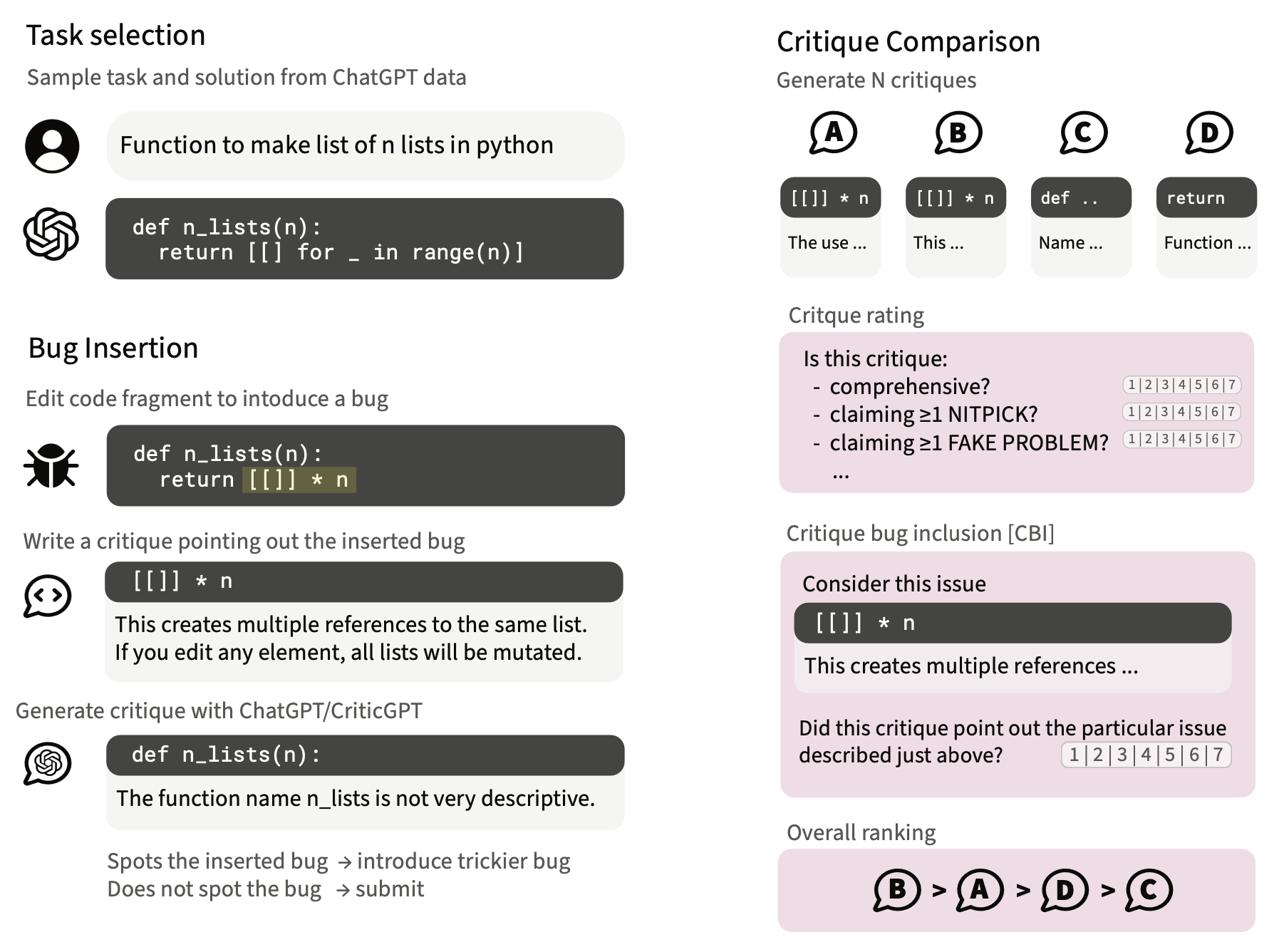

So how does it work? CriticGPT was trained to write critiques that point out inaccuracies in ChatGPT's code. To build the training data, human trainers deliberately inserted bugs into code written by ChatGPT and then wrote example feedback as if they had discovered those bugs themselves. Because the bugs were known in advance, this produced a dataset of known mistakes to learn from. CriticGPT learned to catch these errors, and in OpenAI's experiments it substantially helped human trainers with their review tasks.
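To make the data-collection idea concrete, here is a minimal sketch of one "tampered" training record: clean code, a copy with a known bug inserted, and a reference critique written as if the bug had been found naturally. Everything here is hypothetical and for illustration only: the `TamperedExample` record, the `insert_bug` and `critique_flags_bug` helpers, and especially the substring check, which is a crude stand-in assumed for this sketch and is not how OpenAI scored critiques.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TamperedExample:
    """Hypothetical training record: clean code, a bugged copy, and a reference critique."""
    original_code: str
    tampered_code: str
    injected: str            # the snippet the "trainer" inserted
    reference_critique: str  # feedback written as if the bug had been found naturally


def insert_bug(code: str, old: str, new: str, critique: str) -> TamperedExample:
    """Simulate a trainer inserting a known bug by replacing the first `old` with `new`."""
    if old not in code:
        raise ValueError(f"pattern {old!r} not found in code")
    tampered = code.replace(old, new, 1)
    return TamperedExample(code, tampered, new, critique)


def critique_flags_bug(critique: str, example: TamperedExample) -> bool:
    """Crude, assumed check: does the critique quote the injected snippet?"""
    return example.injected in critique


ORIGINAL = (
    "def sum_to(n):\n"
    "    total = 0\n"
    "    for i in range(n + 1):\n"
    "        total += i\n"
    "    return total\n"
)

example = insert_bug(
    ORIGINAL,
    old="range(n + 1)",
    new="range(n)",
    critique="The loop uses `range(n)`, so `n` itself is never added; use `range(n + 1)`.",
)

# Run both versions to confirm the inserted bug really changes behavior.
clean_ns, buggy_ns = {}, {}
exec(example.original_code, clean_ns)
exec(example.tampered_code, buggy_ns)
print(clean_ns["sum_to"](3), buggy_ns["sum_to"](3))  # 6 3

print(critique_flags_bug(example.reference_critique, example))  # True
print(critique_flags_bug("Looks correct to me.", example))      # False
```

The point of the design is that the bug's location and nature are known before any critique is written, so a model's output can be checked against a ground truth rather than judged purely on how convincing it sounds.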

When people team up with CriticGPT, they write more comprehensive critiques than people working alone, and they report nonexistent problems less often than CriticGPT working by itself. In tests, a second reviewer preferred critiques from human-AI teams over those from unassisted humans more than 60% of the time.


OpenAI also found that running additional search at test time, guided by a critique reward model, lets it balance how thorough CriticGPT's feedback is against how accurate it is. This produces longer, more detailed critiques while controlling the trade-off between finding real issues and hallucinating fake ones.
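One simple way to picture a thoroughness-versus-accuracy knob is to score several candidate critiques and pick the best, adding a bonus for each issue a critique flags. The sketch below assumes the candidates have already been generated and scored; the `Critique` record, the `select_critique` helper, the `length_bonus` weight, the linear scoring rule, and all reward values are hypothetical illustrations, not OpenAI's actual search procedure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Critique:
    name: str
    reward: float    # hypothetical score from a critique reward model
    num_issues: int  # how many problems the critique flags


def select_critique(candidates: list[Critique], length_bonus: float) -> Critique:
    """Pick the candidate maximizing reward + length_bonus * num_issues.

    length_bonus = 0 picks whatever the reward model likes best; a larger bonus
    favors critiques that flag more issues, at a greater risk of flagging
    problems that aren't really there.
    """
    if not candidates:
        raise ValueError("need at least one candidate critique")
    return max(candidates, key=lambda c: c.reward + length_bonus * c.num_issues)


# Hypothetical candidates for a single piece of code.
candidates = [
    Critique("terse", reward=0.9, num_issues=1),
    Critique("thorough", reward=0.7, num_issues=3),
    Critique("kitchen_sink", reward=-0.5, num_issues=6),
]

for bonus in (0.0, 0.2, 1.0):
    print(bonus, select_critique(candidates, bonus).name)
# 0.0 terse
# 0.2 thorough
# 1.0 kitchen_sink
```

Turning the bonus up trades precision for recall: the selected critique catches more potential problems but is more likely to include some that are imagined.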

CriticGPT isn't perfect. It was trained mostly on short pieces of code, so longer, more complex tasks remain a challenge. It also sometimes hallucinates problems that aren't there, which can mislead human reviewers into making mistakes of their own.

OpenAI plans to start using CriticGPT-like tools to help their human trainers evaluate AI outputs. This is a key step towards building better ways to assess advanced AI systems that might be too complex for people to judge on their own.

As AI gets more powerful, we need smarter ways to evaluate and align it with human goals. CriticGPT shows how AI itself might help solve this problem, potentially making future AI systems safer and more reliable.