OpenAI has just lifted the lid on GPT-4o, a new state-of-the-art AI model that can understand and generate content across text, images, and audio. GPT-4o (the "o" stands for "omni") builds on the capabilities of its predecessor, GPT-4, while bringing powerful new multimodal features to everyone, including free users.

Yup, you read that right. OpenAI is making GPT-4o available to all users, including those who don't pay. This means that anyone can now access OpenAI's most advanced AI technology. Paid users will continue to enjoy higher capacity limits, with up to five times the capacity of free users.

According to OpenAI CTO Mira Murati, GPT-4o "reasons across voice, text and vision" in real time. It can hold voice conversations, responding to audio prompts in as little as 232 milliseconds, with an average of 320 milliseconds. This makes interactions between people and the AI feel far more natural.

During the launch event, OpenAI showed off GPT-4o's real-time audiovisual capabilities, with ChatGPT helping to solve a math problem, interpreting code, and sensing the presenter's emotions. The new ChatGPT can also be interrupted mid-response and will adjust its answer in real time, making the conversation feel more natural and intuitive.

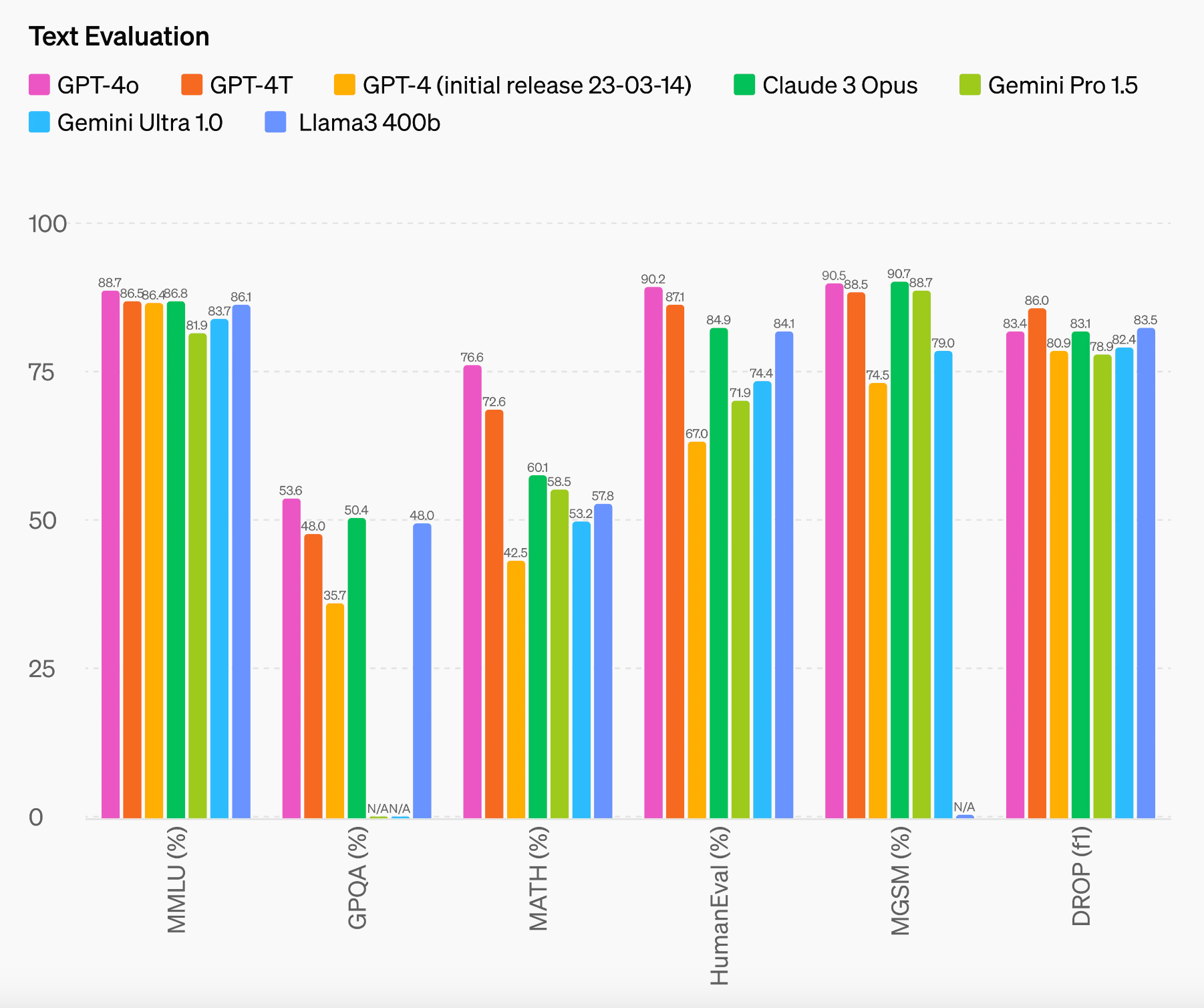

On the performance front, OpenAI says GPT-4o matches GPT-4 Turbo on English text and coding tasks while significantly improving multilingual performance, at half the cost. It sets a new high score of 87.2% on 5-shot MMLU (general knowledge questions), dramatically improves speech recognition over Whisper-v3 across all languages, and sets new state-of-the-art results on speech translation and visual perception benchmarks.


GPT-4o will also be available via the API. It is half the price and twice as fast as GPT-4 Turbo, with five times the rate limit. This improved performance is thanks to efficiency improvements at every layer of the stack, according to OpenAI.

In addition to the new GPT-4o model, OpenAI also announced a new desktop version of ChatGPT and an updated user interface aimed at simplifying interactions. According to Murati, as these models become increasingly complex, the goal is to make the user experience more intuitive and seamless, so that users can focus on the interaction rather than the interface.

OpenAI is taking a measured approach to rolling out GPT-4o. Text and image features are being integrated into ChatGPT and the API today, with audio and video capabilities to follow in the coming weeks after further testing. Safety remains a key priority, with the model undergoing extensive red teaming.

GPT-4o marks an exciting step towards more intelligent, user-friendly AI assistants, and we love that OpenAI is making AI both more accessible and more powerful. OpenAI says the rollout will be gradual, with the model appearing in its products over the coming weeks. Once we get our hands on the new model, we'll put it through its paces and provide a full review.