OpenAI has announced a significant restructuring of its safety and security practices, marking a pivotal shift in the company's approach to AI governance. This latest move builds upon a series of initiatives the company has implemented over the past year to strengthen its governance and safety protocols.

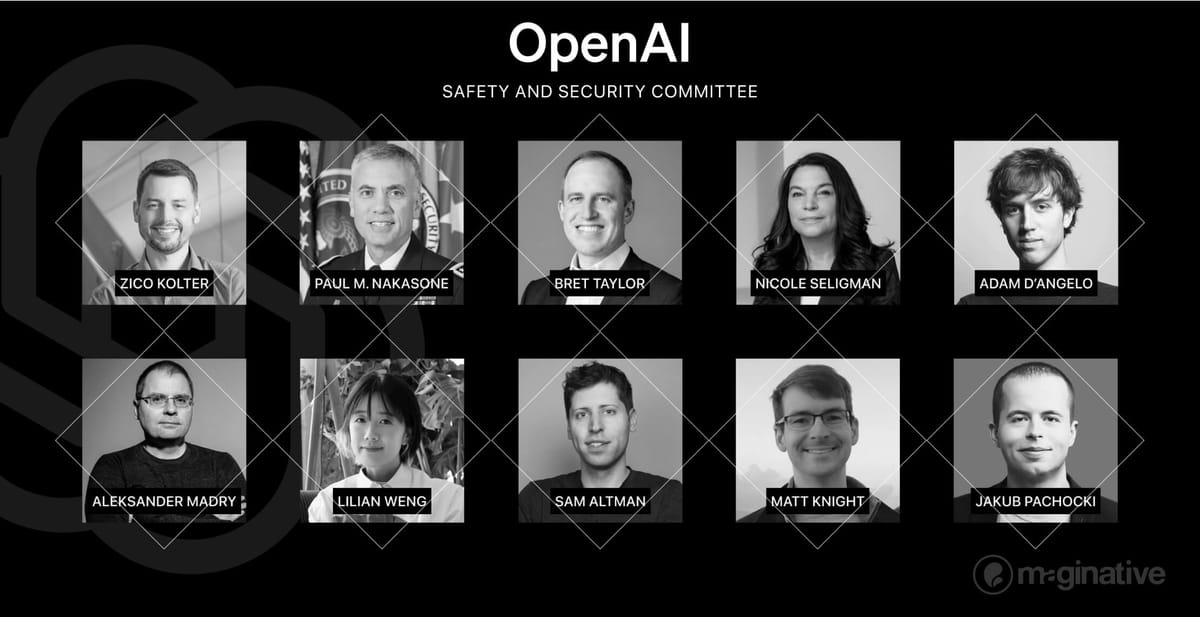

The cornerstone of these changes is the elevation of the Safety and Security Committee (SSC) to an independent Board oversight body. Initially formed in May 2024 to evaluate AI practices and advise on critical decisions, the SSC will now have broader powers and independence. Zico Kolter, Director of the Machine Learning Department at Carnegie Mellon University and a renowned expert in AI safety and alignment, will chair the committee. Kolter, who joined OpenAI's board in August, brings crucial technical expertise to the governance structure.

Other key members of the SSC include Quora CEO Adam D'Angelo, retired U.S. Army General Paul Nakasone, and former Sony Corporation EVP Nicole Seligman. Nakasone's appointment in June 2024 was particularly notable, bringing world-class cybersecurity expertise to the board at a time when OpenAI was facing increased scrutiny over its security practices.

The newly empowered SSC will have the authority to delay model releases until safety concerns are adequately addressed. The move is seen as a direct response to the internal conflict that came to a head in late 2023, when CEO Sam Altman was briefly ousted by the board amid disagreements over the pace of AI development and how to mitigate its risks.

OpenAI is also significantly enhancing its cybersecurity efforts. The company plans to implement expanded internal information segmentation, increase staffing for round-the-clock security operations, and continue investing in research and product infrastructure security. These measures align with the expertise brought by General Nakasone and reflect the growing recognition of cybersecurity as a critical component of AI safety.

Transparency remains a key focus, with OpenAI committing to more comprehensive explanations of its safety work. This commitment follows criticism over the departures of key personnel involved in AI safety and "superalignment" work earlier this year.

The company is also expanding its collaborations with external organizations. OpenAI is developing new partnerships with third-party safety organizations and non-governmental labs for independent model assessments, and it has struck agreements with the U.S. and U.K. AI Safety Institutes to research emerging AI safety risks and establish standards for trustworthy AI.

Internally, OpenAI is unifying its safety frameworks for model development and monitoring. This integrated approach aims to establish clear success criteria for model launches, with risk assessments approved by the SSC. The reorganization of research, safety, and policy teams is designed to improve collaboration across departments, addressing concerns raised by departing employees about resource allocation and prioritization of safety work.

These changes reflect OpenAI's response to the growing complexity and potential risks of advanced AI systems. As the company pushes rapidly toward more capable models, including the next frontier model it recently announced it has begun training, these enhanced safety and security measures are crucial steps in managing the evolving challenges of artificial intelligence.

You can already see the impact of some of these changes. The company's recent release of the o1 model family was accompanied by a comprehensive 43-page System Card. This document meticulously outlined the extensive safety work carried out, including detailed safety evaluations, external red teaming exercises, and rigorous Preparedness Framework evaluations. This level of disclosure sets a new standard for transparency in the AI industry.

Many people, including us at Maginative, have been highly critical of OpenAI's actions. However, the restructuring of its safety and security practices is a welcome change that deserves credit. Elevating the SSC's role is a significant step in the company's ongoing effort to keep robust safety measures in place as AI capabilities advance. It also demonstrates the company's responsiveness to a fast-changing field and its commitment to earning and maintaining public trust.