OpenAI today announced the release of fine-tuning capabilities for its GPT-3.5 Turbo large language model. The update allows developers to bring their own data and tailor the model to individual use cases, creating distinct experiences for their users.

The update centers on customization. Fine-tuning lets developers optimize GPT-3.5 Turbo so that it performs better on specific tasks, and then run the resulting custom models at scale.

Importantly, OpenAI notes that, as with all its APIs, data sent in and out of the fine-tuning API is owned by the customer and is not used by OpenAI, or any other organization, to train other models.

According to OpenAI, early tests indicate that a fine-tuned GPT-3.5 Turbo can match or even outperform base GPT-4 on certain narrow tasks. Early testers also cut prompt length by up to 90% by fine-tuning instructions into the model itself, which makes API calls faster and cheaper.

In OpenAI's private beta testing, common fine-tuning use cases included:

- Improving instruction following and control over model outputs

- Formatting responses reliably for code completion or API calls

- Aligning the model's tone and voice with a business's brand identity

OpenAI says that fine-tuning with GPT-3.5 Turbo can handle 4k tokens, double the capacity of its previous fine-tuned models. Combining fine-tuning with other techniques such as prompt engineering can further enhance capabilities. Later this year, the company says, it will add support for fine-tuning with function calling, as well as fine-tuning for gpt-3.5-turbo-16k and GPT-4.

The fine-tuning process involves preparing suitable data, uploading it, then creating and monitoring training jobs. Once complete, the customized model can be directly deployed and accessed via OpenAI's API. The company is working on a visual interface that will simplify tracking of in-progress jobs and provide additional relevant information.
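The upload, create, and monitor steps described above might be sketched as follows. This is a minimal sketch, assuming the v1-style OpenAI Python client (`files.create`, `fine_tuning.jobs.create`, `fine_tuning.jobs.retrieve`) and a training file that has already been prepared. The function name `run_fine_tune`, the polling interval, and the error handling are illustrative choices, not part of OpenAI's announcement.

```python
import time

# Job statuses after which the job will not change again.
TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def run_fine_tune(client, training_path, base_model="gpt-3.5-turbo",
                  poll_seconds=30.0):
    """Upload training data, start a fine-tuning job, and wait for it to end.

    `client` is assumed to behave like an `openai.OpenAI()` instance.
    Returns the fine-tuned model name, or raises if the job did not succeed.
    """
    # Step 1: upload the prepared JSONL training file.
    with open(training_path, "rb") as handle:
        uploaded = client.files.create(file=handle, purpose="fine-tune")

    # Step 2: create the training job against the uploaded file.
    job = client.fine_tuning.jobs.create(
        training_file=uploaded.id, model=base_model
    )

    # Step 3: poll until the job reaches a terminal status.
    while job.status not in TERMINAL_STATUSES:
        time.sleep(poll_seconds)
        job = client.fine_tuning.jobs.retrieve(job.id)

    if job.status != "succeeded":
        raise RuntimeError(f"fine-tuning job {job.id} ended as {job.status}")
    return job.fine_tuned_model
```

With the `openai` package installed and an API key configured, a call such as `run_fine_tune(OpenAI(), "train.jsonl")` would return a model name that can then be passed as the `model` argument of an ordinary chat completion request. Passing the client in as a parameter keeps the sketch free of network dependencies until it is actually called.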

Safety remains a priority for OpenAI. All fine-tuning training data is passed through OpenAI's Moderation API and a GPT-4-powered moderation system to flag and filter out unsafe content. Users fully own their data throughout the process.

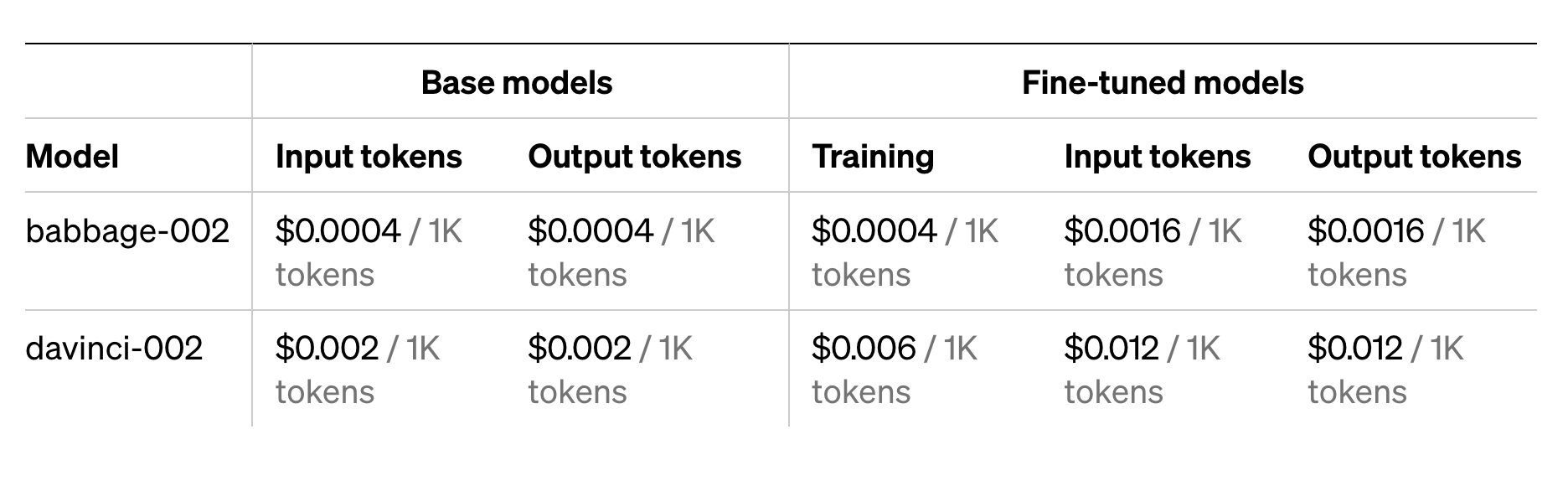

Pricing for base and fine-tuned GPT-3 models is as follows:

This launch marks an important step in OpenAI's roadmap toward highly customizable AI systems. Fine-tuning lets developers and businesses tap into GPT's capabilities while tailoring the model to their own needs.