The enthusiasm for AI agents has consistently outpaced actual deployments. OpenAI's AgentKit, announced at DevDay 2025, tries to close that gap with a visual workflow builder, embeddable chat interfaces, and evaluation tools, betting that making agents easier to build will finally move them from demos into daily operations.

Key Points:

- Production bottleneck: Despite the excitement, few AI agents reach deployment because of orchestration complexity, evaluation challenges, and UI development overhead

- Visual-first approach: Agent Builder provides drag-and-drop workflow creation, directly competing with Zapier, n8n, and Make

- Enterprise focus: Connector Registry and granular controls target large organizations hesitant to deploy agents without governance frameworks

Thousands of teams have built agent prototypes in the past year. Only a fraction have shipped them to production. The gap between proof-of-concept and deployed system remains stubbornly wide, and OpenAI's analysis suggests tooling fragmentation is the primary culprit.

Taking an agent beyond something that works once in a controlled environment requires orchestrating multiple components: connecting to data sources, defining multi-step workflows, handling errors gracefully, building a user interface, and establishing evaluation frameworks to catch when things go wrong. Each layer adds complexity and potential failure points, and developers often find themselves writing custom code to bridge gaps between tools that weren't designed to work together.

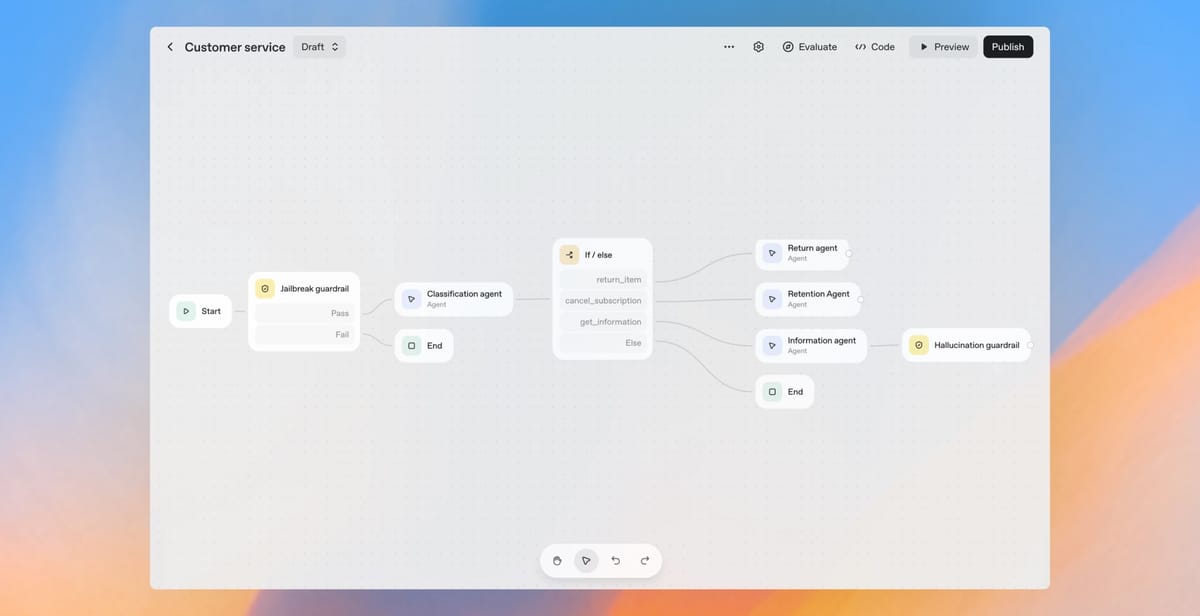

AgentKit consolidates those pieces into an integrated platform. Agent Builder provides a visual canvas where you can design multi-agent workflows using drag-and-drop nodes, preview behavior, configure guardrails, and version everything. ChatKit gives you embeddable chat interfaces so you're not building UI from scratch. The Connector Registry manages data access across your organization. Enhanced evaluation tools let you assess performance, trace decision-making step by step, and automatically optimize prompts.

The testimonials OpenAI shared carry weight. Ramp says it cut its agent development cycle from months to hours. LY Corporation says it built a work assistant in under two hours. These aren't aspirational claims from startups; they come from established companies with rigorous engineering standards describing measurable productivity gains.

What AgentKit really represents is OpenAI's entry into the workflow automation market currently dominated by platforms like Zapier, n8n, and Make. The visual workflow builder, in particular, puts OpenAI in direct competition with tools that millions of teams already use to connect business applications and automate tasks.

The distinction OpenAI emphasizes is that AgentKit is purpose-built for agentic behavior—systems that can reason, make decisions, and handle uncertainty—rather than deterministic automation that follows fixed if-then rules. Where traditional workflow tools excel at reliably executing predictable sequences, AgentKit is optimized for scenarios where the right action depends on context, previous outcomes, and dynamic assessment of multiple options.

That philosophical difference matters less than the practical capabilities. Developers choosing between platforms will evaluate concrete features: how many integrations are available, how flexible the workflow engine is, whether the evaluation tools actually help improve performance, and whether the pricing makes sense for their use case.

AgentKit's integration story is narrower but deeper than its competitors'. Rather than offering thousands of pre-built connectors like Zapier, it focuses on MCP (Model Context Protocol) support and first-class integrations with Google Drive, SharePoint, Microsoft Teams, and Dropbox. The bet is that organizations need fewer integrations if the ones they have are higher quality and properly secured for enterprise deployment.

The Connector Registry reflects that enterprise focus. It gives administrators centralized control over what data sources and tools agents can access across an organization, with version management and permission controls. That governance layer addresses one of the primary reasons enterprises haven't deployed agents more widely: they have no assurance that an agent will stay within the data it is authorized to access.
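The idea of centralized, deny-by-default access control can be illustrated with a small sketch. This is not the Connector Registry's actual API, which the article does not describe; the `ConnectorRegistry` class, its method names, and the agent and connector names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ConnectorRegistry:
    """Hypothetical central allowlist of which agent may use which connector."""

    # Maps connector name -> set of agent names allowed to use it.
    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, connector: str, agent: str) -> None:
        """Allow an agent to use a connector."""
        self.grants.setdefault(connector, set()).add(agent)

    def revoke(self, connector: str, agent: str) -> None:
        """Remove an agent's access; a no-op if it was never granted."""
        self.grants.get(connector, set()).discard(agent)

    def is_allowed(self, connector: str, agent: str) -> bool:
        # Deny by default: unknown connectors and ungranted agents both fail.
        return agent in self.grants.get(connector, set())


# Illustrative names only.
registry = ConnectorRegistry()
registry.grant("sharepoint", "hr-assistant")
```

The point of the design is that every access decision goes through one place an administrator controls, so an agent that was never granted a connector cannot reach it by accident.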

Guardrails, another AgentKit component, provides a safety layer that can mask personally identifiable information, detect jailbreak attempts, and apply custom safeguards. The fact that OpenAI made this open source and available as both a standalone tool and an integrated feature suggests the company understands that safety concerns are deployment blockers, not just compliance checkboxes.
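To make "masking personally identifiable information" concrete, here is a minimal sketch of the general technique using regular expressions. It does not use or reproduce OpenAI's Guardrails library; the `mask_pii` function and both patterns are assumptions chosen for illustration, and real PII detection covers far more cases.

```python
import re

# Deliberately simple patterns for illustration; production PII detection
# needs to handle many more formats and languages.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def mask_pii(text: str) -> str:
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_PHONE.sub("[PHONE]", text)


masked = mask_pii("Reach Ana at ana@example.com or 555-123-4567.")
# masked == "Reach Ana at [EMAIL] or [PHONE]."
```

A filter like this would typically run on text before it reaches the model or before a response is shown to the user.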

The evaluation capabilities matter more than they might initially appear. Datasets let you build test suites for agent behavior. Trace grading runs end-to-end assessments so you can see exactly where workflows break down. Automated prompt optimization uses those results to improve performance without manual tuning. Support for third-party models means you can benchmark OpenAI's agents against alternatives.
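The idea behind grading a trace step by step can be sketched in a few lines. This is an illustration of the concept, not AgentKit's evaluation API: the `Trace` shape, the grader functions, and the step names are all hypothetical.

```python
from typing import Callable, Optional

# A trace is an ordered list of (step_name, output) pairs recorded during one run.
Trace = list[tuple[str, str]]
# A grader returns True when a step's output is acceptable.
Grader = Callable[[str], bool]


def first_failing_step(trace: Trace, graders: dict[str, Grader]) -> Optional[str]:
    """Return the name of the first step whose grader rejects its output."""
    for step_name, output in trace:
        grader = graders.get(step_name)
        # Steps without a grader are skipped rather than treated as failures.
        if grader is not None and not grader(output):
            return step_name
    return None


def pass_rate(traces: list[Trace], graders: dict[str, Grader]) -> float:
    """Fraction of traces in which every graded step passed."""
    if not traces:
        return 0.0
    passed = sum(first_failing_step(t, graders) is None for t in traces)
    return passed / len(traces)


# Hypothetical two-step workflow: retrieve a document, then answer.
graders = {
    "retrieve": lambda out: out != "",
    "answer": lambda out: "refund" in out.lower(),
}
good = [("retrieve", "policy.pdf"), ("answer", "Refunds take 5 days.")]
bad = [("retrieve", ""), ("answer", "Refunds take 5 days.")]
```

Here `first_failing_step(bad, graders)` returns `"retrieve"`, which points at the retrieval step rather than the final answer, and `pass_rate([good, bad], graders)` is `0.5`. A dataset in this framing is simply a collection of such traces that gets re-graded after each change.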

That last feature is particularly telling. By letting developers evaluate external models directly within OpenAI's platform, the company signals confidence that its models will perform competitively even when measured against Anthropic's Claude or Google's Gemini.

Early adopters report significant improvements. Carlyle, the private equity firm, cut development time on their due diligence agent by half and increased accuracy by 30 percent. HubSpot deployed Breeze, their AI assistant, with AgentKit and found it could navigate complex knowledge bases to deliver contextually appropriate responses to customer questions.

The market reaction to AgentKit will depend less on technical capabilities than on whether it actually solves the deployment problem. If agents built with these tools reliably make it to production and deliver business value, developers will adopt the platform regardless of competitive alternatives. If the complexity just shifts from custom code to configuring nodes and managing connectors, the fundamental obstacle remains.

What's clear is that OpenAI views agents as strategically critical and believes the limiting factor isn't model capability—it's the supporting infrastructure that turns promising prototypes into dependable systems. AgentKit represents their theory about what that infrastructure should look like.