Sam Altman, CEO of OpenAI, has repeatedly stated that the company is not currently working on GPT-5. While this has come as a surprise to many, once you step back from the current AI hype cycle, it begins to make a bit more sense. Unlike many of their rivals, OpenAI already knows how to train state-of-the-art models that excel at natural language. This also makes them keenly aware of the many current limitations of large language models (LLMs), and intimately familiar with the scaling laws that govern them.

In general, an LLM can be characterized by 4 parameters: size of the model, size of the training dataset, cost of training, performance after training. Each of these four variables can be precisely defined into a real number, and they are empirically found to be related by simple statistical laws, called "scaling laws". (source: Wikipedia)
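To make the idea of a "simple statistical law" concrete, here is a minimal sketch of two forms that appear widely in the scaling-law literature: the rule of thumb that training compute is roughly 6 × parameters × tokens, and a power-law expression for loss. The function names and the constants passed in the usage lines are illustrative placeholders, not fitted values from any paper or from OpenAI.

```python
def training_flops(n_params, n_tokens):
    """Approximate training compute with the common C ~ 6 * N * D rule of thumb."""
    return 6 * n_params * n_tokens


def power_law_loss(n_params, n_tokens, E, A, B, alpha, beta):
    """Loss of the form L = E + A / N**alpha + B / D**beta.

    E, A, B, alpha and beta are fitted empirically for a given model family;
    none of them are supplied here.
    """
    return E + A / n_params**alpha + B / n_tokens**beta


# Usage with placeholder constants (assumptions for illustration only).
flops = training_flops(1e9, 2e10)  # 1B parameters, 20B tokens
small = power_law_loss(1e9, 2e10, E=1.0, A=1.0, B=1.0, alpha=0.5, beta=0.5)
large = power_law_loss(1e10, 2e10, E=1.0, A=1.0, B=1.0, alpha=0.5, beta=0.5)
```

Under a law of this shape, growing the model while holding the data fixed lowers the loss, but with diminishing returns, which is one reason a bigger model is not automatically the best next step.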

Rather than rushing to release GPT-5, OpenAI appears to be focused on learning how to extract maximum benefit from its existing models and on closing the gaps that stand between today's LLMs and artificial general intelligence (AGI). To understand why the company would take this approach, let me establish some context.

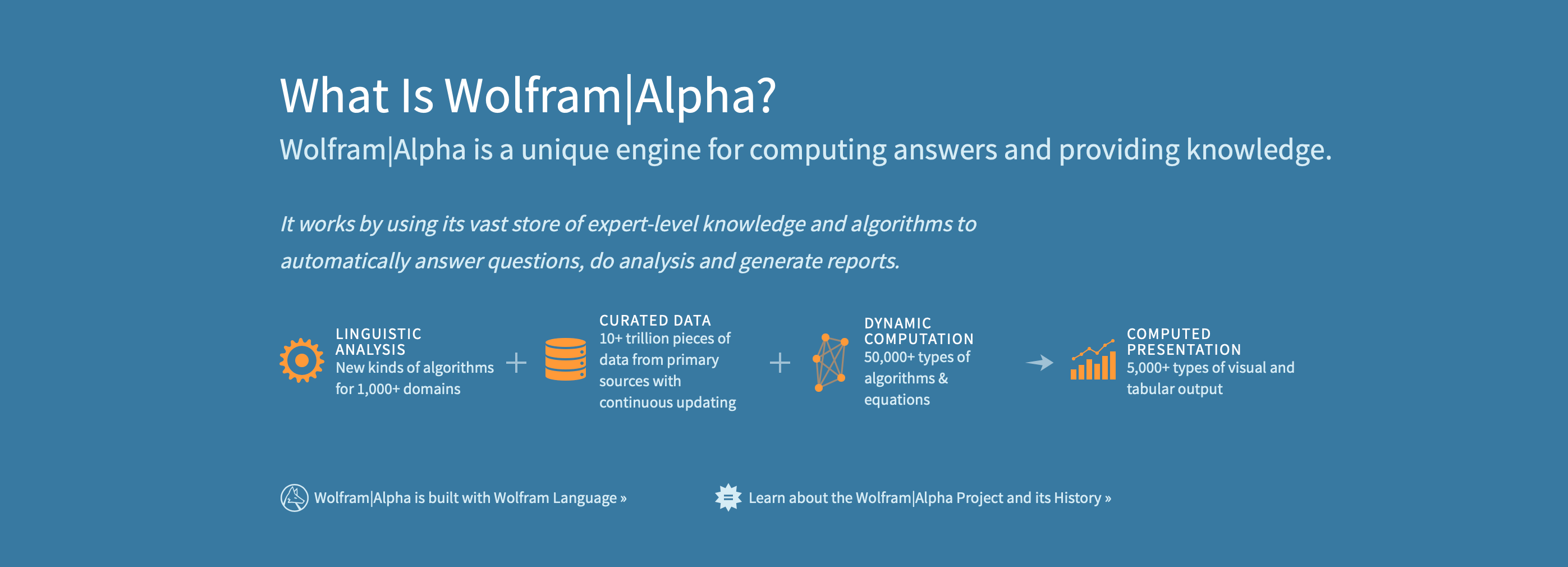

We can put AI models into two categories depending on the techniques used in their development: Neural/Connectionist AI (which is good at tasks like pattern recognition) and Symbolic AI (which is good at tasks like computation).

Neural/Connectionist AI: This approach to AI is based on artificial neural networks, which are inspired by the structure and function of the brain. Neural AIs learn from data by adjusting the weights and biases within their networks to reduce the error in their predictions. They are particularly good at tasks that involve pattern recognition, such as image or speech recognition, natural language processing, and many other tasks where there are complex patterns to be found in large amounts of data. They don't follow explicit rules but learn from examples in a kind of implicit way. LLMs like GPT fall into this category.
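As a toy illustration of "adjusting the weights and biases to reduce the error", the sketch below fits a single weight and bias to four examples using gradient descent. It is a deliberately tiny stand-in, not how an LLM is actually trained; the data, learning rate, and step count are assumptions chosen for the example.

```python
# Four training examples generated by the rule y = 2x + 1.
# The model is never told the rule; it only sees the examples.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]


def mse(w, b):
    """Mean squared error of the prediction w * x + b over the examples."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(steps=2000, lr=0.05):
    """Repeatedly nudge w and b in the direction that reduces the error."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / len(xs)
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = train()
```

After training, `w` and `b` end up close to 2 and 1: the rule has been recovered implicitly from examples rather than written down by a programmer.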

Symbolic AI: This is the classical approach to AI, dating back to the middle of the 20th century. Symbolic AI is based on the manipulation of symbols, abstract entities used to represent various aspects of the real world. It includes expert systems that apply rules to a knowledge base to infer new information, and it's often used for tasks that involve explicit reasoning, such as solving a mathematical equation or playing chess. Symbolic AI systems follow explicit rules and decision trees defined by programmers, and they are excellent for tasks where the logic is clear and the scope is well defined. Google's Knowledge Graph, for example, uses symbolic AI.
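For contrast, here is a minimal sketch of the rule-based style: a forward-chaining loop that applies explicit if-then rules to a set of known facts until nothing new can be inferred. The facts and rules are made up for illustration, and real expert systems are considerably more elaborate.

```python
def forward_chain(facts, rules):
    """Apply (premises, conclusion) rules until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical knowledge base: every rule is written explicitly by a person.
rules = [
    (["has_feathers"], "is_bird"),
    (["is_bird", "can_fly"], "can_migrate"),
]
derived = forward_chain(["has_feathers", "can_fly"], rules)
```

Every conclusion here can be traced back to a specific rule, which is the kind of transparency and exactness that neural models lack.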

Both techniques have their strengths and weaknesses. Most likely, the path to creating AGI will involve a combination of techniques, including both symbolic AI and novel approaches like LLMs.

So what is OpenAI working on?

Headlining the latest updates to their popular GPT-3.5 Turbo and GPT-4 models is a new function calling capability that could herald a key change in how we interact with their LLMs.

It's important to see this development as part of a necessary paradigm shift in the current AI landscape. This shift involves treating LLMs not just as standalone tools, but as either:

- Intelligent components within a bigger system that can accomplish complex tasks with precision and efficiency.

- Orchestrators that can manage, coordinate, and interface with other tools to accomplish complex tasks.

By using GPT as part of a larger system or as an orchestrator managing other tools, we can compensate for its inherent limitations while maximizing its strengths. Yes, GPT models have shown remarkable performance in understanding and generating human-like text, enabling a wide range of applications, from drafting emails to creating written content, answering queries, and even assisting with programming. However, they have notable limitations:

- Explicit Reasoning & Computation: GPT does not have a built-in computation engine. It doesn't carry out mathematical operations in the way a calculator or a human would. When it appears to perform calculations, it's because it has seen similar patterns in its training data and learned to replicate them, which is why its arithmetic can fail on unfamiliar or very large inputs.

- No Error Correction Mechanism: GPT doesn't have a mechanism to correct its errors or to verify its results against a source of truth. If it makes a mistake in a computation, it has no way of recognizing or correcting it.

- Understanding of the World: GPT doesn't have a consistent or coherent model of the world. It generates outputs based on patterns it has seen in its training data, not on an understanding of how the world works.

- Explanation and Interpretability: GPT's workings can be quite opaque—it's often hard to understand why it made a particular prediction.

- Consistency: Because GPT generates outputs based on patterns in its training data, it can produce inconsistent answers to similar questions, or even the same question asked at different times.

But what if we could add capabilities like these by giving GPT tools to use, or by integrating it into a bigger system with, for example, a dedicated computation engine?

After all, the genius of human intelligence isn't that we know everything, but that we have been able to extend our cognition with tools (e.g. writing, computers, and language itself).

In fact, this way of thinking about using LLMs in future AI systems mirrors how humans naturally operate.

Just as humans excel at tasks involving abstract thinking, intuition, and language, so do LLMs excel at similar tasks in the realm of AI. However, when it comes to complex calculations or logical reasoning tasks, we often rely on external tools - calculators for complex math, computers for data analysis, or even pen and paper for working out logical problems.

Similarly, while LLMs can handle tasks involving language and pattern recognition, they might benefit from 'outsourcing' tasks involving explicit reasoning or complex computations to other systems that are better equipped for these tasks. This approach would parallel our human propensity to utilize the best tool for the task at hand, leading to systems that can more effectively and efficiently handle a wide range of tasks.

This seems to be the vision that OpenAI hopes to move toward with GPT's new function calling capabilities.

With the new update, developers can describe their own functions to the company's latest models, and the model can respond with a JSON object naming the function to call and the arguments to pass. This JSON object, built from the user's input, acts as a 'call to action' for the function. The model does not run anything itself: the developer's application executes the call and can hand the result back to the model. This allows a smooth and effective integration with external tools and APIs. Through the application, GPT can now choose to call upon specific APIs to gather data, query databases to retrieve information, or interface with other digital tools to perform various tasks.
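The flow described above can be sketched roughly as follows. To keep the example runnable offline, the model is replaced by a stub (`fake_model`), and `get_weather` is a hypothetical tool. The shape of the function description and of the returned message is an assumption modeled on how function calling was described at launch, so check the current API documentation before relying on it.

```python
import json

# Function description in JSON Schema style. get_weather is hypothetical.
WEATHER_FUNCTION = {
    "name": "get_weather",
    "description": "Get the current weather for a city",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def get_weather(city):
    """Hypothetical local tool; a real one would query a weather service."""
    return {"city": city, "forecast": "sunny"}


def fake_model(user_message, functions):
    """Stand-in for the LLM call. Returns a message that requests a function
    call: the function name plus its arguments encoded as a JSON string."""
    return {
        "role": "assistant",
        "content": None,
        "function_call": {
            "name": "get_weather",
            "arguments": json.dumps({"city": "Boston"}),
        },
    }


# The application, not the model, decides which functions may be run.
TOOLS = {"get_weather": get_weather}


def handle(message):
    """Run the requested function, or return plain text if none was requested."""
    call = message.get("function_call")
    if call is None:
        return message["content"]
    args = json.loads(call["arguments"])
    return TOOLS[call["name"]](**args)


reply = fake_model("What's the weather in Boston?", [WEATHER_FUNCTION])
result = handle(reply)
```

In a real application, `result` would typically be sent back to the model in a follow-up message so it can phrase the final answer for the user.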

One of the most exciting potential applications of this new approach is integrating GPT with symbolic AI systems or powerful computational tools like the Wolfram Engine.

Imagine a user asking GPT-4 to solve a complex mathematical problem, a task for which GPT's abilities are inherently limited. With the new function calling ability, GPT-4 can emit a structured request that the application forwards to the Wolfram Engine via its API; the computed answer is then returned to the model, which delivers it to the user in human-friendly language. The user doesn't need to know how to write Wolfram Language or even know that Wolfram was involved in the process at all. GPT-4 acts as a smart, conversational interface, hiding the complexity of interacting with other systems and APIs.
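A minimal sketch of this hand-off is shown below. It does not use the Wolfram Engine or its API: a small, deterministic arithmetic evaluator built on Python's `ast` module stands in for the computation engine, and the `expression` argument name is an assumption made for the example.

```python
import ast
import json
import operator

# Operators the stand-in engine is willing to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def evaluate(expression):
    """Evaluate an arithmetic expression by walking its syntax tree (no eval)."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.operand))
        raise ValueError("unsupported expression")
    return walk(ast.parse(expression, mode="eval").body)


def answer(arguments_json):
    """Take the JSON arguments a model might emit and return a friendly reply."""
    args = json.loads(arguments_json)
    return f"The result is {evaluate(args['expression'])}."


product = evaluate("12345 * 6789")
message = answer('{"expression": "2 ** 10 + 1"}')
```

The division of labor is the point: the language model only has to turn the user's question into an expression, while the engine computes the value by rule rather than by pattern matching.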

This is just a single example. In principle, the function calling capability could be used to integrate GPT-4 with any API, allowing it to interface with databases, other AI models, web services, and more. This capability dramatically expands the possibilities for what GPT-4 can do, turning it from a standalone AI model into a versatile tool capable of orchestrating other tools to perform complex tasks.

Beyond opening up new application vistas, function calling capabilities could potentially help in tackling critical issues surrounding AI ethics, safety, and alignment. For instance, GPT could interact with an external system designed to ensure content aligns with ethical guidelines, helping to combat bias. Or, before providing a potentially sensitive answer, GPT could validate its response against a trusted external database - such as a medical database for health-related queries.

This approach could have profound implications for the future of AI. It suggests a model of AI development in which, instead of trying to build a single, monolithic AI that can do everything, we create a network of specialized tools and AIs, each optimized for a specific task. In this model, LLMs like GPT-4 serve as the glue that binds these tools together, turning them into a cohesive whole that's more than the sum of its parts.

We can think of this as an "ecosystem" approach to AI development. Just as in a natural ecosystem, each organism plays a specific role, and the overall health of the system depends on the balance and interaction between these roles. Similarly, in an AI ecosystem, each tool or model would perform the tasks it's best suited for, and LLMs would serve as the interface between these tools, allowing them to work together seamlessly.

AGI is often envisioned as a single AI system that can perform any intellectual task that a human being can. But another way to look at it is as a system that can leverage a wide range of tools and resources to accomplish a broad array of tasks. Given this perspective, if you were OpenAI, wouldn't you focus on building out your ecosystem, rather than sprinting towards the release of GPT-5?