The non-profit Partnership on AI (PAI) recently announced the public release of its "Guidance for Safe Foundation Model Deployment" at its AI policy forum in London. This guidance provides recommendations for companies and organizations developing and deploying foundation models, which are AI systems trained on large datasets that can power a wide range of downstream applications. The public comment period for the guidance runs through January 15, 2024.

As AI capabilities rapidly advance, there is growing recognition that foundation models such as large language models can bring both benefit and harm. While they could enable creative expression and scientific discovery, they also pose risks such as bias, misinformation, and malicious use.

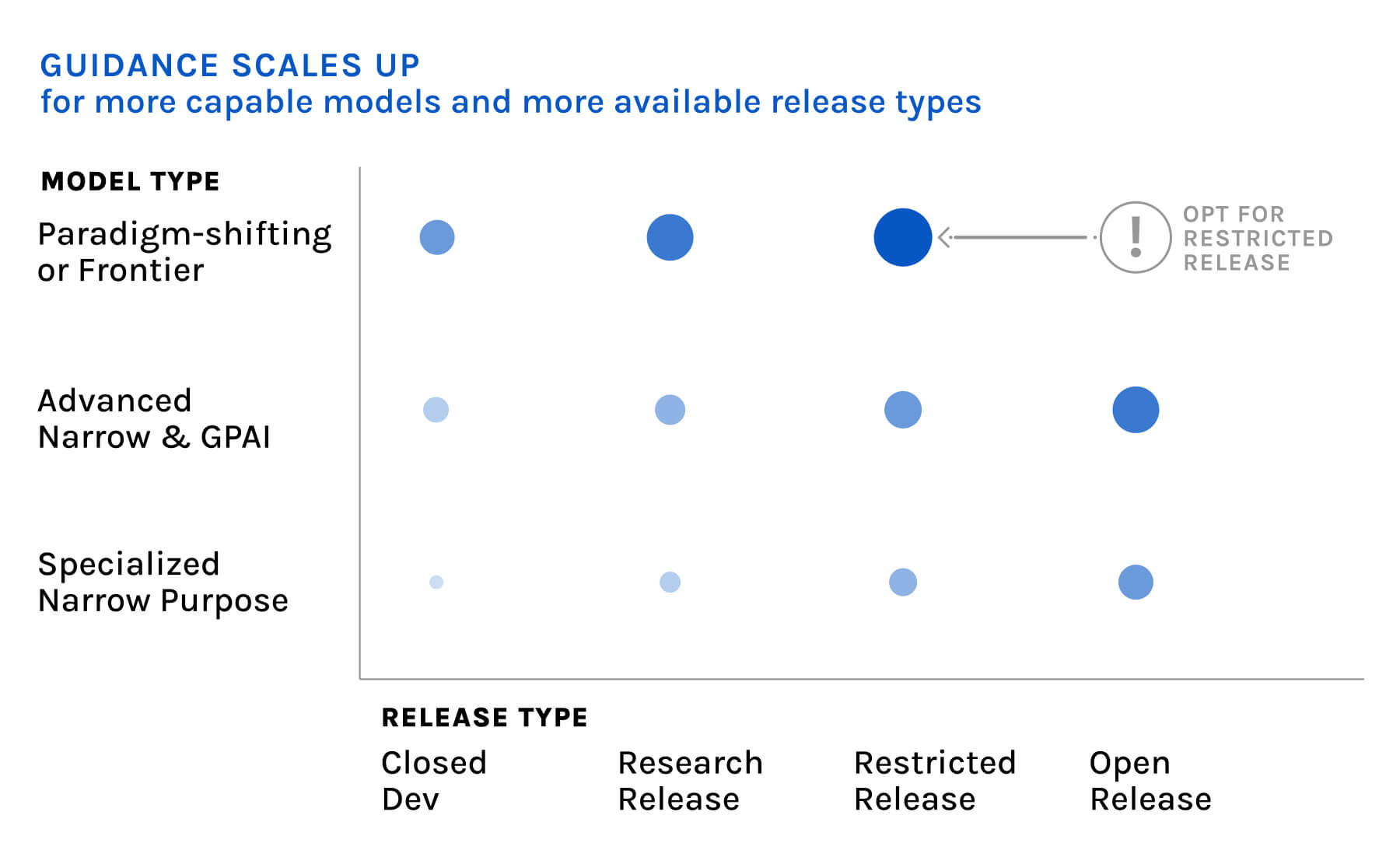

PAI's guidance aims to translate shared principles into practical actions companies can take to foster responsible model development. It offers a framework for collective research and standards around model safety as capabilities evolve. To avoid oversimplification, the guidance tailors oversight practices to model capability and availability: it offers 22 possible guidelines, and which of them apply depends on the model's capability and type of release. For example, more capable and openly available models are subject to more extensive guidelines.

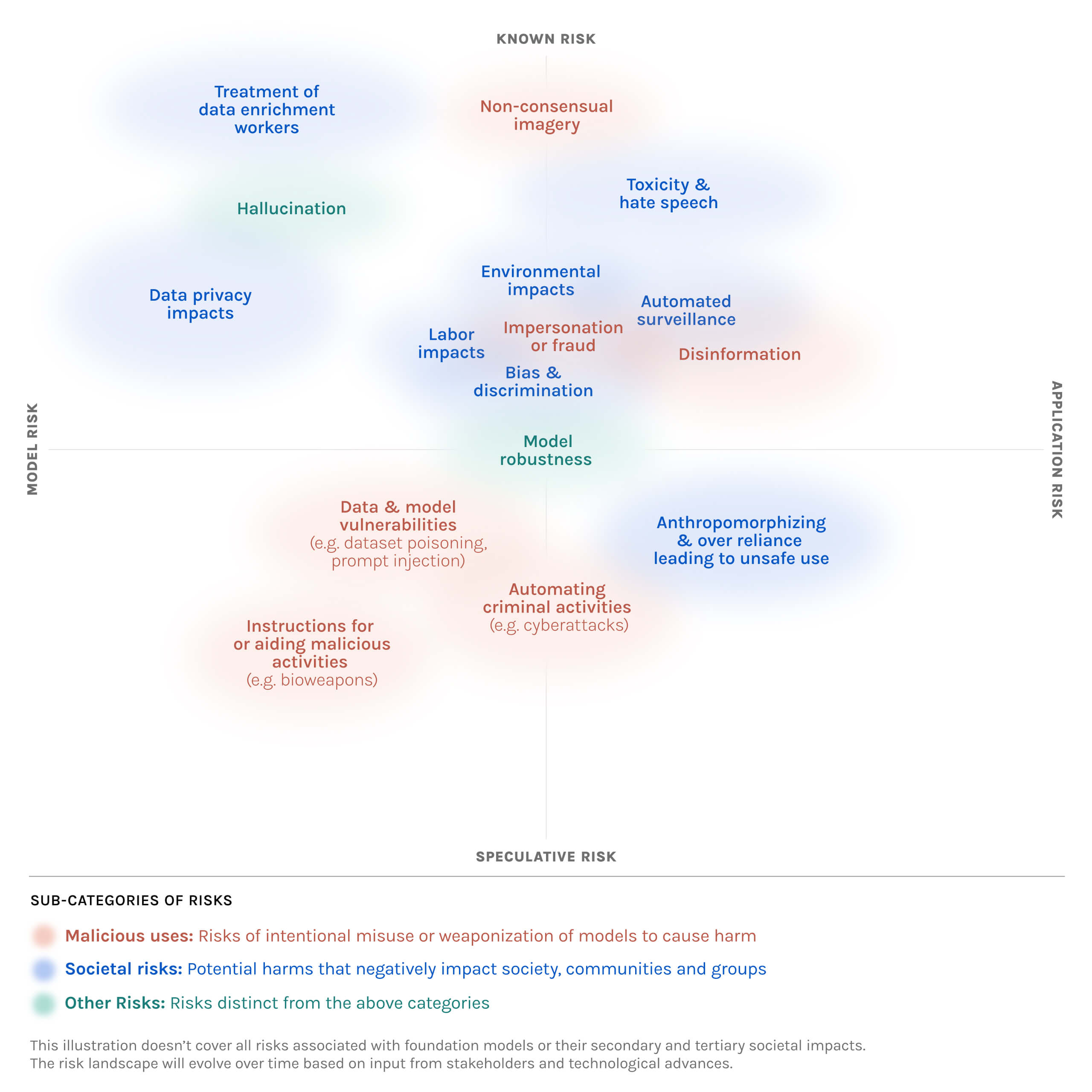

The guidance covers safety risks from the models themselves and risks arising downstream when others build applications using them. It addresses both well-understood risks and speculative ones that are uncertain and harder to anticipate. Areas of focus include mitigating societal harms, preventing malicious uses, and addressing human-AI interaction risks.

Crucially, PAI designed the guidelines to be flexible and adaptable as technologies and policies continue to change rapidly. Model providers can generate custom guidance for their specific model and release type through PAI's website. The framework also includes cautious rollout recommendations for more advanced "frontier" models.

PAI developed the guidance through a participatory process involving over 40 global institutions. The collaborative group included civil society organizations, academic institutions, and companies like IBM, Google, Microsoft, and Meta. PAI notes that the guidelines reflect diverse insights but should not be seen as representing the views of any individual participant.

The guidance comes at a pivotal moment, as AI governance debates intensify globally. Initiatives like the White House Blueprint for an AI Bill of Rights show increased policymaker focus on AI safety. However, businesses express uncertainty about the practical steps required for responsible model deployment. PAI's guidance aims to complement emerging regulatory approaches by outlining tangible actions companies can take voluntarily.

Over the next year, PAI plans to further develop the guidance based on public feedback. Its roadmap includes exploring how responsibility can be shared across the AI value chain and providing support for putting the guidelines into practice. With carefully crafted safeguards, PAI hopes to ensure foundation models deliver the greatest benefit to society as their influence grows.