San Francisco-based Pinecone, a leading vector database provider, today announced the launch of Pinecone Serverless, a new cloud-native database architected specifically for developers building modern AI applications powered by large language models (LLMs).

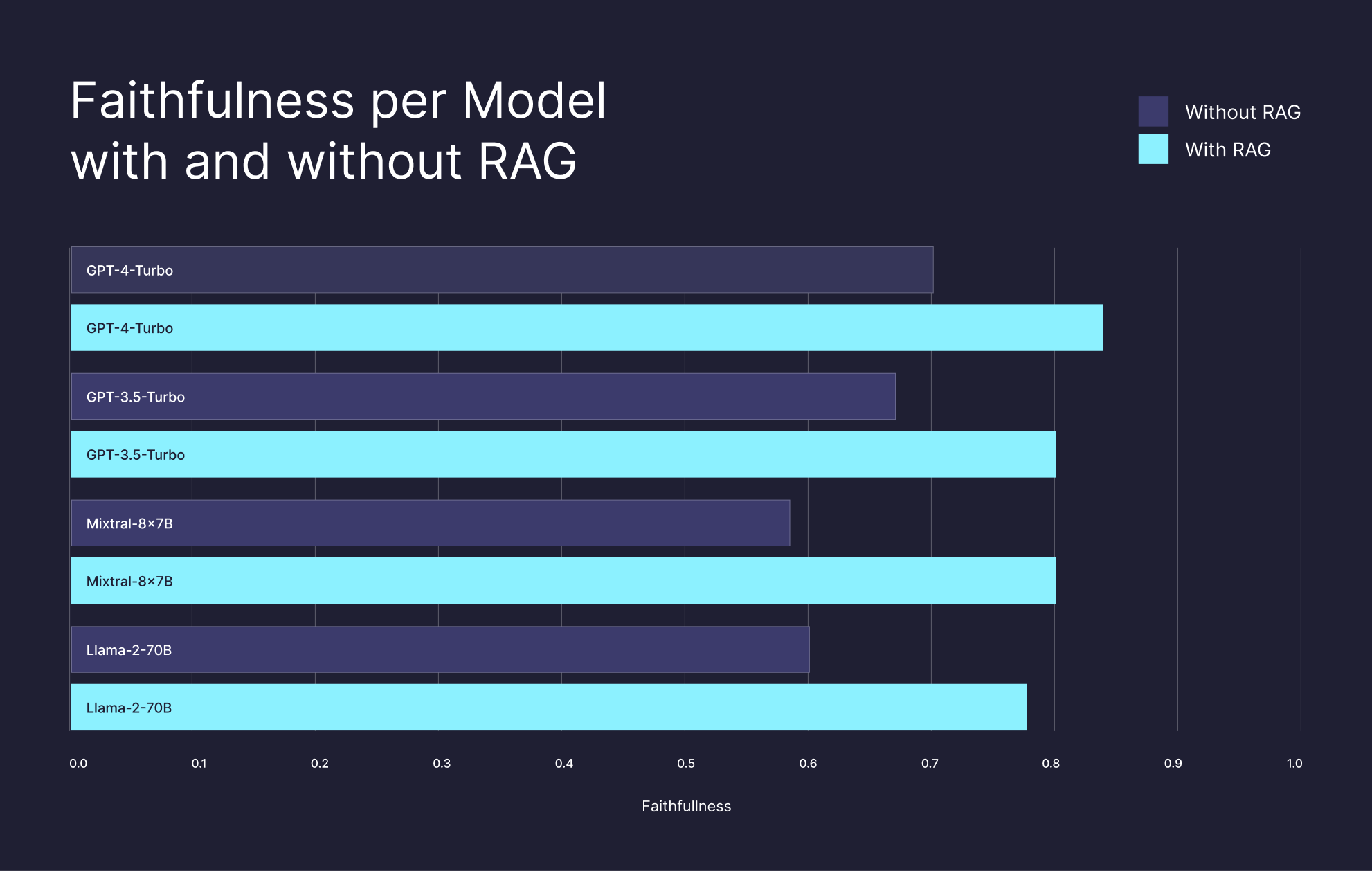

With the rapid emergence of LLMs like GPT-4, demand has skyrocketed for vector databases that provide the semantic search and retrieval capabilities needed to reduce hallucinations and improve answer quality. Pinecone’s original vector database has become wildly popular, used by over 5,000 customers including Notion, Gong and CS Disco. However, the company said it saw the bar for building commercially viable AI applications continuing to rise.

“After working with thousands of engineering teams, we found the key ingredient that determines the success of AI applications is knowledge - giving the models differentiated data that they can search to find the right context,” said Edo Liberty, CEO of Pinecone. “We completely reinvented our vector database from the ground up to make it incredibly easy and cost-effective for developers to imbue their AI with unlimited knowledge.”

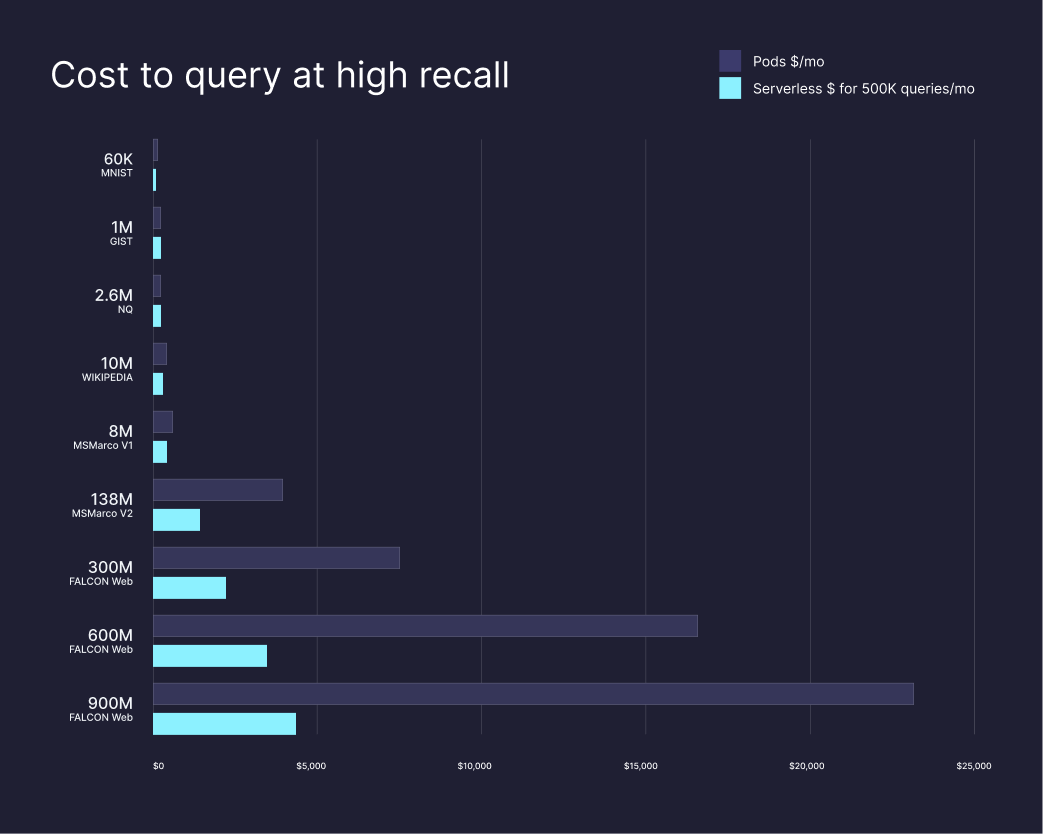

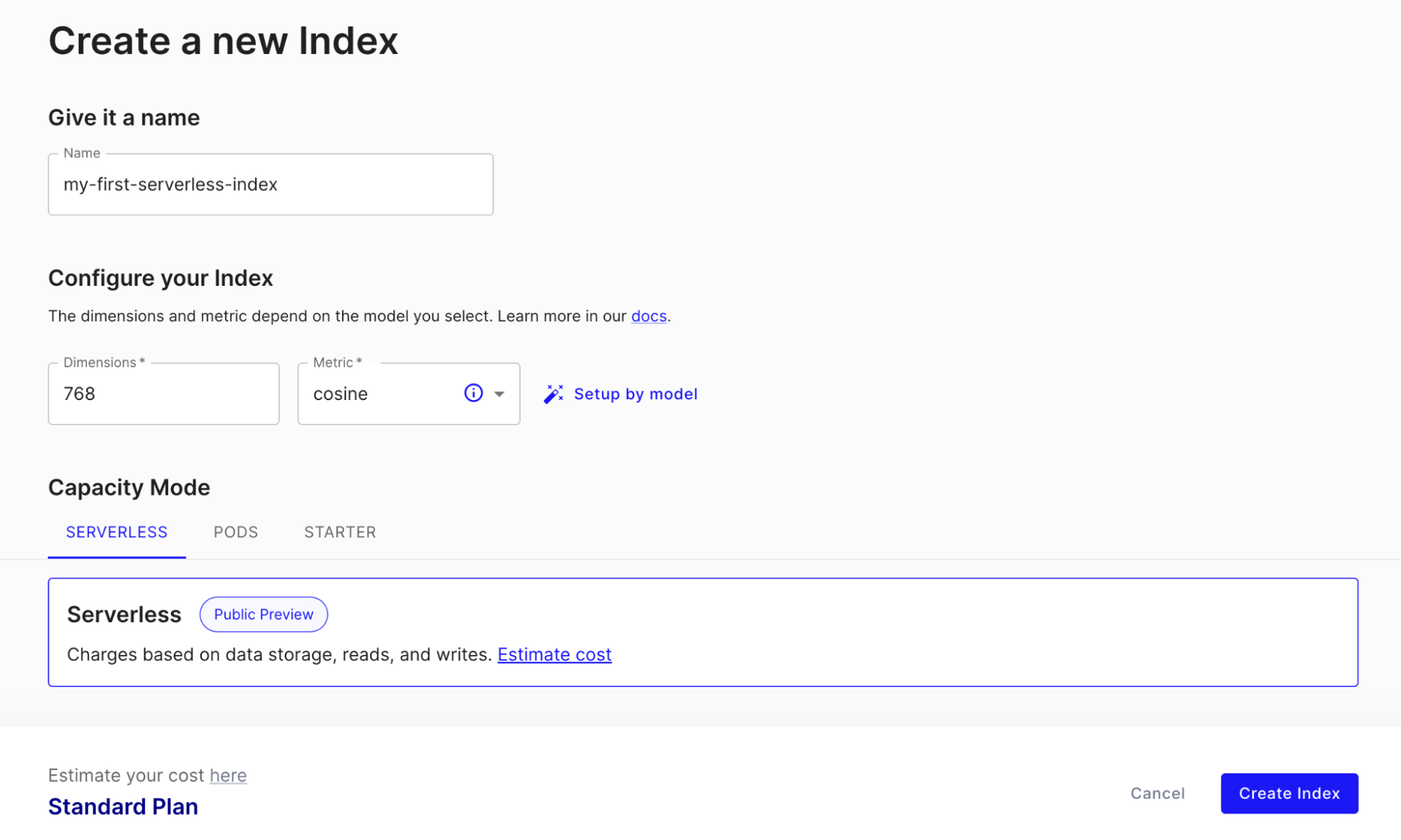

The result of this effort is Pinecone Serverless, a new serverless architecture that separates storage, reads and writes, which the company says reduces costs by up to 50x. The multi-tenant system runs on as many nodes as necessary to handle massive throughput while enabling usage-based billing.

Pinecone’s advances in indexing and retrieval algorithms also unlock vector search over practically limitless records stored across blob storage. This means developers can focus on building applications without worrying about infrastructure constraints.
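For readers unfamiliar with the term, vector search means ranking stored records by how close their embedding vectors are to a query vector. The following is a toy sketch of that idea in plain Python, using brute-force cosine similarity. It is not Pinecone's API or its indexing algorithm, which the announcement does not describe; the function names, record IDs and three-dimensional vectors are all made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def top_k(query, records, k=2):
    """Brute-force search: score every record, keep the k most similar."""
    scored = [(rid, cosine_similarity(query, vec)) for rid, vec in records.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical records: ID -> embedding (real embeddings have hundreds of dimensions).
records = {
    "doc-a": [1.0, 0.0, 0.0],
    "doc-b": [0.9, 0.1, 0.0],
    "doc-c": [0.0, 1.0, 0.0],
}

results = top_k([1.0, 0.0, 0.0], records, k=2)
for record_id, score in results:
    print(record_id, round(score, 4))
```

Scoring every record like this costs time proportional to the size of the collection, which is why production vector databases rely on specialized indexes rather than a linear scan.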

Akshay Kothari, Co-Founder of Notion, sees immense value in this ease of use combined with scalability. “To make our newest Notion AI products available to tens of millions of users worldwide we needed to support RAG over billions of documents while meeting strict performance, security, cost, and operational requirements. This simply wouldn’t be possible without Pinecone.”

Last year Pinecone raised a $100 million Series B at a $750 million valuation. Pinecone Serverless is now available in public preview on AWS, with support for GCP and Azure coming soon.

While the cost and infrastructure advantages are significant, Liberty ultimately sees Pinecone Serverless as a vehicle for unlocking a new wave of AI applications. By letting developers easily connect vast knowledge stores to large language models, Pinecone aims to remove the barriers to creating useful, safe and intelligent AI.

“We’re here to help engineers build better AI products,” said Liberty. “With Pinecone Serverless, we’re giving them the most critical ingredient to make that vision a reality.”

Early adopters span industries from legal tech to sales analytics. But if Liberty and Pinecone realize their full ambition, these pioneers are just the first wave of what could grow into an entirely new paradigm for AI development.