Reka, an AI startup based in Sunnyvale, California, has unveiled Core, its most advanced multimodal language model to date. Core joins the company's existing Flash (21B parameters) and Edge (7B parameters) models. Reka describes Core as a frontier-class model that delivers industry-leading performance across a wide range of tasks involving text, images, video, and audio.

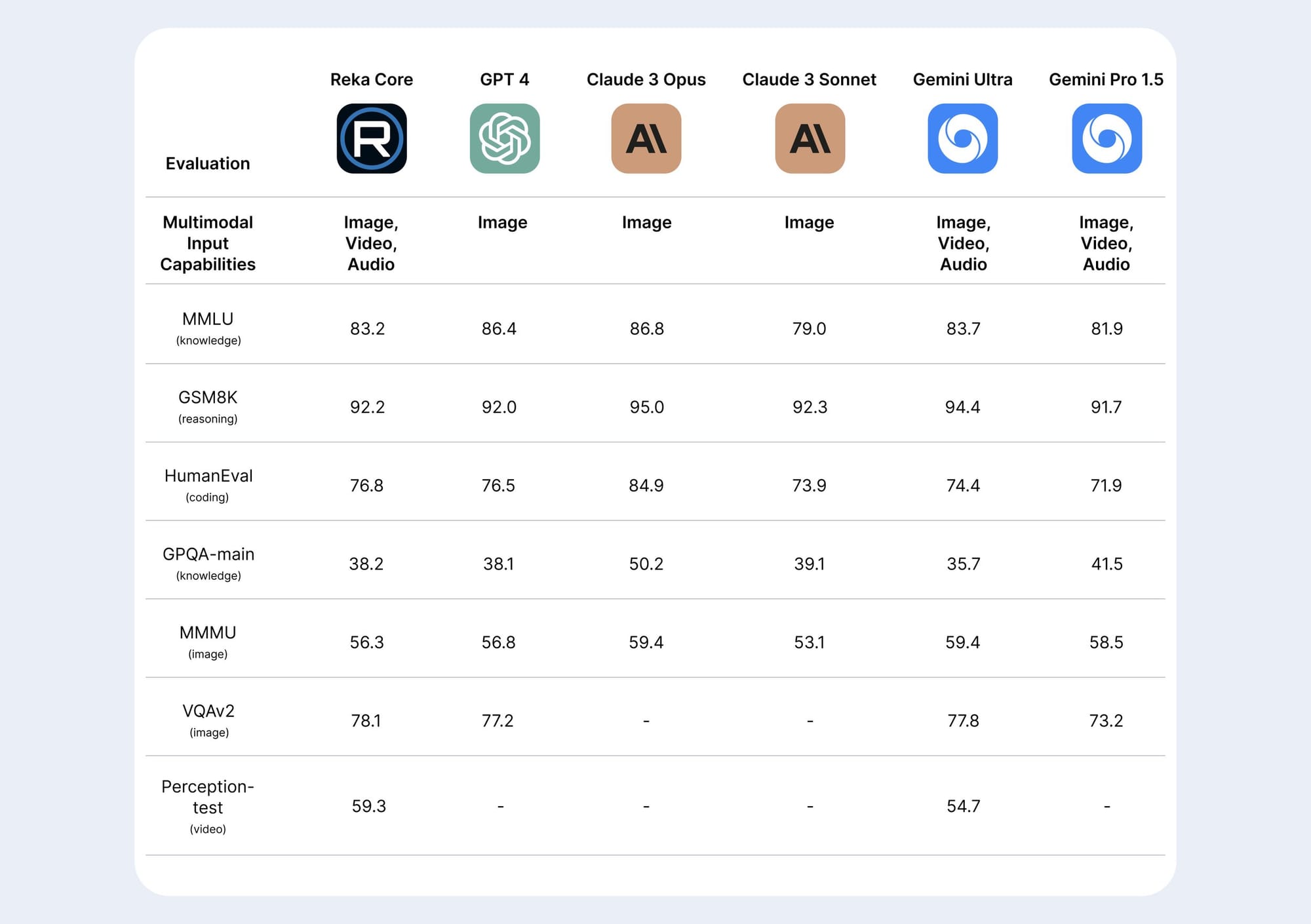

According to Reka's technical report, Core was trained entirely from scratch, primarily on NVIDIA H100s, using PyTorch. Notably, Reka says the model has not finished training and is still improving. Even so, results on popular benchmarks so far show that the model is on par with, or outperforms, leading models from OpenAI, Anthropic, and Google. Reka also highlights the model's reasoning skills, including complex language and math abilities, which suit it to intricate analysis and problem-solving.

One of Core's standout features is its multimodal understanding. Unlike many large language models that focus primarily on text, Core is designed to understand images, videos, and audio in context. Reka says this makes it one of only two commercially available solutions that offer comprehensive multimodal support.

Core also has a 128K context window, allowing it to ingest and accurately recall significantly more information than many of its competitors. Combined with its reasoning abilities across language and math, this makes Core well suited to complex analytical tasks.
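To give a feel for what a 128K window means in practice, here is a minimal sketch that estimates whether a document would fit. It rests on two assumptions that do not come from Reka: that "128K" means 128,000 tokens, and that English text averages roughly 4 characters per token. The function names are hypothetical, and a real check would use the model's own tokenizer.

```python
# Assumption: "128K" is read as 128,000 tokens; the exact limit may differ.
CONTEXT_WINDOW_TOKENS = 128_000
# Assumption: about 4 characters per token for English text. This is a rough
# rule of thumb, not a property of Reka's tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate for `text`, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(text: str, reserved_for_output: int = 1_000) -> bool:
    """Check whether the estimated prompt size leaves room for a reply."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    report = "word " * 50_000  # 250,000 characters of filler text
    print(estimate_tokens(report), fits_in_context(report))
```

Under these assumptions, a prompt of a few hundred thousand characters would still fit, which is the scale at which long reports or transcripts become usable in a single request.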

> Meet Reka Core, our best and most capable multimodal language model yet. 🔮
>
> It’s been a busy few months training this model and we are glad to finally ship it! 💪
>
> Core has a lot of capabilities, and one of them is understanding video --- let’s see what Core thinks of the 3 body… pic.twitter.com/5ESvog35e9
>
> Reka (@RekaAILabs), April 15, 2024

For developers, Reka touts Core's code generation capabilities as a foundation for agentic workflows. The model is also multilingual, with fluency in English and several Asian and European languages, thanks to pretraining on text in 32 languages.

Like Reka's other models, Core can be deployed via API, on-premises, or on-device to meet the diverse needs of customers and partners. This adaptability, combined with Core's impressive capabilities, unlocks a wide range of potential use cases across industries such as e-commerce, social media, digital content, healthcare, and robotics.

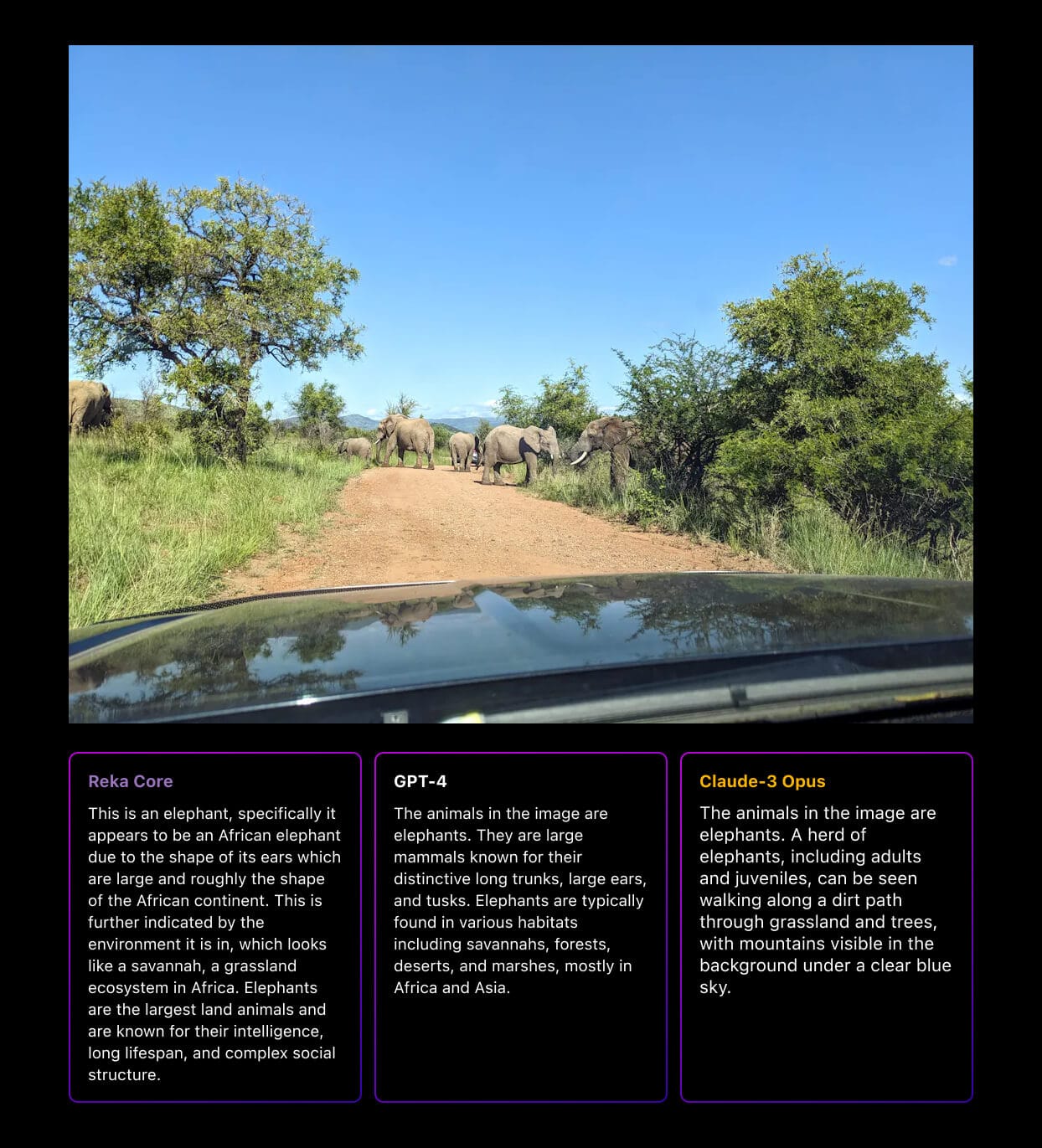

Reka has published a page of examples comparing Core's output with that of GPT-4 and Claude 3 Opus. You can also use the company's chatbot to explore the capabilities of all three Reka models.