Snowflake has released Arctic, a state-of-the-art large language model designed to be the most open, enterprise-grade LLM on the market. Arctic has 480 billion total parameters, of which 17 billion are active during generation, and it excels at enterprise tasks such as SQL generation, coding, and instruction following.

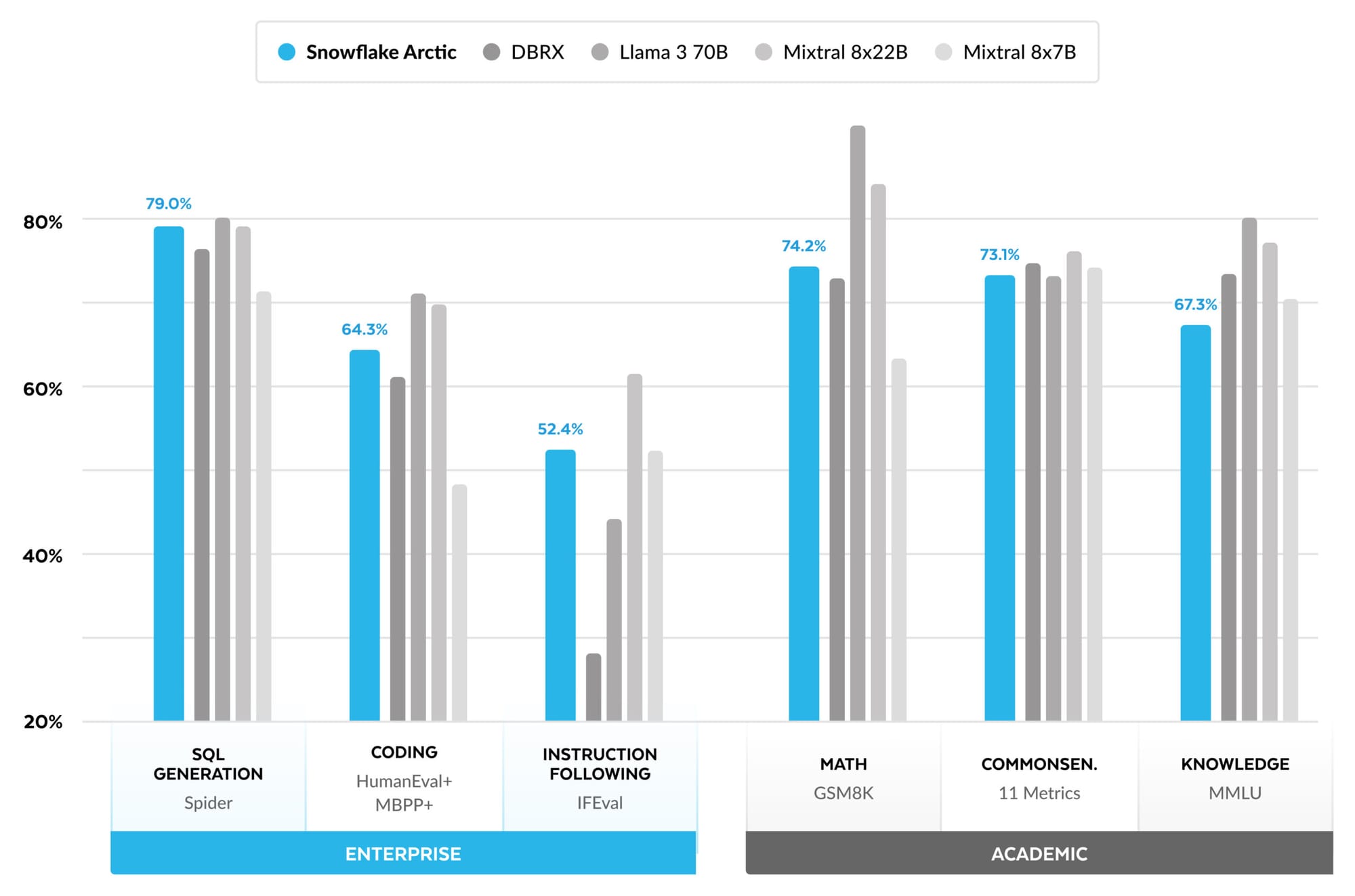

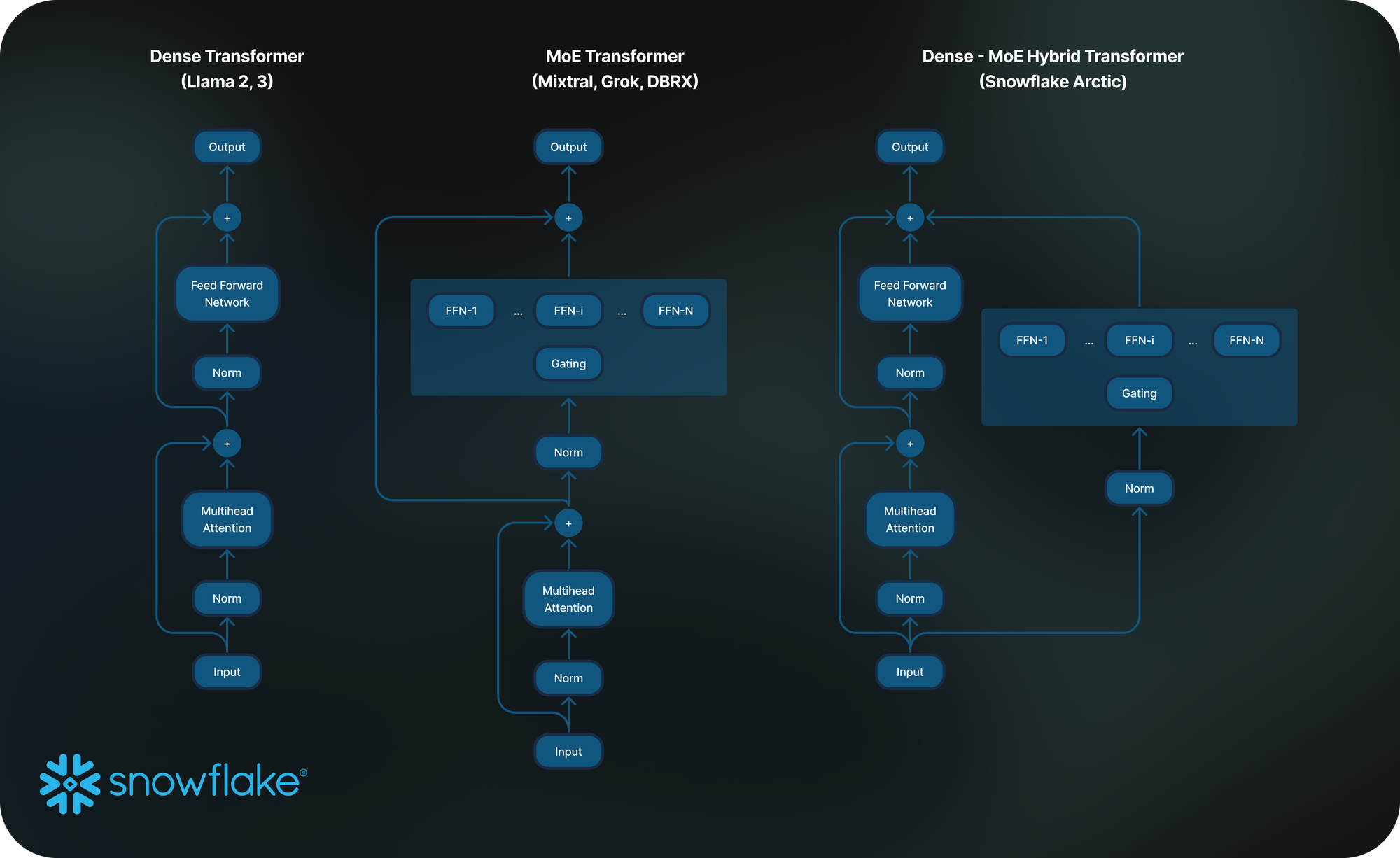

Arctic's Mixture-of-Experts (MoE) architecture combines a 10 billion parameter dense transformer with a 128x3.66 billion parameter MoE MLP. A top-2 gating function routes each token to two of the 128 experts, so only about 17 billion of the roughly 480 billion parameters are active during generation. Trained on 3.5 trillion tokens, Arctic outperforms leading open models in coding, SQL generation, and general language understanding while remaining resource-efficient.
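The parameter counts follow directly from the figures quoted above. The sketch below redoes that arithmetic and shows one common way top-2 gating can be written. The function names and the softmax-over-selected-experts weighting are assumptions for illustration, not Arctic's actual router.

```python
import math

# Figures quoted in the article (billions of parameters).
DENSE_PARAMS_B = 10.0    # dense transformer
NUM_EXPERTS = 128        # experts in the MoE MLP
EXPERT_PARAMS_B = 3.66   # parameters per expert
TOP_K = 2                # experts selected per token (top-2 gating)


def total_params_b():
    """Dense parameters plus every expert."""
    return DENSE_PARAMS_B + NUM_EXPERTS * EXPERT_PARAMS_B


def active_params_b():
    """Dense parameters plus only the experts selected for a token."""
    return DENSE_PARAMS_B + TOP_K * EXPERT_PARAMS_B


def top_k_gate(logits, k=TOP_K):
    """Pick the k largest router logits and return (expert_index, weight) pairs.

    Weights are a softmax over the selected logits only. This normalization
    is an illustrative assumption; real routers differ in detail.
    """
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in top)
    exps = [math.exp(logits[i] - peak) for i in top]  # subtract peak for stability
    norm = sum(exps)
    return [(i, e / norm) for i, e in zip(top, exps)]


print(f"total:  {total_params_b():.2f}B")   # 478.48B, rounded to 480B in the article
print(f"active: {active_params_b():.2f}B")  # 17.32B, rounded to 17B in the article
print(top_k_gate([0.1, 2.0, -1.0, 1.0]))    # experts 1 and 3 are selected
```

Only the two selected experts run for a given token, which is why the active parameter count stays near 17 billion even though the full model holds about 480 billion.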

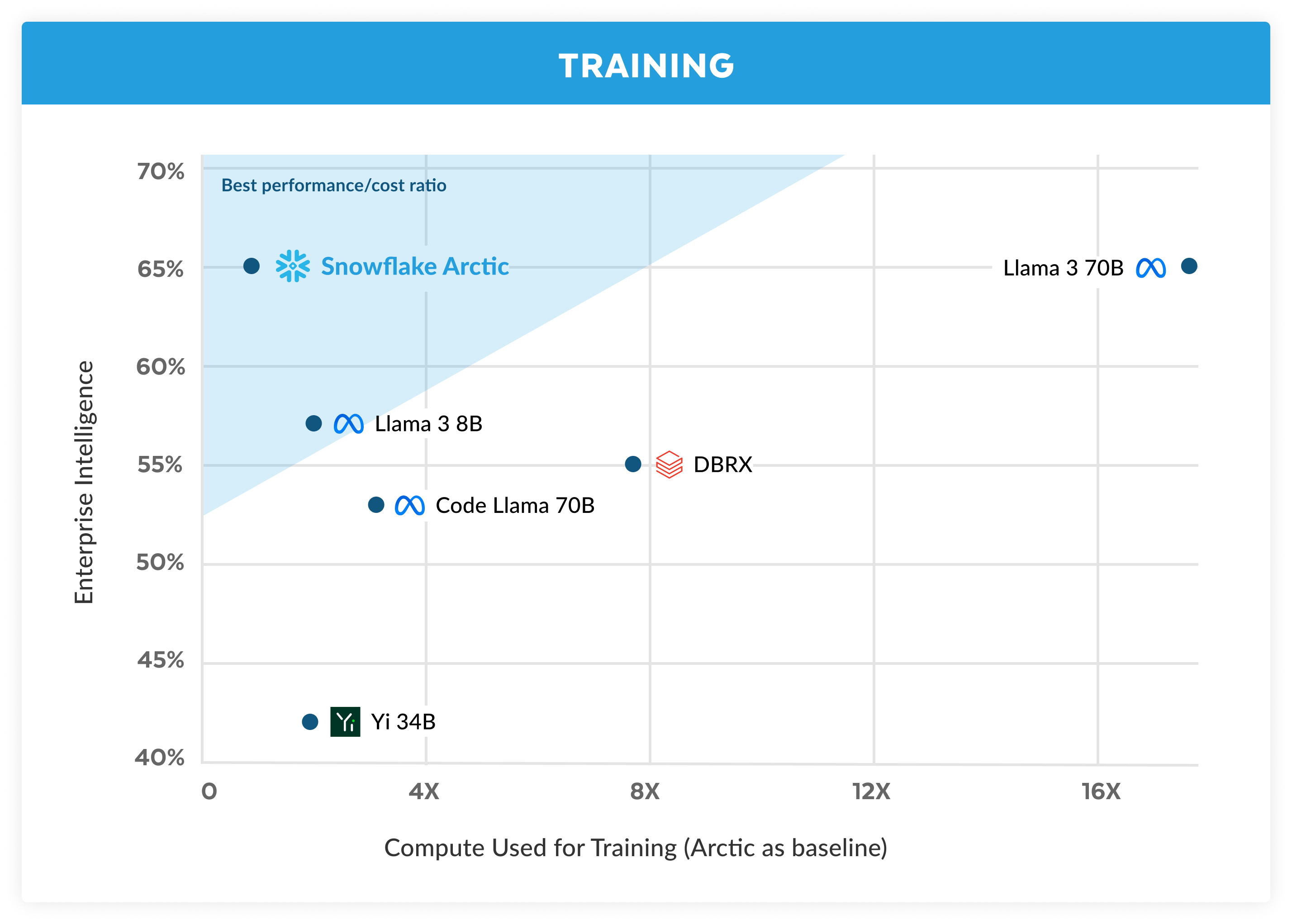

The Snowflake AI Research team, composed of industry-leading researchers and system engineers, built Arctic in less than three months at roughly one-eighth of the training cost of similar models. By leveraging Amazon EC2 P5 instances and a carefully designed data composition focused on enterprise needs, Arctic achieves industry-leading quality with high token efficiency. For example, while similar models might require tens of millions of dollars in training costs, Arctic was trained on a budget of under $2 million (less than 3K GPU weeks).
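To put the budget figures in more familiar units, the short sketch below converts GPU weeks to GPU hours and derives the hourly rate implied by the two quoted ceilings. Both inputs are upper bounds from the article, so the result is only a rough order of magnitude, not a reported price; the function names are hypothetical.

```python
HOURS_PER_WEEK = 7 * 24  # 168


def gpu_hours(gpu_weeks):
    """Convert GPU weeks to GPU hours."""
    return gpu_weeks * HOURS_PER_WEEK


def implied_rate(budget_usd, gpu_weeks):
    """Dollars per GPU hour implied by a budget and a GPU-week count."""
    return budget_usd / gpu_hours(gpu_weeks)


# Ceilings quoted in the article: under $2 million, less than 3K GPU weeks.
print(gpu_hours(3000))                             # 504000 GPU hours
print(f"{implied_rate(2_000_000, 3000):.2f}")      # 3.97 dollars per GPU hour
```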

Snowflake has fully embraced open-source principles with Arctic, releasing it under an Apache 2.0 license. This means unrestricted access to the model's weights and code, a move that fosters collaboration and innovation. Additionally, Snowflake has open-sourced its data recipes and research insights, providing a comprehensive 'cookbook' to guide others in building world-class MoE models efficiently and economically.

> .@SnowflakeDB is thrilled to announce #SnowflakeArctic: A state-of-the-art large language model uniquely designed to be the most open, enterprise-grade LLM on the market.
>
> This is a big step forward for open source LLMs. And it’s a big moment for Snowflake in our #AI journey as… pic.twitter.com/xtXZdCyFS3
>
> — sridhar (@RamaswmySridhar) April 24, 2024

Arctic is available for immediate use through serverless inference in Snowflake Cortex and will be accessible on various platforms, including Amazon Web Services, Hugging Face, Lamini, Microsoft Azure, NVIDIA API catalog, Perplexity, and Together AI. You can try a demo of Arctic in this Hugging Face Space.