At its annual Snowday 2023 conference, Snowflake announced the launch of Snowflake Cortex, a new, fully managed service that provides access to leading large language models (LLMs), AI models, and vector search functionality. With Snowflake Cortex, the data cloud company aims to make it simpler for organizations of all sizes to derive value from generative AI in a secure, governed manner.

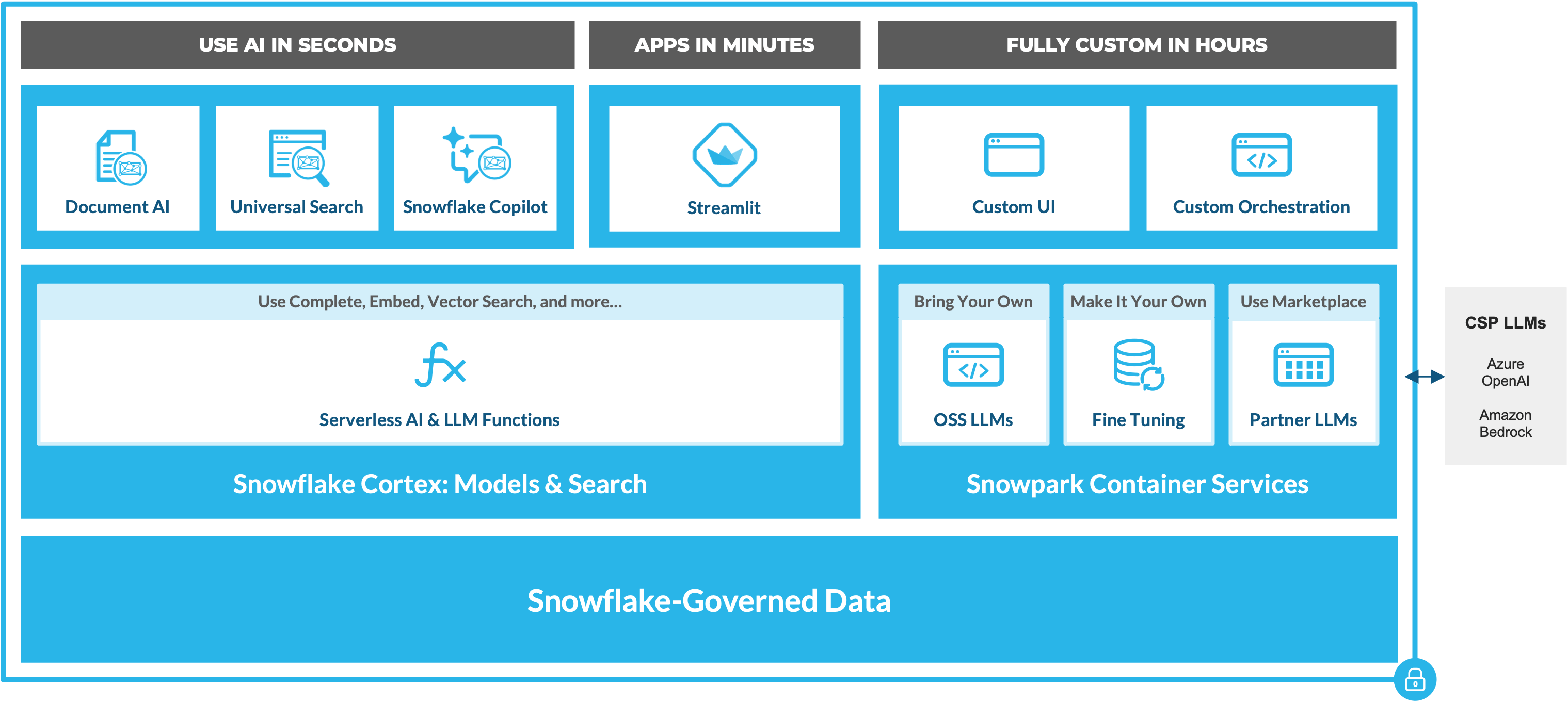

Snowflake Cortex allows users to tap into LLMs and AI through serverless functions callable with SQL or Python. This gives data teams and citizen developers access to advanced models without needing deep AI expertise or managing complex infrastructure.

“Snowflake is pioneering the integration of AI innovation and enterprise data,” said Sridhar Ramaswamy, SVP of AI at Snowflake. “With Snowflake Cortex, customers can instantly and securely harness the power of large language models to reimagine how all users derive value from AI.”

As a fully managed service, Snowflake Cortex provides several building blocks for accelerating analytics and building contextualized LLM apps:

- Specialized functions that use cost-effective ML models for sentiment analysis, summarization, and more, along with existing Snowflake ML functions such as anomaly detection.

- General-purpose functions that use LLMs such as the open-source Llama 2 for conversational queries, with vector search functionality coming soon.

- The Streamlit framework, for quickly turning models and data into interactive apps. More than 10,000 Streamlit apps have been built within Snowflake.

Snowflake has also built three LLM-powered experiences on top of Cortex to enhance productivity:

- Snowflake Copilot, an AI assistant for natural language data queries and SQL writing.

- Universal Search to quickly find relevant data, views, apps and marketplace solutions.

- Document AI, which uses LLMs to extract information from documents such as invoices.

For advanced users, Snowflake Cortex also works with Snowpark Container Services, which lets them run commercial LLMs and vector databases directly within their Snowflake accounts. Users can also fine-tune open-source models as needed.

The launch of Snowflake Cortex aligns with the company’s vision of pioneering the next wave of AI by tackling one of AI’s biggest challenges: seamless access to high-quality data.

With data silos eliminated through Snowflake’s single, integrated platform, Cortex allows LLMs to efficiently query data in context. Users can rapidly build AI apps that drive insights across their business.

Snowflake Cortex also provides access to AI in a secure, transparent environment. As models interact with data, Snowflake’s patented Time Travel capability lets teams query earlier versions of that data and analyze how it has changed over time.
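
Time Travel works by letting a query read a table as it existed at an earlier moment, within the table's data retention period (one day by default; Enterprise Edition allows up to 90 days). The helpers below are a hypothetical sketch, not a Snowflake API: they only build SQL text using Snowflake's `AT(OFFSET => ...)` clause and the `MINUS` set operator, and the `reviews` table is an illustrative assumption. Running the resulting SQL still requires a Snowflake connection.

```python
import re

# Plain (unquoted) identifiers such as reviews or my_db.my_schema.reviews.
_IDENT = re.compile(r"[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)*")


def _ident(name: str) -> str:
    """Allow only plain identifiers so the table name cannot inject SQL."""
    if not _IDENT.fullmatch(name):
        raise ValueError(f"unsafe identifier: {name!r}")
    return name


def as_of_query(table: str, seconds_ago: int) -> str:
    """SQL reading `table` as it existed `seconds_ago` seconds before the query runs."""
    if isinstance(seconds_ago, bool) or not isinstance(seconds_ago, int) or seconds_ago <= 0:
        raise ValueError("seconds_ago must be a positive integer")
    # AT(OFFSET => n) takes a negative number of seconds relative to the current time.
    return f"SELECT * FROM {_ident(table)} AT(OFFSET => -{seconds_ago})"


def changed_rows_query(table: str, seconds_ago: int) -> str:
    """SQL listing rows present now that were not present, unchanged, `seconds_ago` seconds ago."""
    return f"SELECT * FROM {_ident(table)} MINUS {as_of_query(table, seconds_ago)}"


if __name__ == "__main__":
    print(as_of_query("reviews", 3600))
    print(changed_rows_query("reviews", 3600))
```

Comparing the current table with its earlier state through `MINUS` surfaces rows that were inserted or modified in the window, which is one way to review how data changed after an AI-driven pipeline touched it.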

Additionally, Cortex functions execute within isolated virtual warehouses, preventing customer data leakage. Users maintain full control over their data, including the ability to limit model access as needed.

By tackling the challenges of data accessibility and governance, Snowflake Cortex represents a major step toward making AI more consumable for enterprises. The service reduces the need for specialized skills and infrastructure while limiting risk.

With Snowflake Cortex, organizations of all sizes can now explore how LLMs and AI models can enhance their analytics and application capabilities. As advanced technologies become more consumable, expect to see accelerated AI adoption across industries in the years ahead.