Stability AI has released an experimental version of Stable LM 3B, a new 3-billion-parameter large language model designed to run on portable devices such as phones and laptops. The model aims to deliver strong conversational capabilities while staying compact and efficient.

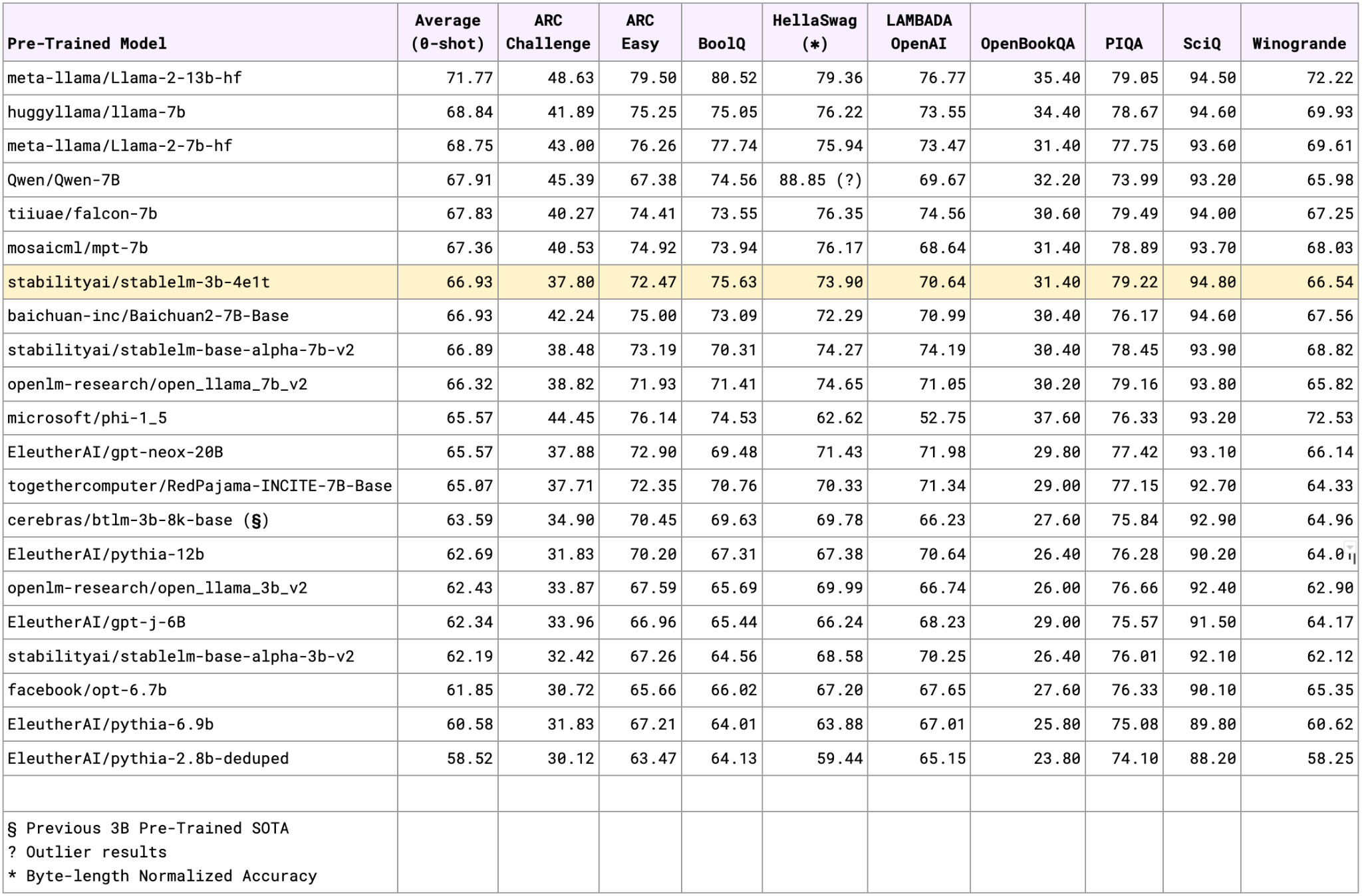

Leading models can have 70 billion parameters or more, but Stable LM 3B requires far fewer resources. This lets it run on smartphones or home PCs, opening up new possibilities for AI applications "on the edge." Despite its smaller size, Stability AI claims the model achieves state-of-the-art performance among public models with around 3 billion parameters, and even outperforms some popular 7-billion-parameter models on certain benchmarks.
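To make "far fewer resources" concrete, a rough rule of thumb is that holding a model's weights in memory takes about (number of parameters) × (bytes per parameter). The sketch below applies that rule to nominal 3B, 7B, and 70B parameter counts. The bytes-per-parameter values are common figures for each numeric precision and are assumptions, not numbers from Stability AI; the estimate also ignores activations, the attention cache, and runtime overhead, so real memory use will be higher.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumptions (not from the article): weights only (no activations or
# attention cache), nominal parameter counts, and 1 GB = 1e9 bytes.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # bf16 uses the same 2 bytes per parameter
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the approximate weight memory in gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in [("3B", 3e9), ("7B", 7e9), ("70B", 70e9)]:
        row = ", ".join(
            f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{name} -> {row}")
```

At 16-bit precision this gives roughly 6 GB for a 3B model versus roughly 140 GB for a 70B model. That gap is why a 3B model can plausibly fit on a laptop or a high-end phone while a 70B model cannot.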

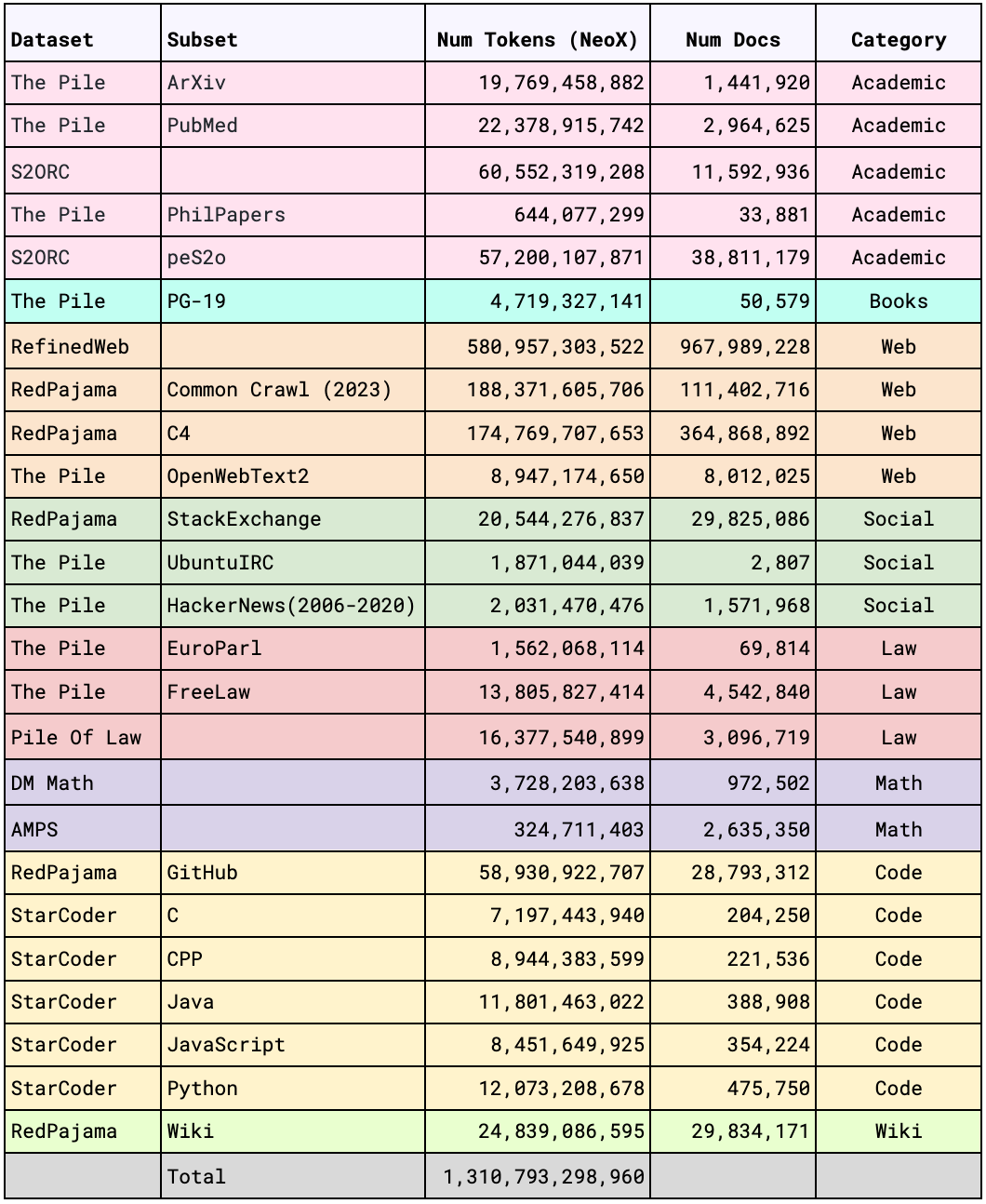

The model uses a decoder-only transformer architecture based on LLaMA, and Stability AI has released the model weights publicly. It was trained on a mix of open datasets, including an extract of Falcon RefinedWeb, RedPajama-Data, The Pile, and StarCoder. Training took approximately 30 days on a cluster of 256 NVIDIA A100 40GB GPUs and relied on the gpt-neox training library together with ZeRO-1 and FlashAttention-2. More details are available in the StableLM-3B-4E1T technical report.
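The training figures above imply a rough compute budget in GPU-hours: number of GPUs × days × 24 hours. The sketch below treats "approximately 30 days" as exactly 30 days, which is an assumption. The result is a back-of-the-envelope figure, not one reported by Stability AI.

```python
# Back-of-the-envelope compute budget implied by the figures in the article.
# Assumption: "approximately 30 days" is treated as exactly 30 days.


def gpu_hours(num_gpus: int, days: float) -> float:
    """Total accelerator time, in GPU-hours, for a job on num_gpus running for `days`."""
    return num_gpus * days * 24


if __name__ == "__main__":
    total = gpu_hours(num_gpus=256, days=30)
    print(f"~{total:,.0f} A100 GPU-hours")  # prints: ~184,320 A100 GPU-hours
```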

The release aims to make customizable, high-performance AI more accessible. Stable LM 3B can be fine-tuned for specific applications such as customer service chatbots or programming assistants, which lets companies adapt the model to their needs while keeping costs low.

However, Stability AI cautions that this is a base model: it requires application-specific fine-tuning, safety testing, and adjustments before deployment. The company plans to release an instruction-tuned version soon, which will undergo extensive evaluation before it is published.

Stable LM 3B marks a step toward practical AI applications on portable devices. Earlier this year, Google announced Gecko, the smallest version of PaLM 2, which is lightweight enough to run on mobile devices. By releasing open, auditable models like this one, Stability AI aims to build trust and enable responsible AI innovation.