Stability AI, in collaboration with its CarperAI lab, has unveiled two new large language models, FreeWilly1 and FreeWilly2, that demonstrate advanced language understanding while furthering the mission of open access AI research.

Both models now sit at the top of the Hugging Face Open LLM Leaderboard, which tracks, ranks, and evaluates LLMs and chatbots as they are released.

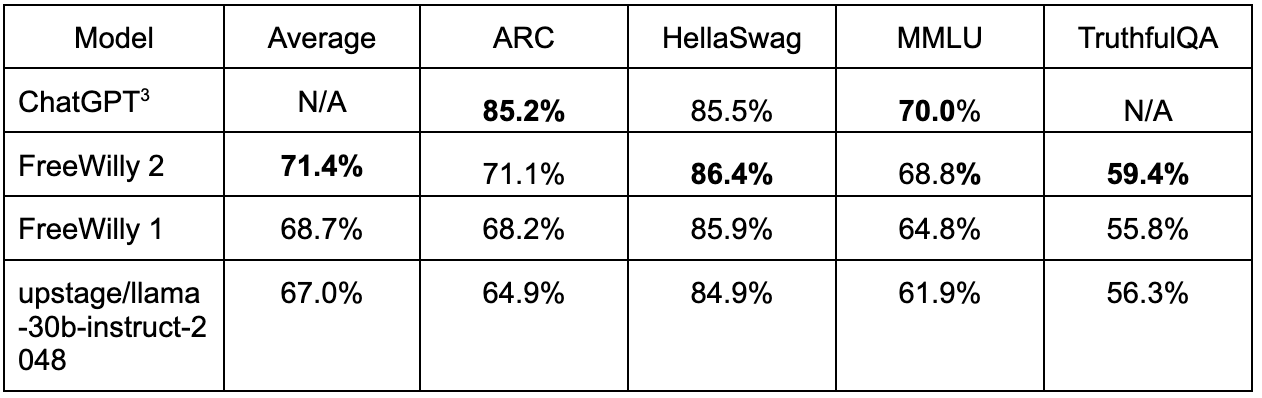

FreeWilly1 and its successor, FreeWilly2, are open access LLMs that show strong reasoning capability across various benchmarks. The models are built on the foundation models LLaMA 65B and LLaMA 2 70B respectively, and were fine-tuned on a synthetically generated dataset using Supervised Fine-Tuning (SFT) in standard Alpaca format. The performance of FreeWilly2 even compares favorably with GPT-3.5 on certain tasks.
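For readers unfamiliar with the Alpaca format mentioned above, the following is a minimal sketch of how a single instruction record could be turned into a prompt and completion pair for supervised fine-tuning. The prompt wording is the template published with the Stanford Alpaca project; whether the FreeWilly training data used exactly this wording is an assumption, and the function name `format_alpaca_example` and the sample record are hypothetical.

```python
# Prompt templates as published with the Stanford Alpaca project.
# Assumption: FreeWilly's SFT data followed this same layout.
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input "
    "that provides further context. Write a response that appropriately "
    "completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def format_alpaca_example(record: dict) -> dict:
    """Build a prompt/completion pair from one Alpaca-style record.

    The record is expected to carry "instruction" and "output" keys,
    plus an optional "input" key with extra context.
    """
    extra_context = (record.get("input") or "").strip()
    if extra_context:
        prompt = PROMPT_WITH_INPUT.format(
            instruction=record["instruction"], input=extra_context
        )
    else:
        prompt = PROMPT_NO_INPUT.format(instruction=record["instruction"])
    return {"prompt": prompt, "completion": record["output"]}


# Hypothetical record, for illustration only.
sample = {
    "instruction": "Explain step by step why the sky appears blue.",
    "input": "",
    "output": "Sunlight is scattered by air molecules...",
}
pair = format_alpaca_example(sample)
print(pair["prompt"] + pair["completion"])
```

During fine-tuning, the model is typically trained to produce the completion given the prompt, so the text after `### Response:` is where the synthetic, explanation-style answers would go.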

Researchers attributed this high performance to the rigorous synthetic data training approach. The training for FreeWilly models drew inspiration from Microsoft's methodology outlined in its paper "Orca: Progressive Learning from Complex Explanation Traces of GPT-4." However, Stability AI adapted this approach and used different data sources.

The training dataset consisted of 600,000 data points of high-quality instructions generated by language models, drawn from datasets created by Enrico Shippole. Although the dataset is a tenth of the size of the one used in the original Orca paper, the models have demonstrated exceptional performance, validating the approach to synthetically generated datasets.

To evaluate the models' performance, Stability AI utilized EleutherAI's lm-eval-harness, with the addition of AGIEval. The results underscored that both FreeWilly models excel in intricate reasoning, understanding linguistic subtleties, and answering complex questions in specialized domains such as law and mathematical problem-solving.

Stability AI researchers independently verified the performance results of the FreeWilly models. Hugging Face later reproduced the results on July 21, 2023, and published them on its leaderboard.

Stability AI stressed that it focused on responsible release practices around FreeWilly. The models underwent internal red team testing for potential harms, and the company is also actively encouraging external feedback to further strengthen safety measures.

FreeWilly1 and FreeWilly2 represent a significant milestone in the domain of open access LLMs. They stand to propel research, enhance natural language understanding, and enable complex tasks. Stability AI is optimistic about the endless possibilities that these models will create in the AI community and the innovative applications they will inspire.

FreeWilly1 and FreeWilly2 are available now for non-commercial use from Hugging Face. Researchers are already actively exploring the capabilities of these mighty new LLMs.