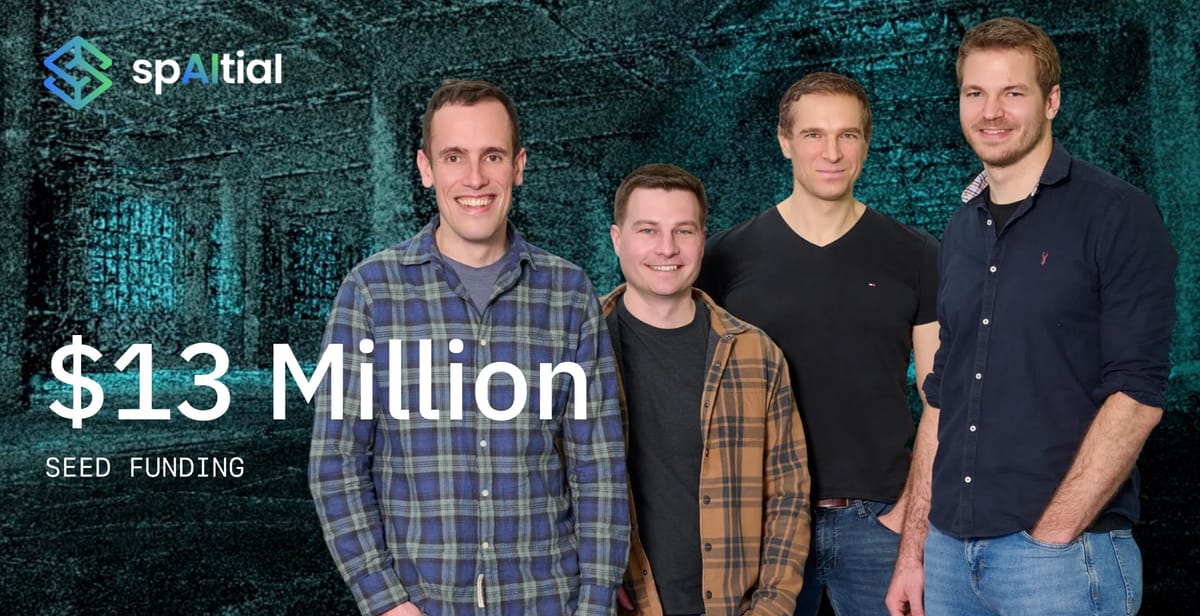

SpAItial, a Munich-based startup founded by Synthesia co-founder Matthias Niessner, has emerged from stealth with a $13 million seed round to build what the company calls "spatial foundation models" that can generate interactive, photorealistic 3D environments from simple text prompts or images.

Key Points:

- Founded by Synthesia co-founder Matthias Niessner and veteran AI researchers from Google and Meta

- Raised a $13M seed round led by Earlybird, unusually large for a European startup, despite showing only teaser demos

- Competing against well-funded rivals like World Labs ($230M, $1B+ valuation) and Odyssey ($27M total)

- Targeting gaming, film, CAD engineering, and robotics applications with "spatially intelligent" AI

AI has gotten pretty good at generating images and spinning up realistic video. But understanding and generating full 3D environments—spaces with geometry, depth, and physics? That’s a whole different level. Enter SpAItial, a new startup that just pulled back the curtain on its early technology, and it’s aiming directly at that gap.

In a teaser released this week, SpAItial showed off its tech: type in a short text prompt or feed it an image, and out comes a photorealistic 3D scene. Not just a flat render, but an environment that can be explored from multiple angles, with realistic materials, lighting, and spatial coherence. It’s an early look, but it already suggests the company is thinking far beyond the constraints of today’s AI media generation tools.

What’s powering all this is what SpAItial calls a Spatial Foundation Model (SFM), a term you’ll likely be hearing more often soon. Unlike large language models or diffusion-based image generators, SFMs are trained to natively understand the 3D world. That means they don’t just predict pixels; they infer geometry, physics, materiality, and how all of those interact in time and space. Think of it as teaching AI to see and build like a human would, rather than guessing what a photo looks like from the outside.

This approach unlocks some serious possibilities. Game developers could prototype immersive environments with a few lines of text. Engineers might simulate and tweak virtual twins of real buildings before breaking ground. And robots? They could navigate more like us, intuitively understanding how a room is laid out and what happens if something moves.

The team behind SpAItial isn’t short on brainpower either. Its co-founders include Matthias Niessner (TU Munich professor and Synthesia co-founder), Ricardo Martin-Brualla (formerly of Google’s 3D efforts), and David Novotny (ex-Meta, known for 3D asset generation). Add in researchers behind early neural rendering work like VoxGRAF and X-Fields, and you’ve got a deep bench pushing on what AI can actually know about the physical world.

Backing them is a $13 million seed round led by Earlybird VC, with a roster of angels that includes Synthesia execs, academic heavyweights, and enterprise AI veterans. That kind of capital (and pedigree) signals that SpAItial isn’t just chasing shiny demos—it’s building for deployment.

Of course, they’re not the only ones eyeing this space. World Labs, co-founded by AI pioneer Fei-Fei Li, has already raised $230 million at a valuation exceeding $1 billion and plans to release its first product later this year. Meanwhile, Odyssey, founded by self-driving car veterans, has raised $27 million total and takes a unique approach by sending teams with backpack-mounted camera systems to capture real-world 3D data for training its models.

But Niessner believes there's still relatively little competition compared to other types of foundation models, especially given the "bigger vision" of truly interactive 3D world generation.

For now, SpAItial is opening a waitlist and inviting early testers to try out the tech. No firm product launch date yet, but with talent like this and a demo that already looks a few steps ahead of the field, they’re not just dreaming in 3D—they’re building it.