Tencent has released Hunyuan-Large, a new open-source Mixture of Experts (MoE) model with 389 billion total parameters, 52 billion of which are active during computation. Tencent describes it as the largest open-source Transformer-based MoE model to date, and it supports a 256K-token context window.

Why it matters: The model performs strongly across a variety of tasks, including language understanding, reasoning, math, coding, and long-context processing. It outperforms Meta's Llama 3.1-70B and matches the larger Llama 3.1-405B on many key benchmarks. With this broad capability set, Hunyuan-Large is likely to be influential for both research and practical AI applications.

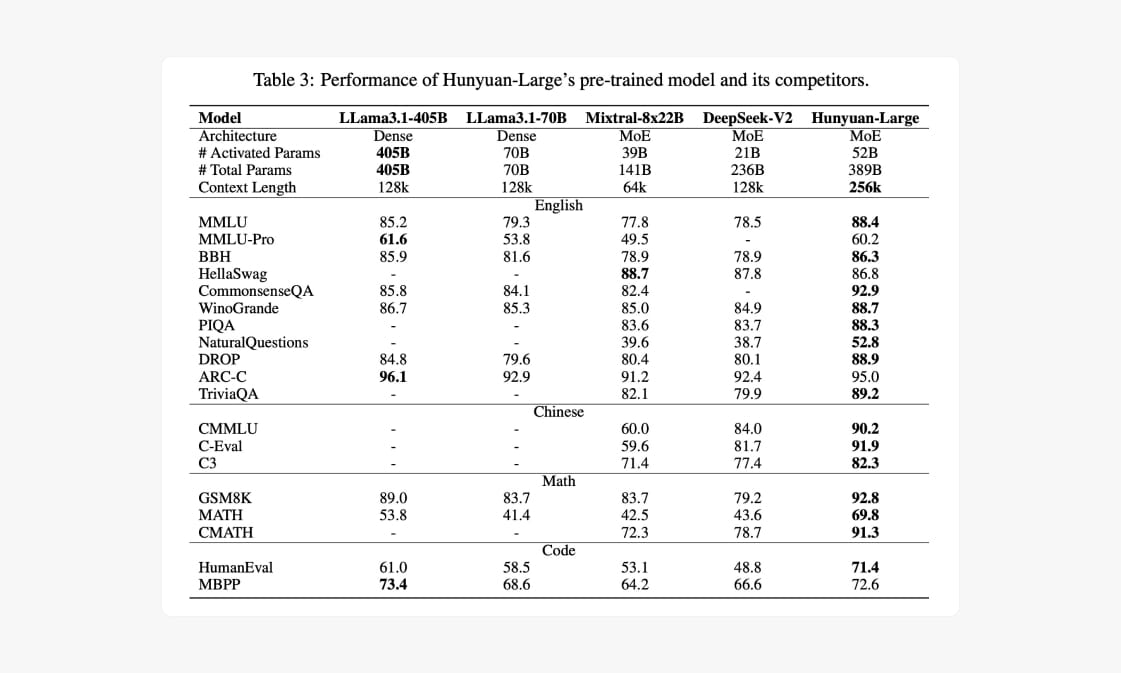

By the numbers: The model posts leading scores on key industry benchmarks:

- Scores 88.4% on MMLU (general knowledge and reasoning), surpassing Llama 3.1-70B's 79.3%.

- Achieves 92.8% accuracy on GSM8K (mathematical reasoning), surpassing the 89% scored by Llama 3.1-405B. It also surpasses other MoE-based models such as Mixtral-8x22B (83.7%) and DeepSeek-V2 (79.2%).

- Reaches 71.4% on HumanEval (code generation), outperforming all major open-source models.

Technical advantage: Unlike most open-source models, which are dense and run every parameter for every token, Hunyuan-Large uses an MoE architecture in which only the most relevant model components (the "experts") are activated for each input. This selective activation improves efficiency in both training and inference, reducing computational requirements without compromising performance.
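
To make the idea of selective activation concrete, here is a minimal sketch of top-k expert routing in plain Python. It is not Tencent's implementation: the dimensions, the number of experts, the top-k value, and every function name below are hypothetical choices for illustration only. The last lines compute the share of parameters that are active, using the 52 billion and 389 billion figures quoted above.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def apply_linear(weights, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def make_expert(dim, rng):
    """A toy 'expert': a single dim x dim linear layer (illustrative only)."""
    return [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(dim)]


def moe_forward(x, gate_weights, experts, top_k):
    """Route one token to its top_k experts and mix their outputs."""
    gate_probs = softmax(apply_linear(gate_weights, x))
    # Keep only the top_k highest-scoring experts; the rest are never run.
    chosen = sorted(range(len(experts)),
                    key=lambda i: gate_probs[i], reverse=True)[:top_k]
    norm = sum(gate_probs[i] for i in chosen)
    out = [0.0] * len(x)
    for i in chosen:
        weight = gate_probs[i] / norm  # renormalize over the chosen experts
        expert_out = apply_linear(experts[i], x)
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out, chosen


# Hypothetical toy sizes, not Hunyuan-Large's real configuration.
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
rng = random.Random(0)
experts = [make_expert(DIM, rng) for _ in range(NUM_EXPERTS)]
gate_weights = [[rng.gauss(0.0, 1.0) for _ in range(DIM)]
                for _ in range(NUM_EXPERTS)]
token = [rng.gauss(0.0, 1.0) for _ in range(DIM)]

output, used = moe_forward(token, gate_weights, experts, TOP_K)
print(f"experts run for this token: {len(used)} of {NUM_EXPERTS}")

# Share of parameters active per token, from the figures quoted in the article.
active_fraction = 52 / 389
print(f"active parameter share: {active_fraction:.1%}")
```

In this sketch, only two of the eight toy experts do any work for a given token, which is where the compute savings come from. By the article's own numbers, roughly 13% of Hunyuan-Large's parameters are active at a time.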

Zoom out: With Hunyuan-Large, Tencent adds a powerful new tool to the growing collection of open-source AI models. Much as Meta has found success with the open-science and open-source community, Tencent hopes to catalyze further innovation by offering AI researchers and developers a robust platform for future work.

Demo and Access:

- You can access the code and model weights on Hugging Face and GitHub.

- Check out the technical report for additional details.

- To simplify the training process, HunyuanLLM provides a pre-built Docker image.

- Try the model using the Hugging Face Space.

What’s next: Expect more companies and researchers to adopt Hunyuan-Large for advanced AI applications, particularly in domains needing long-context understanding and efficient, specialized reasoning. As the AI community experiments and builds on this foundation, we may see a surge of new, innovative solutions powered by the efficiency and versatility of MoE-based systems.

P.S. The model's license does not permit use within the European Union.