The Technology Innovation Institute (TII) has released Falcon Mamba 7B, a new large language model that employs a State Space Language Model (SSLM) architecture. This model, part of TII's Falcon series, represents a departure from traditional transformer-based designs and has been independently verified by Hugging Face as the top-performing open-source SSLM globally.

State Space Language Models are a newer approach to natural language processing that differs from the widely used transformer architecture. SSLMs borrow techniques from control theory to process sequential data, carrying information forward in a fixed-size state rather than attending over every previous token. This offers several potential advantages: these models can handle longer sequences of text more efficiently, require less memory to operate, and keep generation speed and memory use steady as the input grows.
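To make the control-theory idea concrete, a state space model can be written as a simple recurrence: a hidden state is updated from the previous state and the current input, and the output is read from that state. The following is a minimal Python sketch of a toy diagonal linear recurrence. The function name `ssm_scan` and the values of `A`, `B`, and `C` are illustrative assumptions, not Falcon Mamba's parameters, and Mamba-style layers additionally make these parameters depend on the input.

```python
def ssm_scan(inputs, a, b, c):
    """Run a toy diagonal linear state-space model over a sequence.

    For each input x_t:
        h_t = a * h_{t-1} + b * x_t    (elementwise, one entry per state dimension)
        y_t = sum(c * h_t)
    """
    state = [0.0] * len(a)  # fixed-size state, independent of sequence length
    outputs = []
    for x in inputs:
        state = [a_i * h_i + b_i * x for a_i, h_i, b_i in zip(a, state, b)]
        outputs.append(sum(c_i * h_i for c_i, h_i in zip(c, state)))
    return outputs, state


# Hypothetical toy parameters, chosen only for illustration.
A = [0.5, 0.9]  # how quickly each state dimension forgets the past
B = [1.0, 1.0]  # how strongly the input is written into the state
C = [1.0, 0.5]  # how the state is read out

outputs, final_state = ssm_scan([1.0, 0.0, 0.0], A, B, C)
print(outputs)            # one output per input step
print(len(final_state))   # state size equals len(A), however long the input is
```

The point of the sketch is that the loop only ever keeps `state`, whose size is set by the model and not by the number of tokens seen so far.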

The Falcon Mamba 7B model demonstrates these benefits in practice. It can generate significantly longer blocks of text without increasing memory requirements, addressing a common limitation in conventional language models. This capability allows for more efficient processing of extensive text data, potentially opening up new applications in areas requiring analysis of large documents or continuous text generation.
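The memory argument can be illustrated with some back-of-the-envelope arithmetic. A transformer typically caches keys and values for every token it has processed, so its cache grows linearly with sequence length, while a state space model keeps a state whose size does not depend on sequence length. The sketch below assumes hypothetical layer counts and dimensions (they are not taken from Falcon Mamba 7B, Llama, or Mistral) and ignores weights, activations, and smaller buffers.

```python
def kv_cache_bytes(seq_len, n_layers=32, n_kv_heads=8, head_dim=128, bytes_per_value=2):
    """Approximate key/value cache size of a hypothetical transformer.

    Keys and values (the factor 2) are stored for every token in every layer.
    All default dimensions are illustrative assumptions.
    """
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * seq_len


def ssm_state_bytes(seq_len, n_layers=64, d_inner=8192, d_state=16, bytes_per_value=2):
    """Approximate recurrent state size of a hypothetical state space model.

    seq_len is accepted only to mirror kv_cache_bytes; the result ignores it.
    All default dimensions are illustrative assumptions.
    """
    return n_layers * d_inner * d_state * bytes_per_value


MIB = 2 ** 20
for seq_len in (1024, 8192, 65536):
    kv = kv_cache_bytes(seq_len) / MIB
    ssm = ssm_state_bytes(seq_len) / MIB
    print(f"{seq_len:>6} tokens: KV cache {kv:8.0f} MiB, SSM state {ssm:4.0f} MiB")
```

Under these assumed dimensions the cache doubles whenever the sequence length doubles, whereas the state size stays the same at every length.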

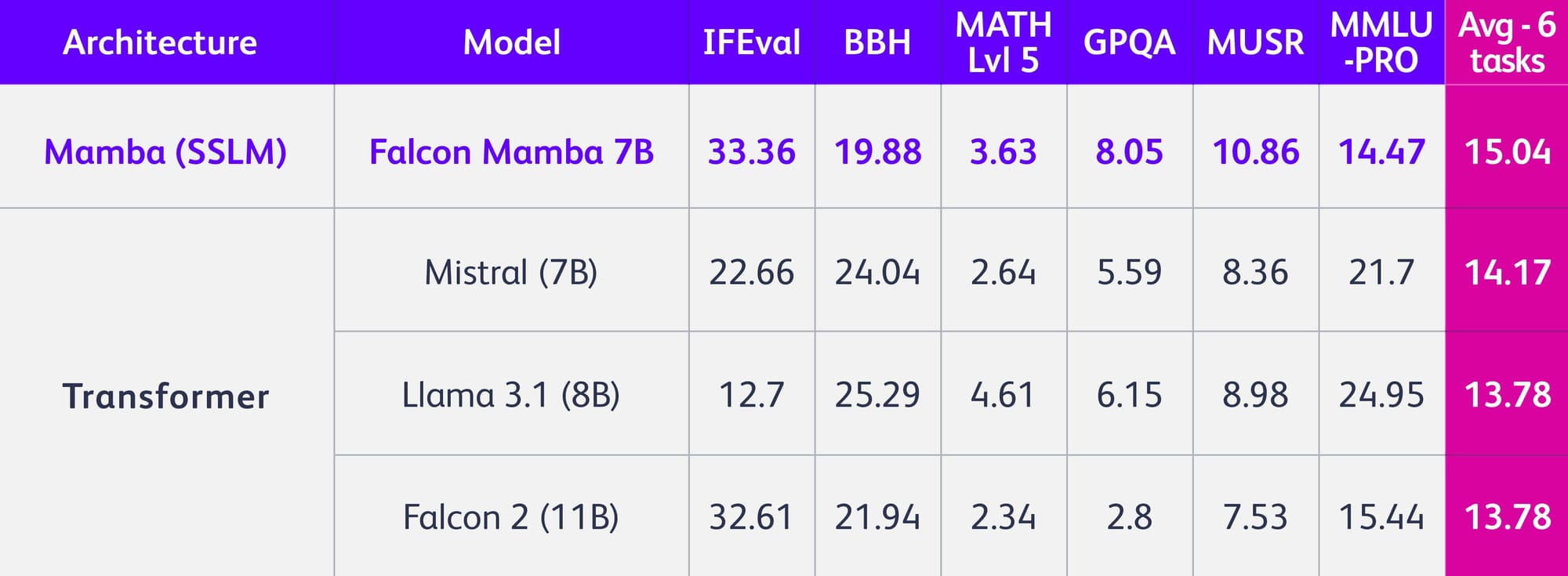

In benchmark tests, Falcon Mamba 7B outperformed established transformer-based models, including Meta's Llama 3.1 8B and Mistral AI's Mistral 7B. This result suggests that SSLMs can compete with, and in some cases exceed, transformer-based models of similar size on large-scale language processing tasks.

Falcon Mamba 7B is available on the Hugging Face platform, where researchers and developers can access and test it. An interactive playground also lets users explore its capabilities across various language tasks.
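For readers who want to try the model locally, the following is a minimal sketch using the Hugging Face `transformers` library. The repository name in `MODEL_ID` is an assumption not stated in this article and should be confirmed on the Hub, and the helper name `generate_text` is purely illustrative. It also assumes PyTorch and a `transformers` release that supports this architecture are installed.

```python
# Assumed repository name; confirm the exact identifier on the Hugging Face Hub.
MODEL_ID = "tiiuae/falcon-mamba-7b"


def generate_text(prompt, max_new_tokens=50, model_id=MODEL_ID):
    """Load the tokenizer and model, then generate a continuation of `prompt`."""
    # Imported lazily so that defining this function does not load heavy libraries.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Tokenize the prompt into PyTorch tensors and generate new tokens.
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Example call (downloads the model weights on first use):
# print(generate_text("State space models are"))
```

Calling `generate_text` downloads several gigabytes of weights the first time, so the example call is left commented out.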

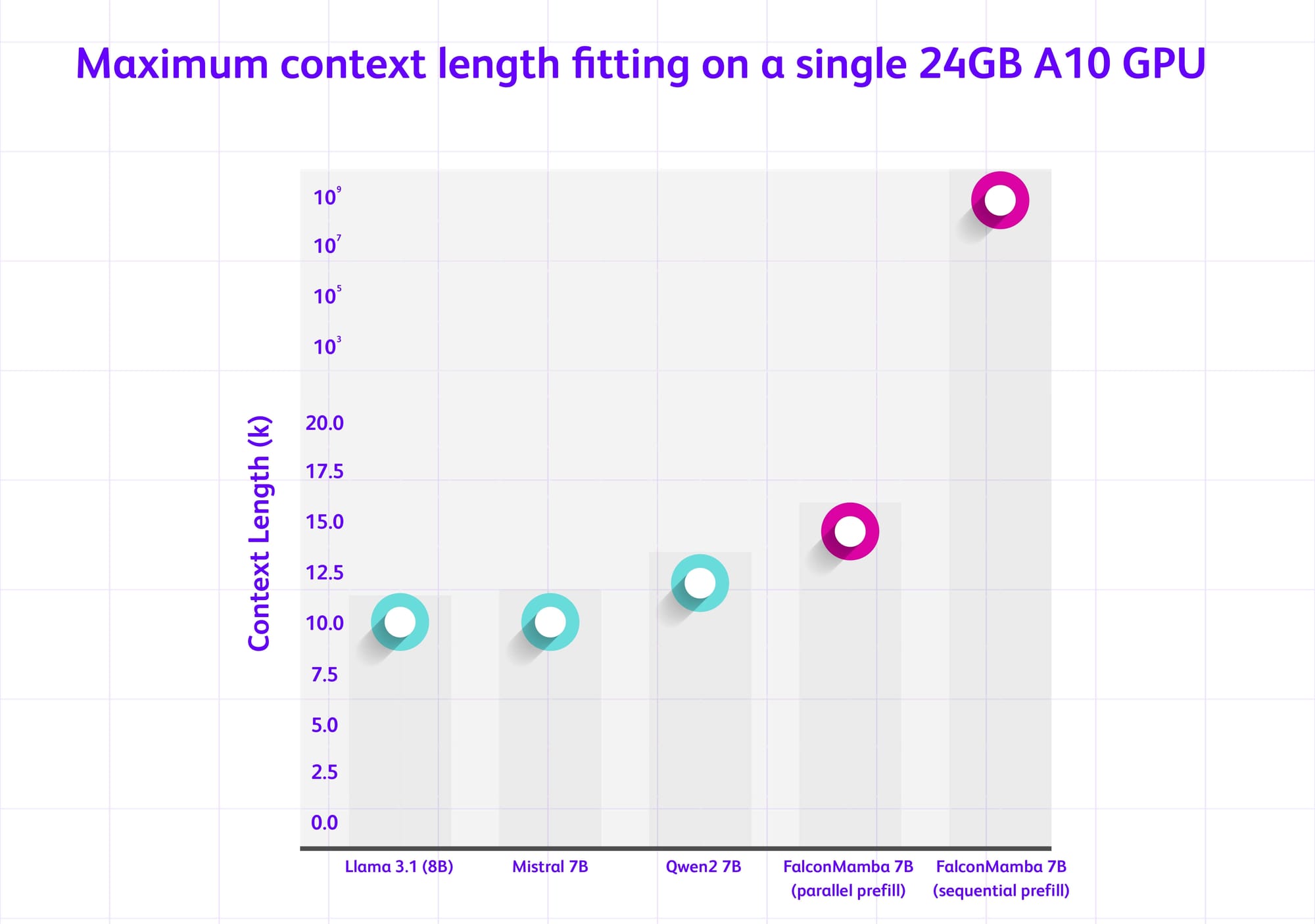

Performance tests on standard GPU hardware showed that Falcon Mamba 7B can process longer sequences than transformer-based models while maintaining steady generation speed and memory usage. For instance, on an NVIDIA A10 GPU with 24 GB of memory, the model handled extended text inputs more efficiently than the transformer models it was compared against.

As researchers and developers begin working with Falcon Mamba 7B, its practical impact on natural language processing applications remains to be fully assessed. However, its release represents a significant step in language model development, potentially offering more efficient and capable AI systems for a wide range of text-based tasks.

The introduction of Falcon Mamba 7B by TII, based in Abu Dhabi, also underscores the global nature of AI innovation, with contributions coming from research institutions worldwide. As the field of natural language processing continues to evolve, models like Falcon Mamba 7B may pave the way for new advancements in how AI systems understand and generate human language.