Beijing-based AI startup Zhipu AI held its first-ever Zhipu DevDay, revealing significant advances in its development of large language models. The highlight was the launch of the company's fourth-generation multimodal foundation model, GLM-4, which Zhipu claims is nearly on par with OpenAI's GPT-4 in overall capabilities.

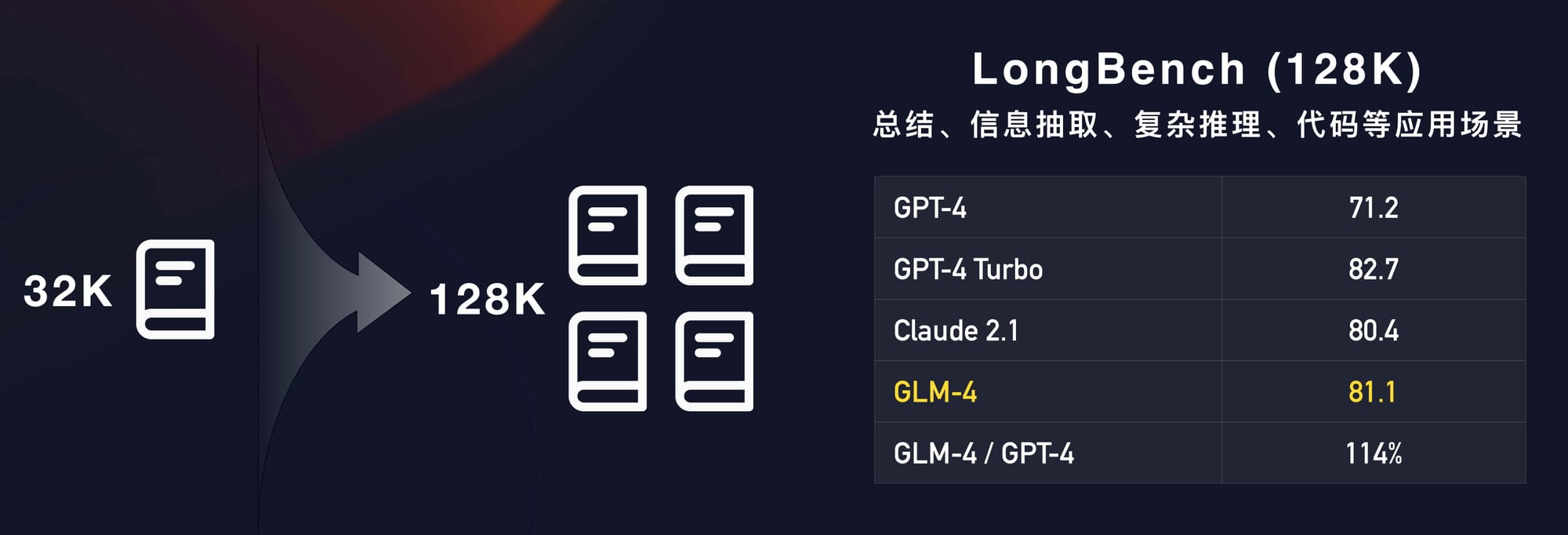

Specifically, GLM-4 supports a context length of 128k tokens (equivalent to about 300 pages of text), and Zhipu says it maintains nearly 100% accuracy even with very long inputs. Compared to the previous version, GLM-4 shows significant improvements in areas such as text-to-image generation and multimodal understanding. The company also cites faster inference, higher concurrency support, and lower inference costs.

Zhipu CEO Zhang Peng said GLM-4 is roughly 60% more capable than its predecessor and is approaching GPT-4's level on natural language processing benchmarks.

Zhang emphasized that GLM-4 performs even better in Chinese than in English on tests of alignment, comprehension, reasoning, and role-playing. This could give the model an edge in one of AI's most important markets.

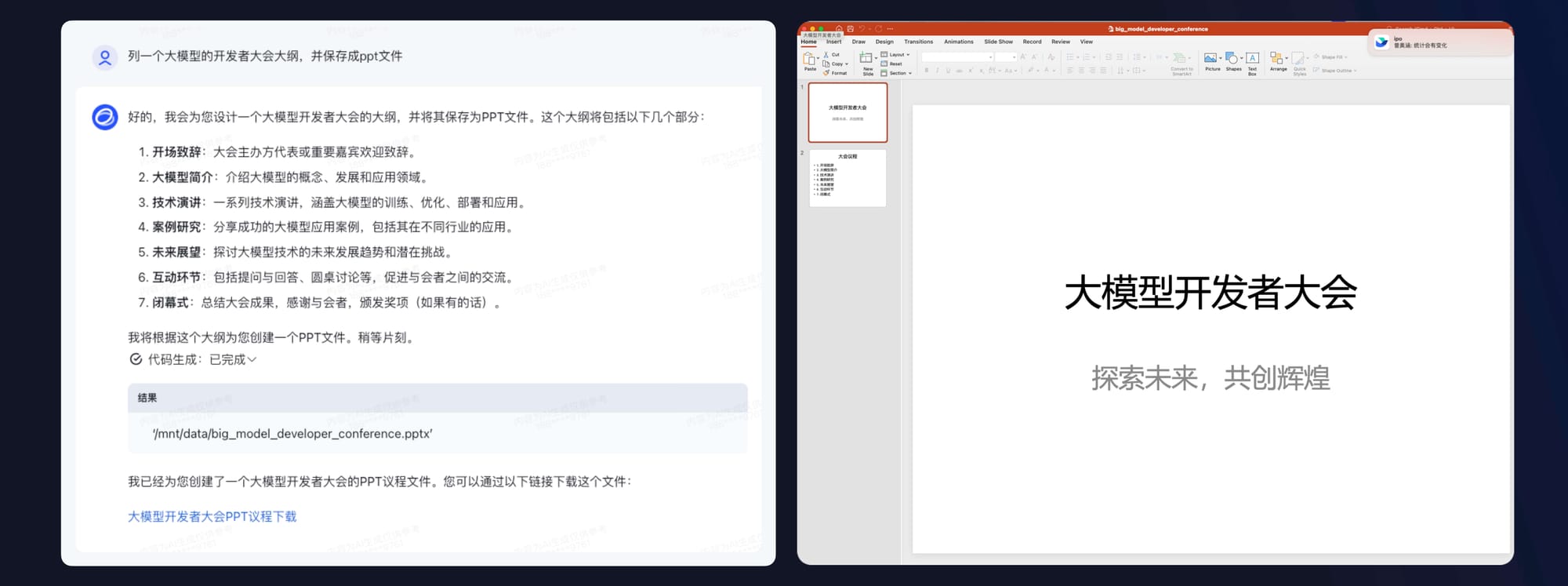

Beyond core language skills, GLM-4 introduces an "All Tools" feature that lets it autonomously plan and carry out complex instructions by calling the appropriate tools, such as a web browser, code interpreter, or image generator. It can handle multi-step tasks such as data analysis, chart plotting, and PowerPoint slide generation.

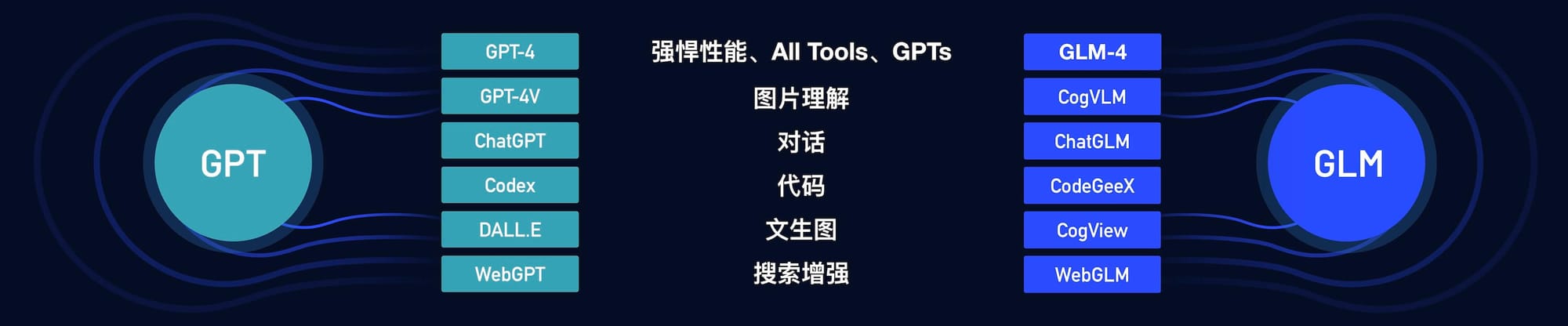

Zhipu also launched "GLMs", which let anyone easily create their own personalized AI agents using natural language prompts. Users can share the agents they create via a new AI Agent Center. This essentially copies the "GPTs" capability that OpenAI added to ChatGPT in November, as well as the GPT Store, which launched last week.

Looking ahead, Zhipu AI wants to energize the local open-source AI community. The company announced several funds to spur R&D and commercialization of large language models:

- An open-source fund offering GPUs, cash, and free API access to support the developer community

- A $100 million "Z Ventures" startup fund targeting innovative LLM companies

- Expanded academic grants and industry partnerships through organizations such as the China Computer Federation (CCF)

Since its founding in 2019, Zhipu AI has raised over 2.5 billion yuan (approximately 360 million USD) from backers including Alibaba, Tencent, and GGV Capital. It now partners with hundreds of enterprises exploring real-world applications of its GLM models. The launch of GLM-4 highlights Zhipu's rapid progress within China's domestic AI industry.

Of course, the elephant in the room is that Zhipu's offerings bear a striking resemblance to those of OpenAI. From the naming of its models (GLM vs. GPT) to its customizable GLMs, Zhipu seems to be following OpenAI's product roadmap very closely. For now, rather than innovating in new areas, Zhipu's strategy may simply be to reproduce OpenAI's work and capture China's massive AI market.

Ultimately, Zhipu will need to demonstrate truly novel capabilities that fit the unique needs of Chinese users if it wants to shake off the notion that it is merely an OpenAI copycat. Still, by essentially "cloning" OpenAI, Zhipu puts accessible, cutting-edge AI into the hands of Chinese developers and users faster than it could by developing wholly original models from scratch.