Adobe is expanding its suite of artificial intelligence creative tools with the announcement of Project Music GenAI Control, a new system that generates original music with text prompts and allows deep editing control over the audio output.

Unveiled at the recent Hot Pod Summit in New York, Project Music GenAI Control uses the same generative AI techniques behind Adobe's popular image generation system Firefly. Users simply input text descriptions like "happy dance beat" or "somber piano" to produce unique musical compositions in custom styles.

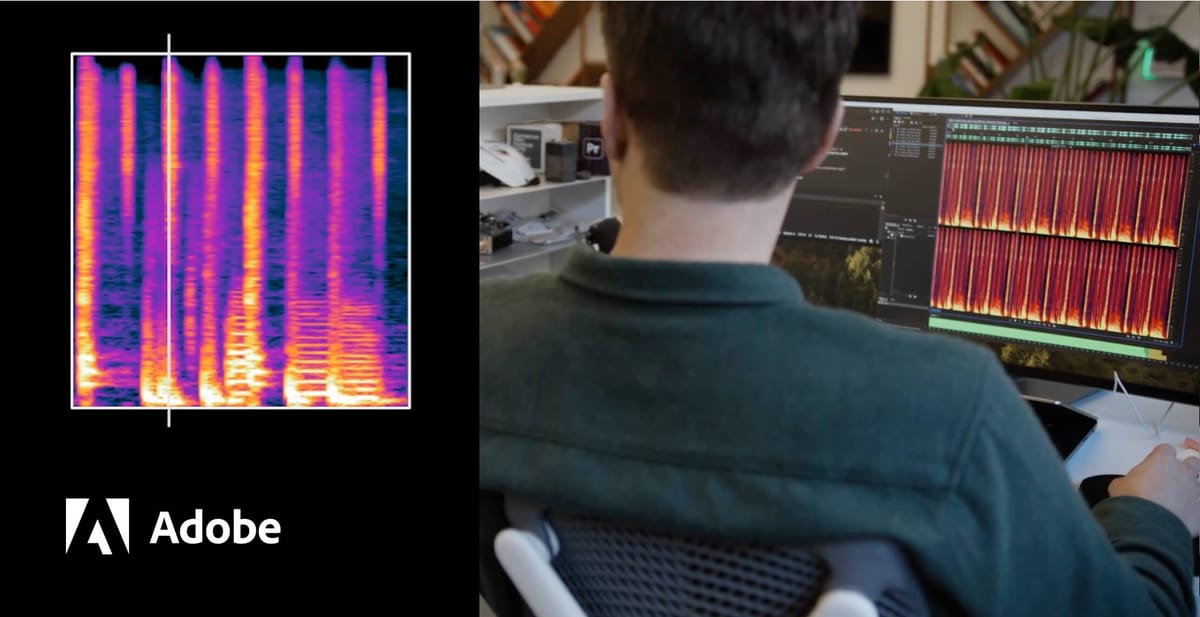

But unlike existing AI music tools, Adobe's new offering goes beyond music generation: it also allows you to "edit" the music. Once the AI creates a music track, built-in controls let users tweak tempo, patterns, intensity and structure at the waveform level. Sections can be remixed, extended or looped to craft bespoke background audio, transitions or soundtracks.

"It's taking it to the level of Photoshop by giving creatives the same kind of deep control to shape, tweak, and edit their audio," said senior research scientist Nicholas Bryan in Adobe's announcement. "It's a kind of pixel-level control for music."

The advanced capabilities could solve major pain points for content creators who need customized music and sound for their projects but lack audio engineering expertise. This could transform workflows for needs ranging from podcast intros and outros to video backing tracks and ambient game soundscapes.

Developed in collaboration with leading AI researchers, Project Music GenAI Control uses the DITTO framework. Short for "Diffusion Inference-Time T-Optimization," DITTO allows fine-grained control over text-to-music AI models without any additional training.

DITTO optimizes initial noise latents through any differentiable feature matching loss to achieve a target output, leveraging gradient checkpointing for memory efficiency. The method demonstrates a wide range of applications for music generation, including inpainting, outpainting, and looping, as well as intensity, melody, and musical structure control.
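To make the idea concrete, here is a minimal toy sketch of inference-time optimization of an initial latent. It is not Adobe's implementation: the "sampler" is a hypothetical stand-in for a real diffusion model, the frozen weight `W`, the per-segment energy feature, and every function name are assumptions made for illustration, and the toy stores all intermediate states rather than using gradient checkpointing. What it does share with the description above is the shape of the procedure: the model stays frozen, and only the starting noise is adjusted by following the gradient of a differentiable feature-matching loss.

```python
import math
import random

W = 1.5  # frozen toy "model" weight (assumption); never updated below


def sample(x_T, steps):
    """Toy deterministic sampler: applies the same frozen update `steps` times.
    Returns the final output and every intermediate state (kept for backprop)."""
    states = [list(x_T)]
    for _ in range(steps):
        states.append([0.9 * v + 0.1 * math.tanh(W * v) for v in states[-1]])
    return states[-1], states


def feature(x, n_seg):
    """Differentiable feature: mean energy of each equal-length segment."""
    seg = len(x) // n_seg
    return [sum(v * v for v in x[k * seg:(k + 1) * seg]) / seg for k in range(n_seg)]


def loss_and_grad(x_T, steps, target):
    """Feature-matching loss and its gradient with respect to the initial noise."""
    n_seg = len(target)
    x0, states = sample(x_T, steps)
    seg = len(x0) // n_seg
    diff = [f - t for f, t in zip(feature(x0, n_seg), target)]
    loss = sum(d * d for d in diff)
    # dL/dx0 via the chain rule through the segment energies.
    g = [2 * diff[i // seg] * 2 * x0[i] / seg for i in range(len(x0))]
    # Backpropagate through the sampler steps in reverse order.
    for x in reversed(states[:-1]):
        g = [gi * (0.9 + 0.1 * W * (1 - math.tanh(W * v) ** 2)) for gi, v in zip(g, x)]
    return loss, g


def optimize_noise(x_T, steps, target, iters=100, lr=1.0):
    """Gradient descent on the initial noise, halving the step until the loss drops."""
    x = list(x_T)
    loss, g = loss_and_grad(x, steps, target)
    history = [loss]
    for _ in range(iters):
        step = lr
        while step > 1e-8:
            cand = [v - step * gi for v, gi in zip(x, g)]
            cand_loss, cand_g = loss_and_grad(cand, steps, target)
            if cand_loss < loss:
                x, loss, g = cand, cand_loss, cand_g
                break
            step *= 0.5
        history.append(loss)
    return x, history


rng = random.Random(0)
x_init = [rng.gauss(0.0, 1.0) for _ in range(32)]
target = [0.2, 0.4, 0.6, 0.8]  # hypothetical rising "intensity" curve
x_opt, history = optimize_noise(x_init, steps=10, target=target)
print(f"loss before: {history[0]:.4f}, after: {history[-1]:.4f}")
```

In this sketch, changing the control target only means swapping `feature` and `target` for another differentiable pair; the sampler and its weight are left untouched throughout.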

Outpainting Example:

The beauty of DITTO lies in its ability to achieve high-quality, flexible control of diffusion models without ever fine-tuning the underlying model. When compared to related training, guidance, and optimization-based methods, DITTO outperforms comparable approaches on controllability, audio quality, and computational efficiency.

Inpainting Example:

Zachary Novack, a research scientist intern at Adobe and the first author on the DITTO paper, emphasizes the potential of this method. "DITTO achieves state-of-the-art performance on nearly all tasks," he writes, "opening the door for high-quality, flexible, training-free control of diffusion models."

"AI is generating music with you in the director's seat and there's a bunch of things you can do with it," said Gautham Mysore, Adobe's head of audio/video AI research. "[The tool is] giving you these various forms of control so you can try things out. You don't have to be a composer, but you can get your musical ideas out there."