Alibaba Cloud continues to push the boundaries of AI technology with the latest additions to its Qwen series of open-source AI models. The recent release of Qwen-1.8B and Qwen-72B, along with specialized chat and audio models, adds new dimensions to Alibaba's already robust AI offerings. These models underscore Alibaba's commitment to advancing AI capabilities, offering enhanced versatility and performance in language and audio processing.

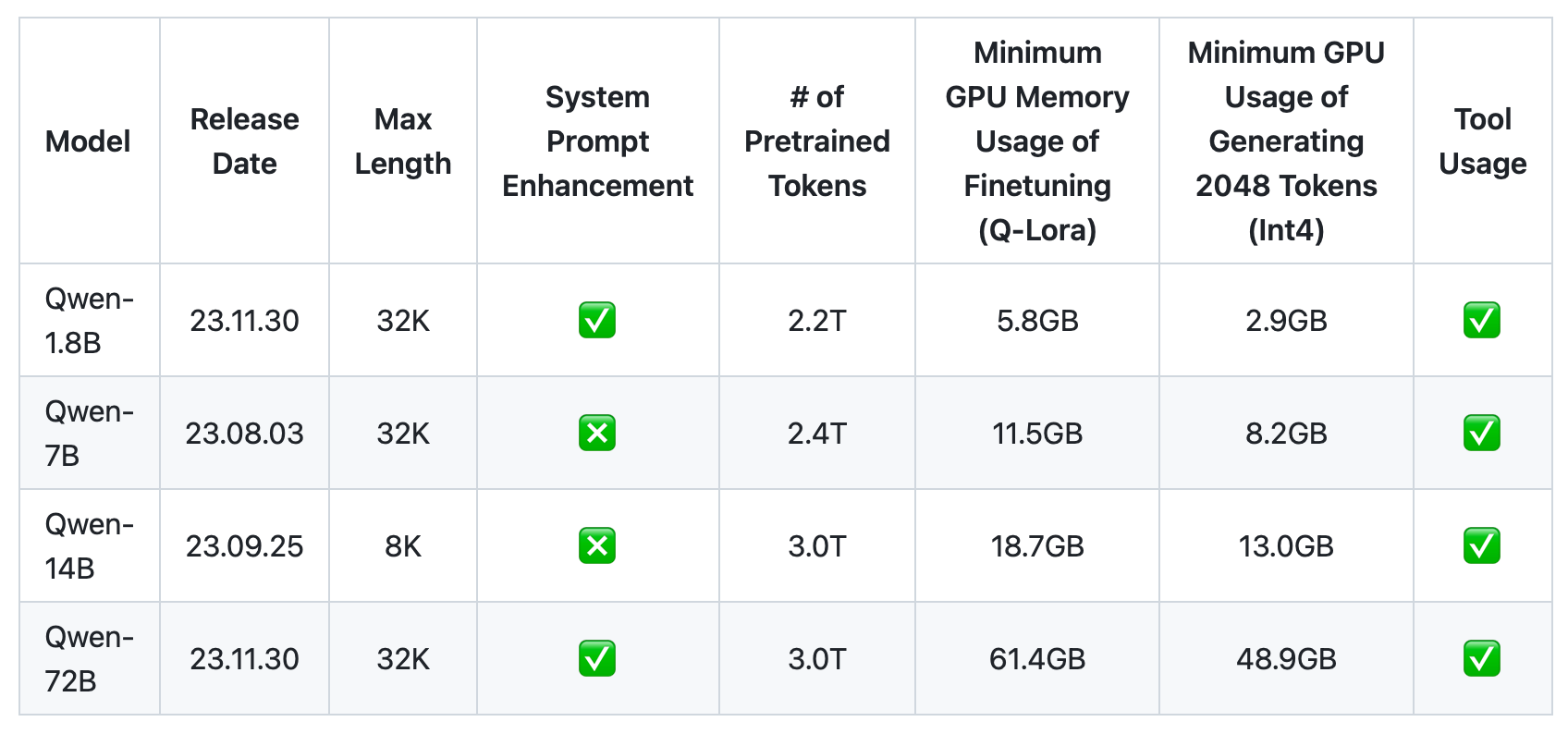

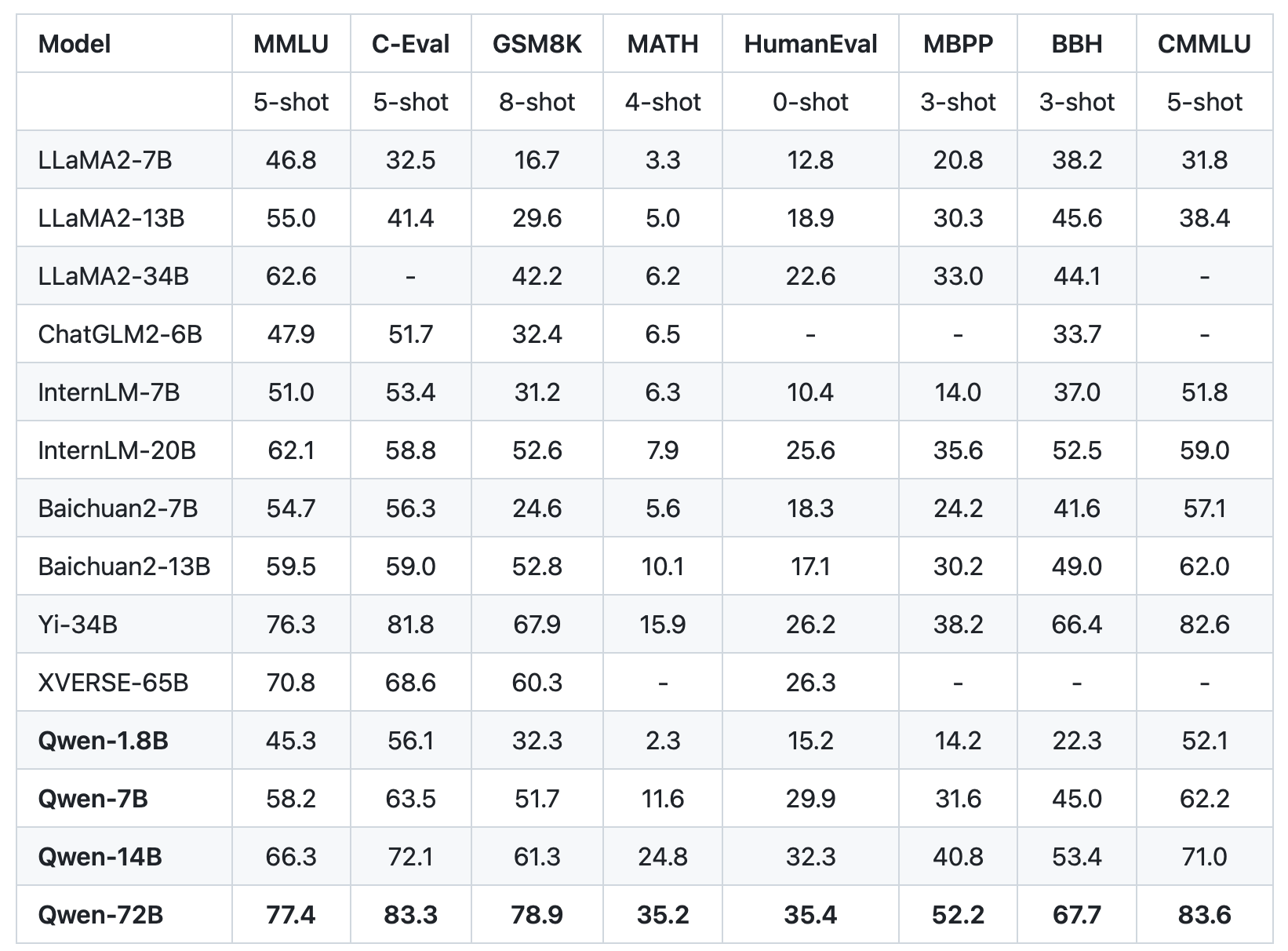

The Qwen series, which already includes Qwen-7B and Qwen-14B, has been extended with Qwen-1.8B and its much larger counterpart, Qwen-72B. Qwen-1.8B, a transformer-based model with 1.8 billion parameters, is pretrained on a corpus of over 2.2 trillion tokens. It supports a context length of 8192 tokens and performs well on a range of language tasks in both Chinese and English, surpassing many similar-sized and even larger models.

Notably, Qwen-1.8B offers a cost-effective deployment path through its int4 and int8 quantized versions, which significantly reduce memory requirements and make it practical for a range of applications. It also has a vocabulary of over 150K tokens, which strengthens its multilingual capabilities.
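To give a rough sense of why quantization matters, the sketch below estimates the memory needed just to hold the weights of a 1.8-billion-parameter model at different precisions. It is a back-of-the-envelope calculation, not a measurement of Qwen-1.8B: the helper `weight_memory_gib` is a hypothetical name introduced here, and the estimate assumes every parameter is stored at the stated bit width, ignoring activations, the KV cache, and any quantization metadata such as scales.

```python
def weight_memory_gib(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in GiB (hypothetical helper)."""
    return num_params * bits_per_param / 8 / 2**30


# Parameter count as stated in the article; real checkpoints may differ slightly.
QWEN_1_8B_PARAMS = 1.8e9

# Assumption: all parameters are stored at the same precision.
estimates = {
    name: weight_memory_gib(QWEN_1_8B_PARAMS, bits)
    for name, bits in [("fp16/bf16", 16), ("int8", 8), ("int4", 4)]
}

for name, gib in estimates.items():
    print(f"{name:>9}: ~{gib:.2f} GiB of weights")
```

Under these assumptions, halving the bit width halves the weight footprint, so int4 needs about a quarter of the memory of 16-bit weights. Actual usage at inference time will be higher once runtime buffers are included.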

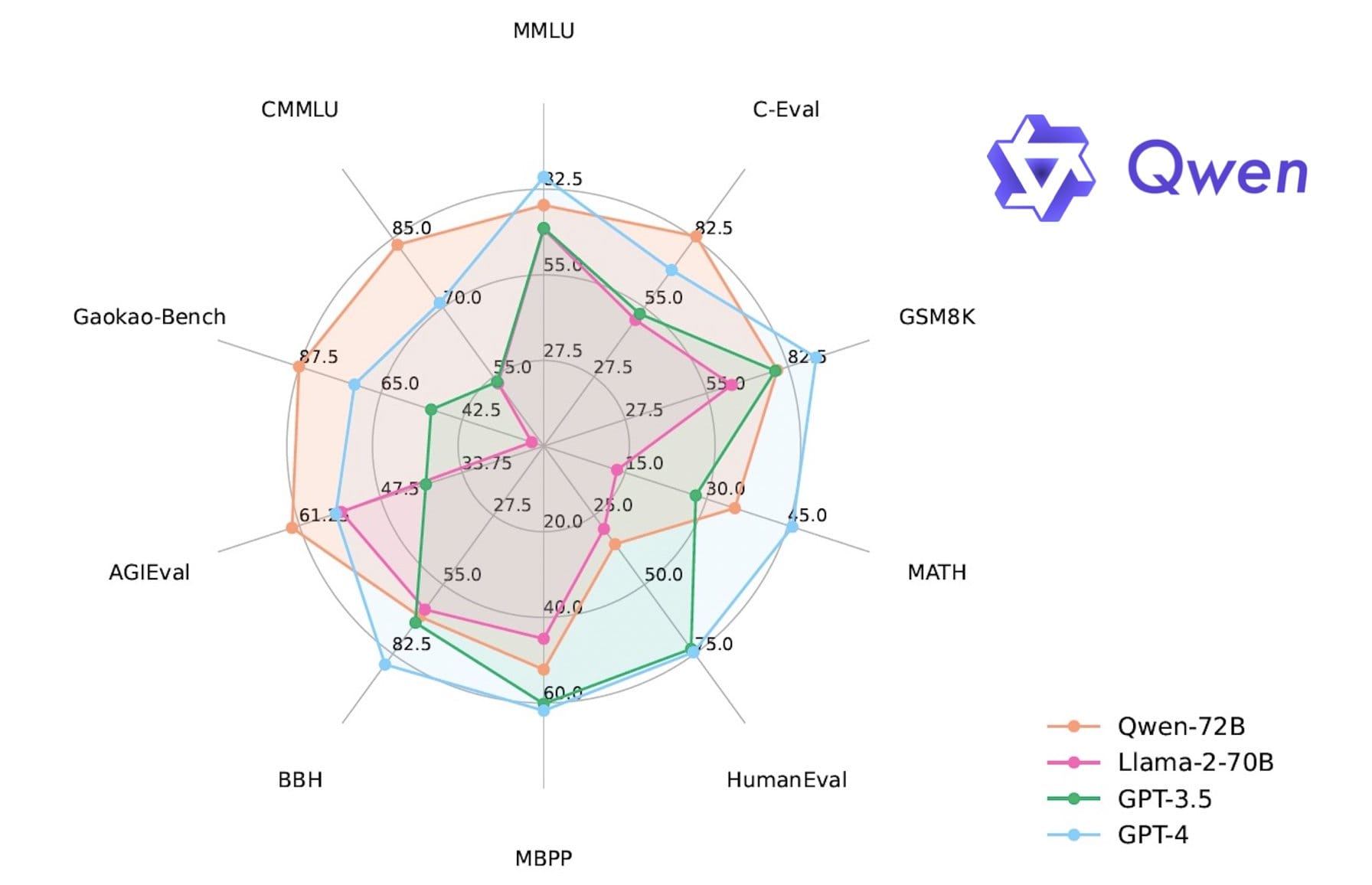

Qwen-72B, the larger model, is trained on an impressive 3 trillion tokens. This model achieves superior performance over GPT-3.5 in the majority of tasks and outshines LLaMA2-70B across all evaluated tasks.

Despite the models' large parameter counts, Alibaba has engineered them for low-cost deployment, with quantized versions bringing minimum memory usage under 3GB. This dramatically lowers the barrier to working with very large models, which once cost millions in cloud computing.

Alongside the Qwen base models, Alibaba launched Qwen-Chat, a set of fine-tuned versions tailored for AI assistance and conversation. Qwen-Chat can chat naturally, summarize text, translate between languages, generate content, and even interpret and run code.

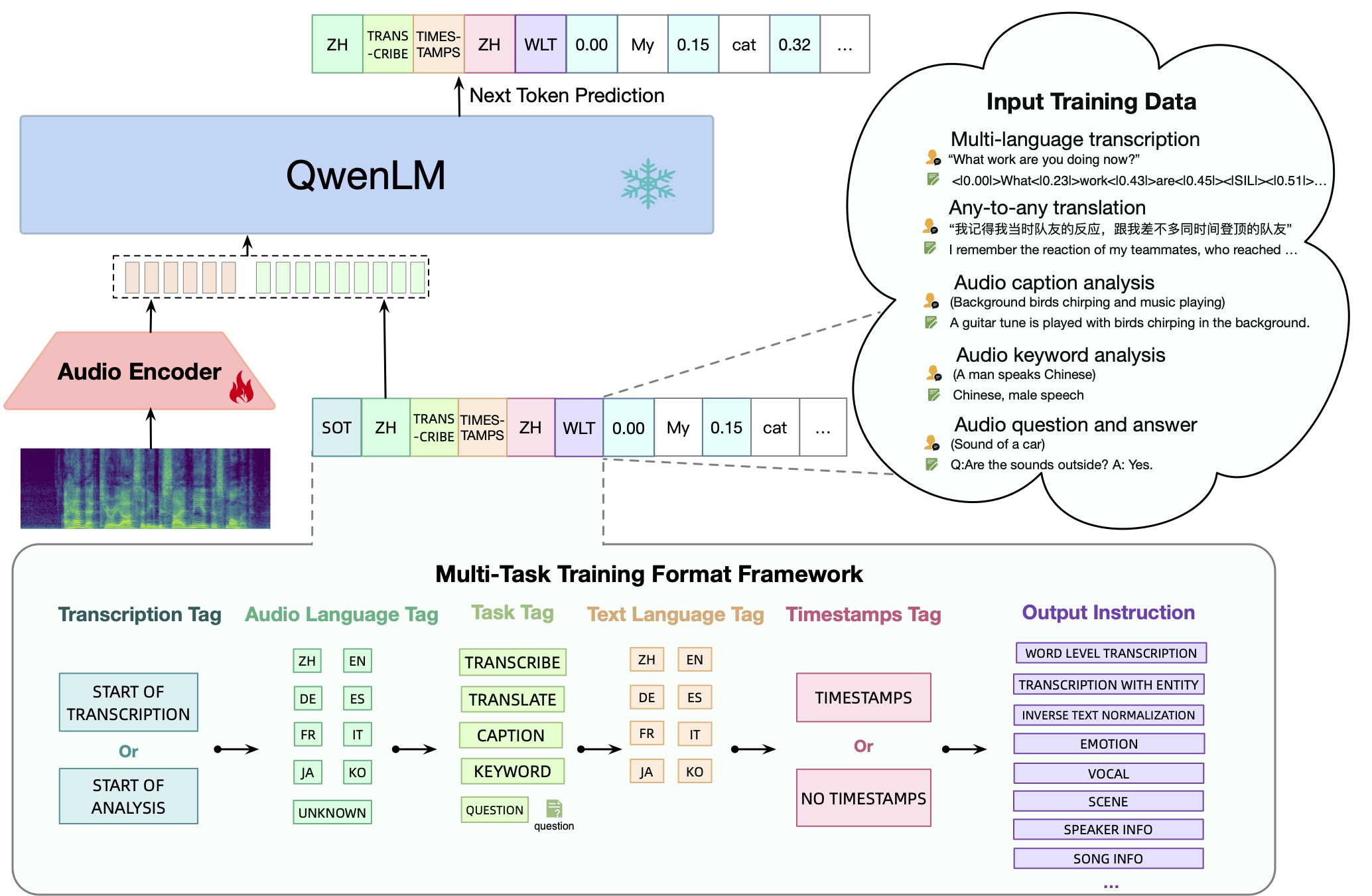

Alibaba's Qwen-Audio represents a significant leap in multimodal AI, processing diverse audio inputs alongside text to produce text outputs. Even without fine-tuning, Qwen-Audio already produces state-of-the-art results in speech recognition and on a range of audio understanding benchmarks.

As a foundation audio-language model, Qwen-Audio sets a new standard in the audio domain. It uses a multi-task learning framework to handle a variety of audio types and performs strongly across numerous benchmarks, including state-of-the-art results on AISHELL-1 and VocalSound.

Example output: "OK. The sentence “わかりました。田舎に行くことに同意します。田舎暮らしを体験してみるのもいいかもしれません。” translated into English is “I understand. I agree to go to the countryside. It’s also good to experience country life.”"

Qwen-Audio's versatility extends to multi-turn chats with both audio and text inputs, covering functionality that ranges from sound understanding to music appreciation and speech editing tools.

With these new models, Alibaba Cloud not only reinforces its position as a leader in AI innovation but also makes a valuable contribution to the open-source community.