Last month, Amazon announced a $1.25 billion investment in AI startup Anthropic with the option to invest up to an additional $2.75 billion. The partnership commits Anthropic to using AWS as its primary cloud provider and Amazon's Trainium and Inferentia chips to train and run Anthropic’s foundation models.

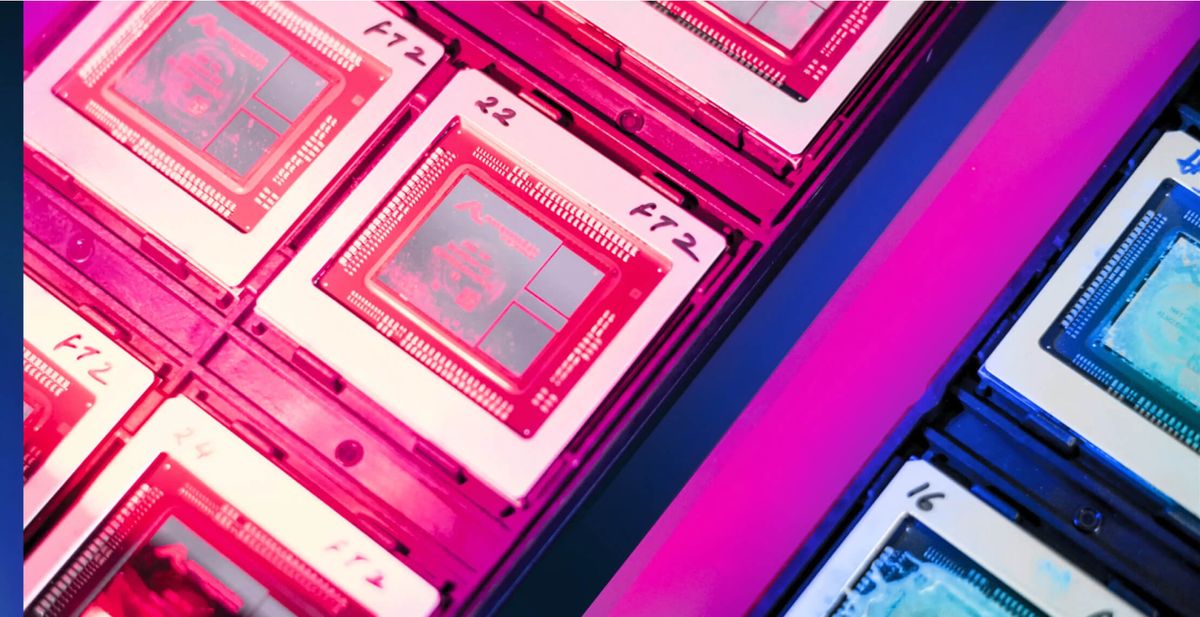

Amazon is now heavily marketing its custom AI accelerators to other potential customers and touting their performance advantages over traditional GPU setups. According to AWS, its Trainium chip cuts training times and costs by up to 50% for complex models like Claude, and its specialized Inferentia2 inference chip cuts inference costs by up to 50%.

This comes as Microsoft is set to unveil its own AI chip, codenamed “Athena,” next month. Athena is expected to rival NVIDIA's H100 and could allow Microsoft and customers like OpenAI to reduce their reliance on NVIDIA GPUs, cutting costs and giving them more leverage as demand surges.

Beyond Anthropic, major customers of AWS's AI accelerators include Snap, Qualtrics, Finch Computing, Dataminr, and Autodesk. Finch says it has achieved 80% lower inference costs, while Dataminr reports 9x better throughput per dollar after optimizing for Inferentia. Amazon also highlights that running Alexa on Inferentia has lowered both costs and latency.

Demand for powerful hardware to run large generative AI models is accelerating. NVIDIA, already the market leader in high-end AI processors, plans to release its next-generation GH200 Grace Hopper Superchip platform in Q2 2024. According to NVIDIA, the GH200 will run models 3.5x larger than the previous version while improving performance with 3x faster memory bandwidth.

The expanded Anthropic collaboration highlights AWS’s aggressive push to sell companies on its custom-designed AI chips. While NVIDIA GPUs dominate today, AWS is touting major cost and performance improvements from its Trainium and Inferentia silicon as an alternative. The stakes are even higher for AWS now that Microsoft is entering the fray. Given its exclusive access to OpenAI's influential GPT models, Microsoft will likely attract many customers.

By aligning with Anthropic, AWS aims to showcase what its silicon can do for generative models and to convince companies that its homegrown chips are best suited for the future of AI.