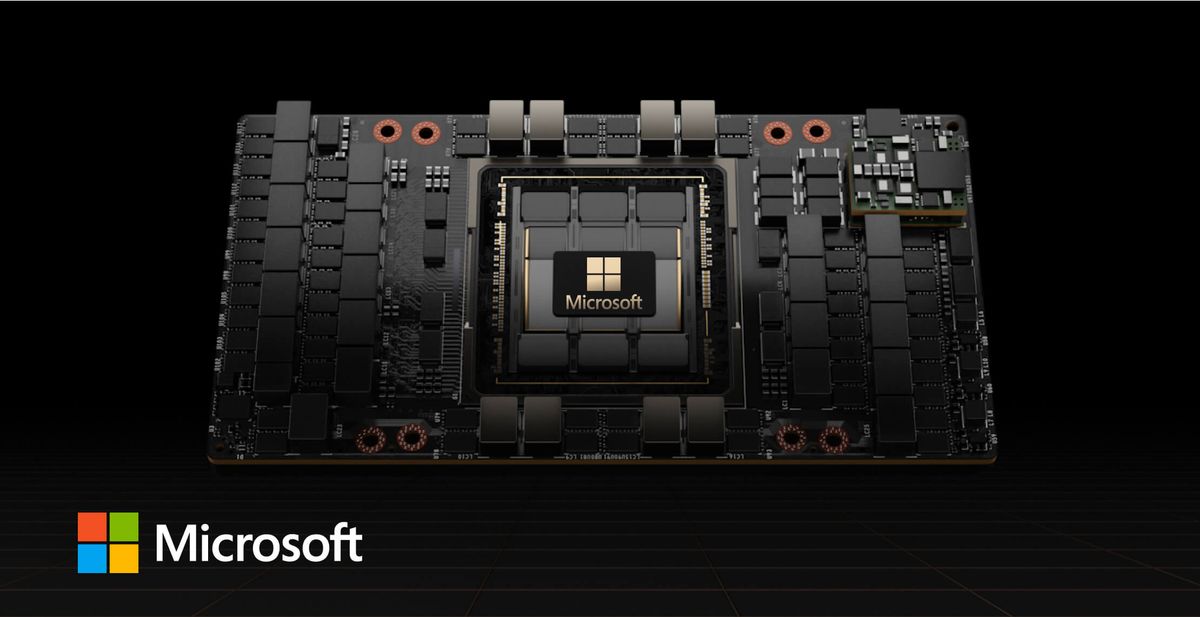

In a strategic move, Microsoft plans to debut its first AI chip next month, according to a new report. Codenamed "Athena," the chip could allow Microsoft to reduce its reliance on NVIDIA-designed GPUs, which have been in short supply amid surging demand.

The chip reveal will likely occur at Microsoft's Ignite conference in Seattle starting November 14th. Athena is expected to compete with NVIDIA's flagship H100 GPU for AI acceleration in data centers. The custom silicon has been secretly tested by small groups at Microsoft and partner OpenAI.

Microsoft began developing Athena around 2019, seeking to cut costs and gain leverage with NVIDIA. Azure currently depends on NVIDIA GPUs for AI features used by Microsoft, OpenAI, and cloud customers. But with Athena, Microsoft can follow the lead of rivals AWS and Google in offering homegrown AI chips to cloud users.

Performance details remain unclear, but Microsoft hopes Athena can match the in-demand H100. Although many companies tout superior hardware and lower costs, NVIDIA GPUs remain the preferred choice for AI developers because of the company's CUDA platform. Persuading developers to adopt new hardware and software will be key for Microsoft.

The plan may also ease Microsoft's dependence on NVIDIA while GPU supplies remain tight. After it began working closely with OpenAI, Microsoft reportedly ordered at least hundreds of thousands of NVIDIA chips just to support OpenAI's products and research. Using its own silicon instead could yield substantial cost savings.

OpenAI may also be considering reducing its reliance on Microsoft and on NVIDIA's chips. Earlier today, Reuters reported that the AI research lab is exploring making its own AI chips. Recent job listings on OpenAI's website also suggest the company intends to recruit talent to evaluate and co-engineer AI hardware.

While Microsoft and other cloud service providers have no immediate plans to stop buying GPUs from NVIDIA, persuading their cloud customers to adopt more in-house chips instead of NVIDIA's GPU servers could be economically advantageous in the long run. Microsoft is also working closely with AMD on AMD's upcoming AI chip, the MI300X. This diversified approach gives Microsoft options as AI workloads surge, and cloud competitors are employing similar tactics to avoid vendor lock-in.

Amazon and Google have made their AI chips a central part of the marketing for their cloud businesses. Amazon's investment in Anthropic, a competitor to OpenAI, came with the stipulation that Anthropic would use Amazon's AI chips, Trainium and Inferentia. Meanwhile, Google Cloud has publicized that customers such as AI image generator Midjourney and Character AI use the company's tensor processing units (TPUs).

The stakes are high as AI chips become vital components of data centers. With Athena, Microsoft will join its rivals in vying for market share in the fast-growing AI silicon market, offering cloud customers greater choice while charting a more independent course for its next-generation AI infrastructure.