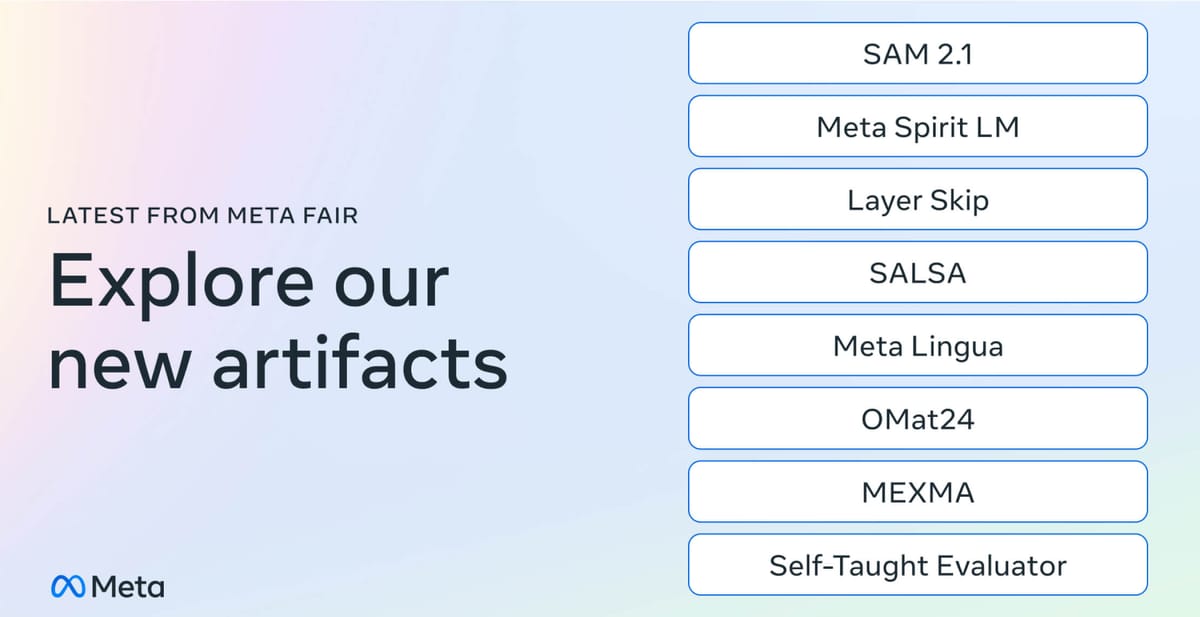

Meta's Fundamental AI Research (FAIR) team has released a large batch of new AI models, datasets, and tools in pursuit of advanced machine intelligence. The package includes eight distinct research artifacts, spanning segmentation, speech and language modeling, cryptography, materials science, and model evaluation, and is intended to accelerate innovation across the field.

At the forefront of this release is Meta Segment Anything Model 2.1 (SAM 2.1), an upgrade to the company's image and video segmentation tool. This latest iteration offers improved object tracking and an enhanced ability to differentiate between visually similar objects. Since its initial release just 11 weeks ago, SAM 2 has been downloaded over 700,000 times, finding applications in fields ranging from medical imaging to meteorology.

Joelle Pineau, VP of AI Research at Meta, highlighted the importance of these releases: "We are always happy to share our work with a broader community and we will continue in the spirit of collaboration and openness, which I think is so important in terms of advancing AI today."

Another standout offering is Meta Spirit LM, an open-source language model that seamlessly integrates speech and text. This multimodal approach allows for more natural-sounding speech generation and opens up new possibilities for cross-modal AI applications. (Paper, Code, Weights)

For developers working with large language models (LLMs), Meta has introduced Layer Skip. This end-to-end solution promises to accelerate LLM generation times without the need for specialized hardware, potentially making these powerful tools more accessible and cost-effective. (Paper, Code, Weights)

In the field of cybersecurity, SALSA provides researchers with new code to benchmark AI-based attacks on cryptographic systems. This work is crucial for validating the security of post-quantum cryptography standards and staying ahead of potential threats. (Paper, Code)

Meta Lingua, a lightweight codebase for training language models at scale, aims to streamline the research process. Its efficient and customizable design allows researchers to quickly test new ideas with minimal setup. (Code)

The company is also making strides in materials science with Meta Open Materials 2024, a dataset and model package that could accelerate the discovery of new inorganic materials. This open-source offering competes with the best proprietary models in the field. (Code, Models, Dataset)

A significant addition in this release is the Self-Taught Evaluator, a novel method for generating synthetic preference data to train reward models without relying on human annotations. This approach uses an LLM-as-a-Judge to produce reasoning traces and final judgments, with an iterative self-improvement scheme. The resulting model outperforms larger models and those using human-annotated labels on various benchmarks, while being significantly faster than default evaluators. (Paper, Code, Model, Dataset)
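To make the loop concrete, here is a minimal sketch of how such an iterative scheme could be organized. It is an illustration under stated assumptions, not Meta's implementation: the names `Pair`, `Judgment`, `collect_training_examples`, `self_improve`, and `toy_judge` are all hypothetical, the judge is a toy stand-in rather than an LLM, and the fine-tuning step is left as a caller-supplied function. The sketch also assumes each synthetic pair is built so that one response is known to be better, and it keeps only the judgments that agree with that known label.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Pair:
    """A synthetic preference pair (hypothetical structure)."""
    instruction: str
    chosen: str    # response assumed better by construction
    rejected: str  # response assumed worse by construction


@dataclass(frozen=True)
class Judgment:
    reasoning: str  # the judge's reasoning trace
    verdict: str    # "A" or "B"


# A judge maps (instruction, response_a, response_b, sample_index) to a Judgment.
Judge = Callable[[str, str, str, int], Judgment]
Example = Tuple[Pair, Judgment]


def collect_training_examples(
    judge: Judge, pairs: List[Pair], samples_per_pair: int
) -> List[Example]:
    """Sample judgments and keep one per pair whose verdict matches the known label."""
    kept: List[Example] = []
    for pair in pairs:
        for i in range(samples_per_pair):
            # Simplification: the chosen response always sits in slot "A".
            judgment = judge(pair.instruction, pair.chosen, pair.rejected, i)
            if judgment.verdict == "A":
                kept.append((pair, judgment))
                break  # one correct reasoning trace per pair is enough here
    return kept


def self_improve(
    judge: Judge,
    fine_tune: Callable[[Judge, List[Example]], Judge],
    pairs: List[Pair],
    iterations: int,
    samples_per_pair: int = 4,
) -> Judge:
    """Repeat: judge the pairs, filter correct judgments, train the next judge."""
    for _ in range(iterations):
        examples = collect_training_examples(judge, pairs, samples_per_pair)
        judge = fine_tune(judge, examples)  # placeholder for real training
    return judge


def toy_judge(instruction: str, a: str, b: str, sample_index: int) -> Judgment:
    """Toy stand-in for an LLM judge: prefers the longer response."""
    verdict = "A" if len(a) >= len(b) else "B"
    return Judgment(reasoning=f"sample {sample_index}: compared lengths", verdict=verdict)
```

In a real system the judge would be an LLM prompted to write out its reasoning before a verdict, and response order would typically be randomized to avoid position bias; both are omitted here to keep the sketch short.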

Rounding out the release is MEXMA, a cross-lingual sentence encoder covering 80 languages, which combines token- and sentence-level objectives during training for improved performance. (Paper, Code, Model)

This release from Meta is a goldmine for AI researchers and developers. It underscores Meta's commitment to open science and its belief that widespread access to cutting-edge AI technologies can drive innovation and benefit society as a whole.