AMD recently unveiled its latest data center accelerators, the AMD Instinct MI300 Series, designed to deliver breakthrough performance for demanding AI and high performance computing (HPC) workloads.

The new MI300 series includes three offerings: the MI300X Accelerator designed for generative AI workloads and HPC applications, the MI300X Platform optimized for massive multi-GPU AI deployments, and the MI300A APU tailored for advanced HPC workloads.

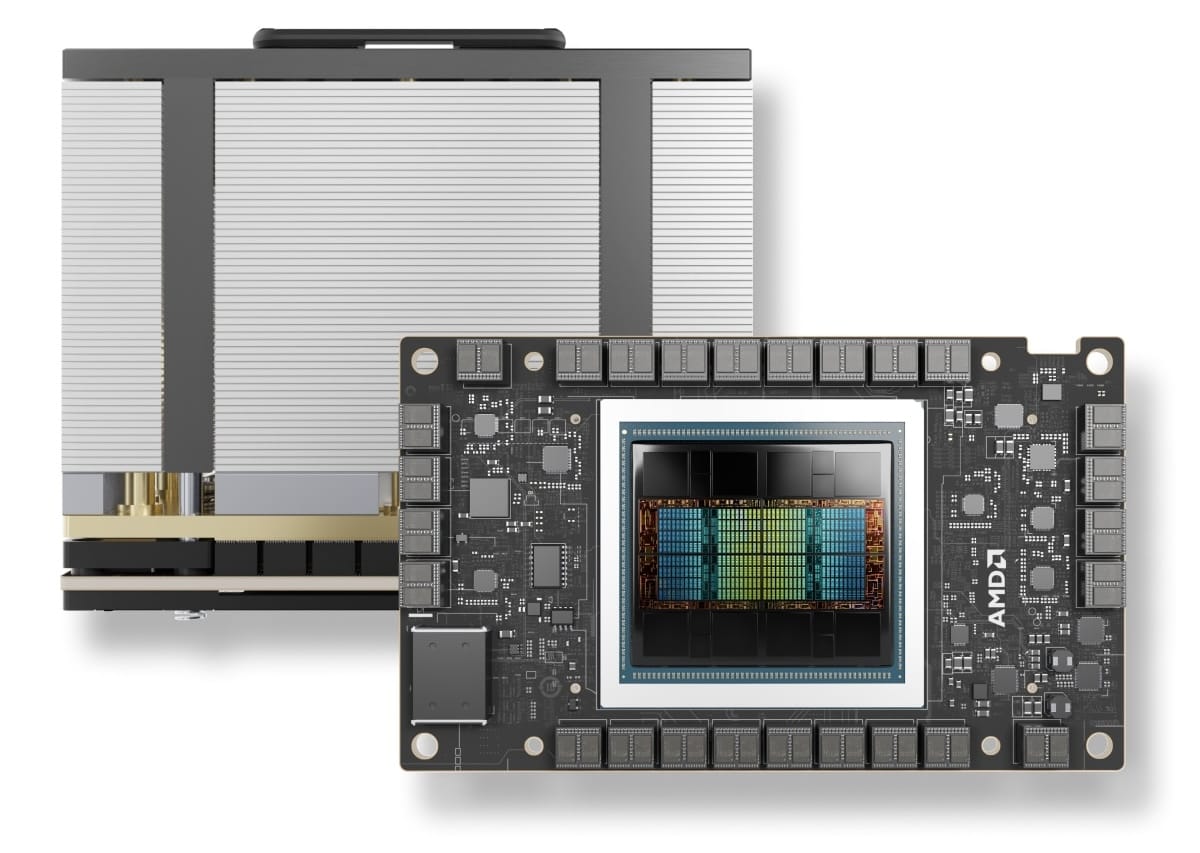

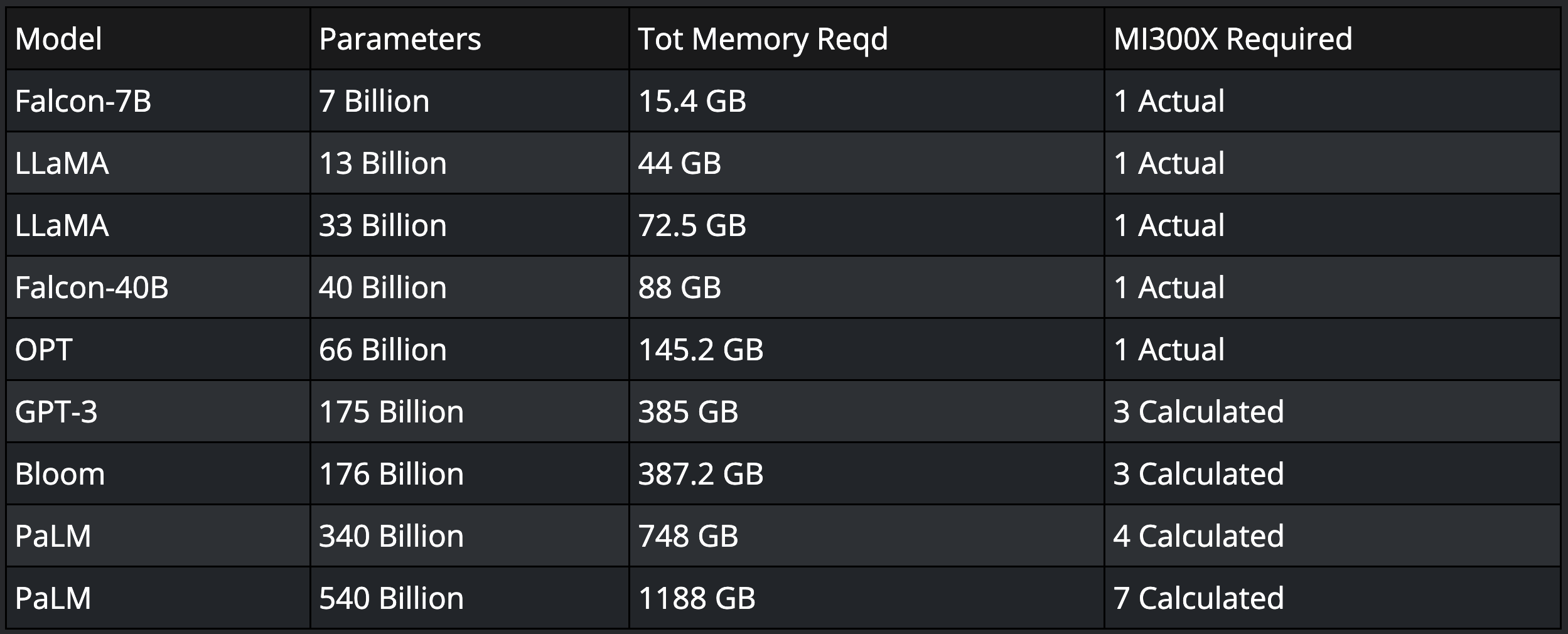

The AMD Instinct MI300X Accelerator pairs up to 192GB of HBM3 memory with what AMD describes as leadership performance for large language model training and inference.

For enterprises needing to scale out across even more GPUs and memory, the AMD Instinct MI300X Platform integrates 8 fully connected MI300X GPU modules.

In its full configuration, it provides a staggering 1.5TB total HBM3 memory capacity and up to 42.4TB/s aggregate memory bandwidth for running the largest AI models.
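The platform totals follow directly from the per-accelerator figures quoted in this article (192GB of HBM3 and up to 5.3TB/s per MI300X). A minimal sketch of that arithmetic, assuming simple linear scaling across the eight GPUs; the function and constant names are illustrative only:

```python
# Per-accelerator figures as quoted in the article for the MI300X.
GPUS_PER_PLATFORM = 8
HBM3_PER_GPU_GB = 192
PEAK_BANDWIDTH_PER_GPU_TBS = 5.3


def platform_totals(num_gpus=GPUS_PER_PLATFORM,
                    mem_per_gpu_gb=HBM3_PER_GPU_GB,
                    bw_per_gpu_tbs=PEAK_BANDWIDTH_PER_GPU_TBS):
    """Return (total memory in GB, aggregate peak bandwidth in TB/s).

    Assumes capacity and peak bandwidth simply add up across GPUs.
    """
    return num_gpus * mem_per_gpu_gb, num_gpus * bw_per_gpu_tbs


total_mem_gb, total_bw_tbs = platform_totals()
print(f"Total HBM3: {total_mem_gb} GB (~{total_mem_gb / 1024:.1f} TB)")
print(f"Aggregate peak bandwidth: {total_bw_tbs:.1f} TB/s")
```

These are peak theoretical sums; the bandwidth an application actually sees depends on how the workload is spread across the GPUs.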

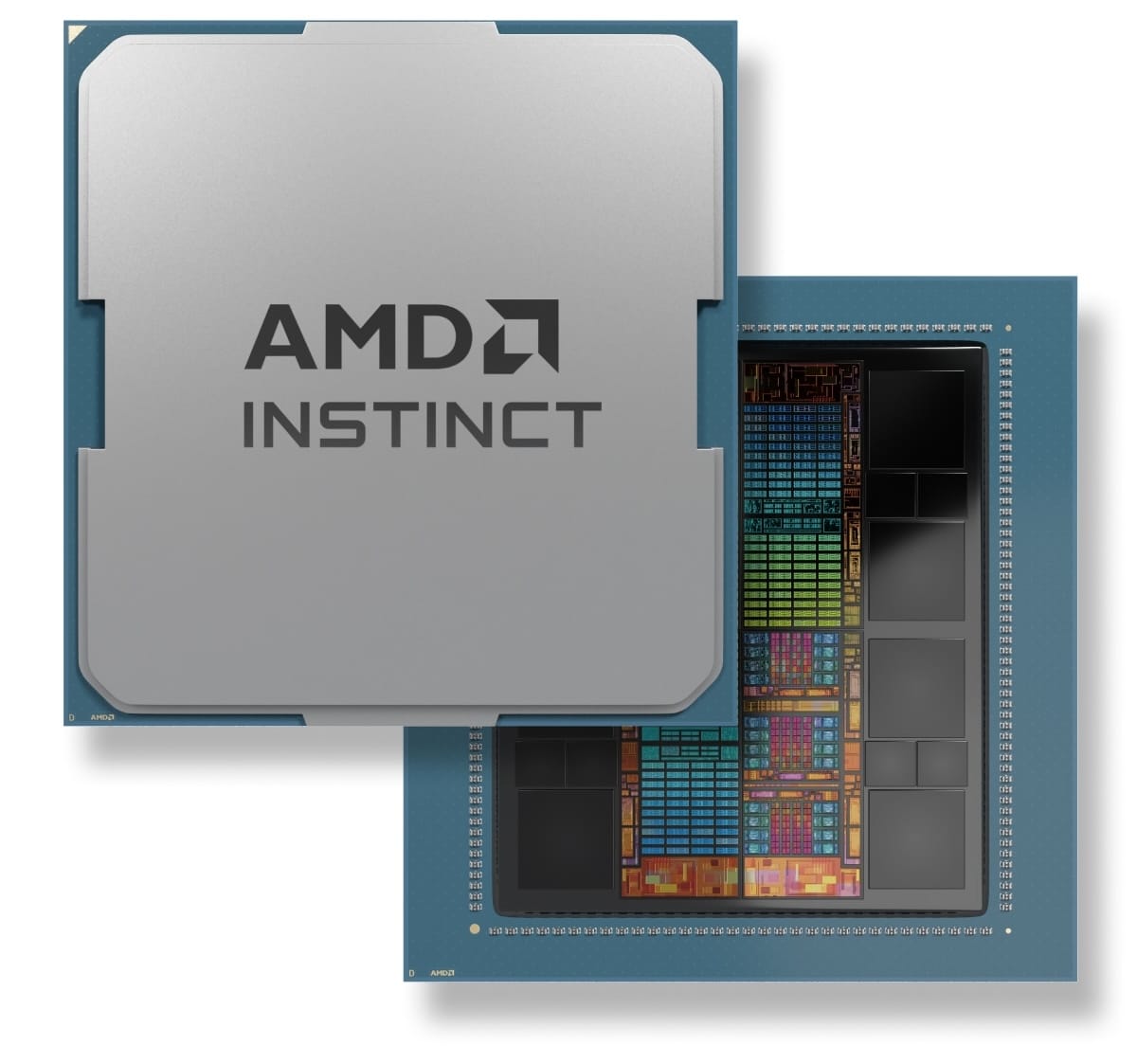

Finally, the AMD Instinct MI300A APU combines the latest CDNA 3 GPU architecture and Zen 4 CPU cores in a single advanced package. This provides strong double-precision performance and a unified programming model suited to compute-intensive HPC problems involving large datasets.

The MI300X Accelerator is driven by the need for more memory capacity and bandwidth to run the ever-larger AI models being developed. Compared to the previous-generation AMD Instinct MI250X, the MI300X offers nearly 40% more compute units, 1.5x the memory capacity, and 1.7x the peak memory bandwidth. The top-end configuration packs 192GB of HBM3 memory delivering up to 5.3TB/s of bandwidth to feed demanding matrix processing workloads.
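The generational ratios can be sanity-checked with a few divisions. The sketch below takes the MI300X memory and bandwidth figures from this article; the MI250X figures (220 compute units, 128GB, 3.2TB/s) and the MI300X compute unit count (304) are not stated here and are assumptions drawn from AMD's published specifications:

```python
# Assumed spec-sheet values; only the MI300X memory and bandwidth
# figures appear in the article itself.
MI250X = {"compute_units": 220, "memory_gb": 128, "bandwidth_tbs": 3.2}
MI300X = {"compute_units": 304, "memory_gb": 192, "bandwidth_tbs": 5.3}


def generational_ratios(new, old):
    """Return new/old ratios for every spec present in both dicts."""
    return {key: new[key] / old[key] for key in old if key in new}


ratios = generational_ratios(MI300X, MI250X)
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x")
```

Under these assumptions the compute unit ratio comes out just under 1.4x, the memory ratio is exactly 1.5x, and the bandwidth ratio rounds to 1.7x, consistent with the quoted claims.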

Compared to the Nvidia H100 HGX platform, AMD claims the Instinct MI300X Platform can offer up to 1.6x higher throughput for large language model inference workloads like BLOOM 176B. AMD also says the MI300X is the only accelerator that can run inference on a 70B parameter model within a single GPU. According to AMD, this simplifies deployments and lowers total cost of ownership for enterprise-scale AI.
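A rough way to see why memory capacity matters here is to estimate the size of the model weights alone. The sketch below assumes 16-bit weights (2 bytes per parameter) and decimal gigabytes, and it deliberately ignores the KV cache, activations, and runtime overhead, so it gives a lower bound rather than a deployment guide; the helper names are hypothetical:

```python
def weights_gb(params_billions, bytes_per_param=2):
    """Approximate weight footprint in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives GB directly.
    Assumes FP16/BF16 weights by default; excludes KV cache and activations.
    """
    return params_billions * bytes_per_param


def weights_fit(params_billions, capacity_gb, bytes_per_param=2):
    """True if the weights alone fit within the given memory capacity."""
    return weights_gb(params_billions, bytes_per_param) <= capacity_gb


SINGLE_MI300X_GB = 192          # per-GPU HBM3, as quoted in the article
MI300X_PLATFORM_GB = 8 * 192    # eight-GPU platform total

for name, size in [("70B model", 70), ("BLOOM 176B", 176)]:
    print(name, weights_gb(size), "GB of weights;",
          "fits on one GPU:", weights_fit(size, SINGLE_MI300X_GB),
          "| fits on platform:", weights_fit(size, MI300X_PLATFORM_GB))
```

By this estimate a 70B parameter model needs about 140GB for its weights, which fits within 192GB, while a 176B parameter model at the same precision has to be split across several GPUs.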

While not aimed at the largest AI models, the MI300A APU is purpose-built for crunching big HPC datasets. It combines CDNA 3 GPU chiplets and latest-generation Zen 4 CPU chiplets in a single advanced package, enabling tight integration and a unified memory pool. This provides the strong double-precision floating-point throughput required by many traditional HPC applications.

The flexible architecture allows configuring different ratios of CPUs and GPUs. The high-end MI300A model announced integrates 24 Zen 4 cores with 228 CDNA 3 GPU compute units and pairs them with 128GB of unified HBM3 memory, somewhat less than the 192GB on the MI300X Accelerator. AMD quotes up to 1.9x better performance per watt over prior-generation MI250X accelerators for FP32 workloads.

Software plays a key role in realizing the potential of new hardware. AMD announced ROCm 6, the latest version of its open-source software platform for programming AMD GPUs. For AI workloads, AMD claims it can deliver up to 8x higher performance on MI300 accelerators compared with the previous generation of hardware and software. ROCm 6 also adds support for new features critical to large language models and other generative AI workloads.

AMD says it already has strong adoption for the new Instinct lineup from major cloud providers like Microsoft Azure and Oracle Cloud. Both plan to offer MI300X accelerator-based instances. The MI300A APU will power the 2-exaflop El Capitan supercomputer being built at Lawrence Livermore National Laboratory. Major OEMs like Dell, HPE, Supermicro, and Lenovo also announced servers built on the new AMD offerings.

Ultimately, AMD's new accelerators are not just hardware upgrades; they are enablers of future technological advancements and tools for solving some of the most pressing challenges of our time. As AI and HPC applications continue to grow and evolve, these innovations ensure that the computational power needed to fuel this growth is not just available but also leaps ahead in efficiency and capability.