Large language models (LLMs) and other generative AI tools promise to transform how companies do business. However, many organizations are making crucial mistakes in their AI product strategies. The root cause is that hype around AI's potential is obscuring the hard work required to implement it successfully.

Companies must focus on user needs, not technology hype, when building AI solutions. Here are 5 things they are getting wrong:

1. Focusing on the Technology, Not the Experience

The most common mistake companies offering AI solutions make is focusing too much on the technology itself and not enough on the value it delivers. The underlying technology matters, but users don't care about it for its own sake. They care about the benefits it provides: time saved, productivity gained, convenience increased.

To see this in action, look back at Apple's annual Worldwide Developers Conference (WWDC), held earlier this month. Unlike most of its competitors, Apple did not make AI the star of the show; in fact, it barely mentioned AI at all. Instead, it highlighted the user benefits of its new products, not the sophistication of the technology powering them.

Contrast this with Google's I/O conference, held a month earlier, where AI took center stage, as a supercut from The Verge made plain.

Both companies are pioneers in AI, yet they present their innovations to the world in starkly different ways.

You see, Apple excels at abstracting the technology away from its customers in favor of simplicity and utility.

We've seen this story before, several times in fact.

The original "Say Hello to iMac" campaign contrasted the complexity of a PC with the simplicity of a Mac. Similarly, with the iPhone, Apple did not dwell on megapixels and memory; it focused on the experience and the value it delivered: capturing great photos and keeping apps as responsive as possible.

Early movers in AI risk repeating the mistake pioneers in other categories made, leading with specifications rather than benefits. They would do well to learn from Apple's playbook: prioritize the user experience, highlight the benefits, and stop talking so much about the technology.

2. Mystifying LLMs

Imagine traveling back in time to the early nineteenth century armed with an iPhone, still connected to today's data network and infrastructure. Anyone seeing you use it would surely think you were a sorcerer. The device in your pocket would be the most powerful computing machine known to mankind. You could predict the weather, perform advanced calculations, read any book, play any song, look up any information you wanted, and much more. What's more, the device would unlock when you looked at it and talk back when spoken to. Imagine the awe this would inspire.

"Any sufficiently advanced technology is indistinguishable from magic." (Arthur C. Clarke's Third Law)

Today, as we witness LLMs in action, we can't help but feel a similar sense of marvel. The recent explosion of AI capabilities, from generating realistic images and text to composing music, can indeed feel otherworldly.

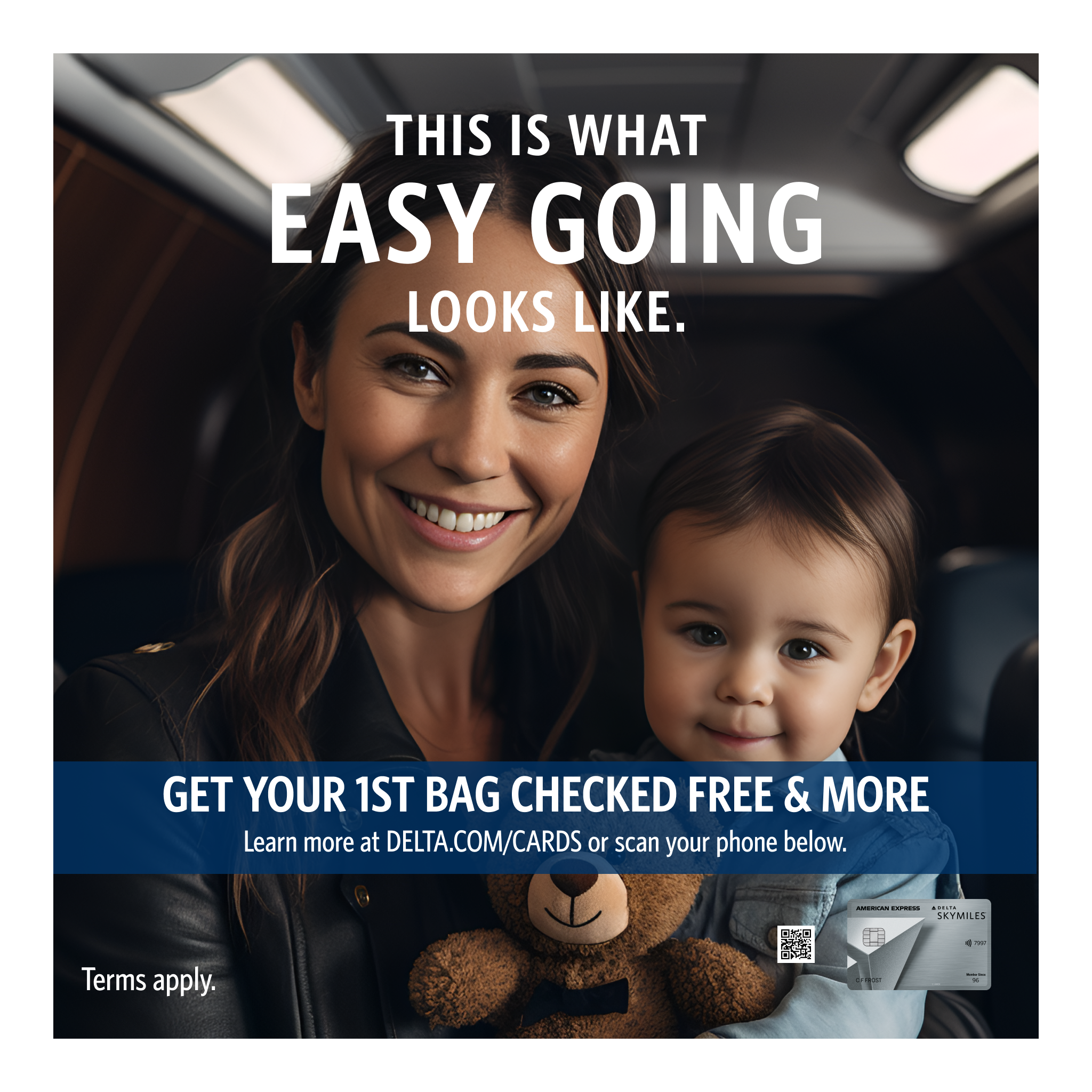

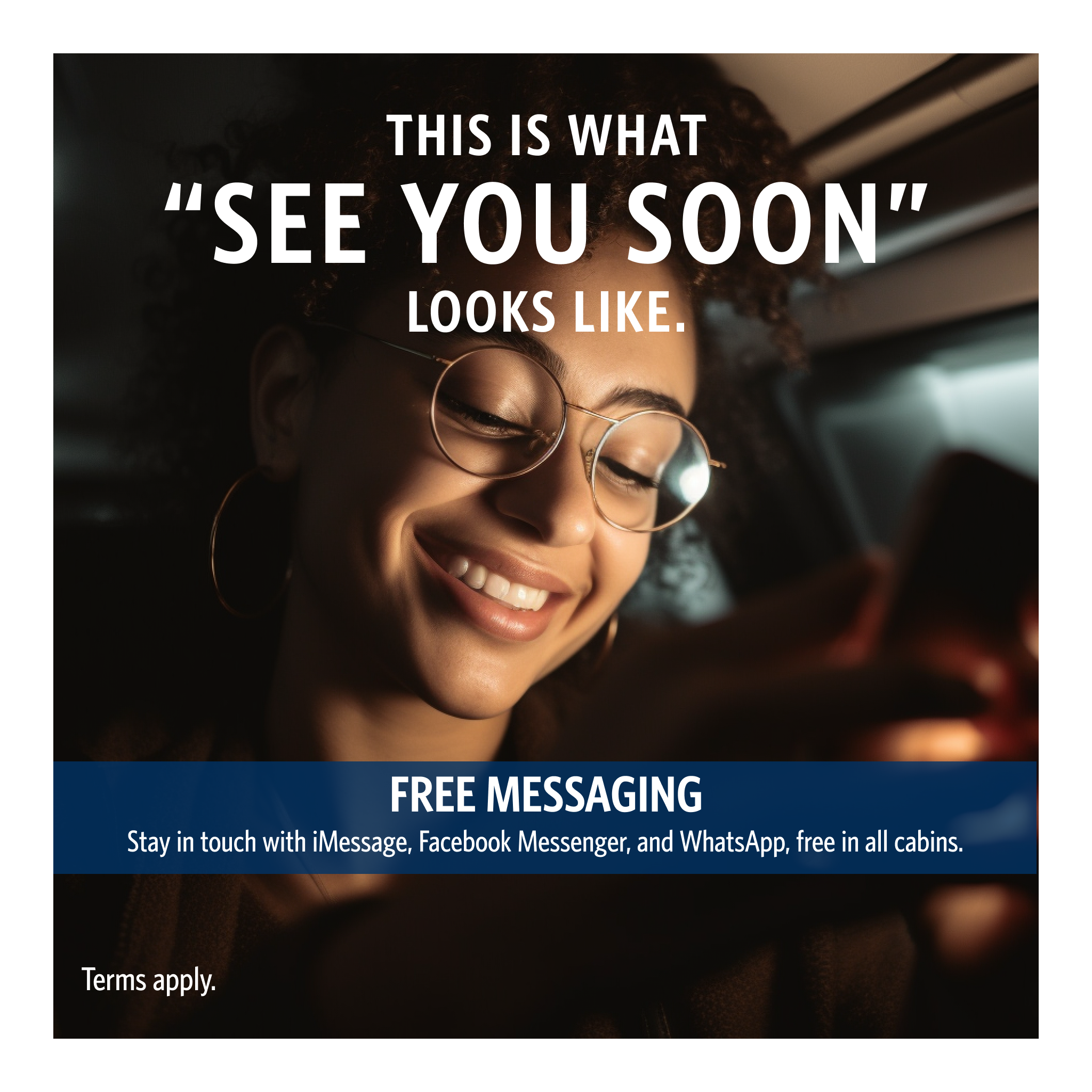

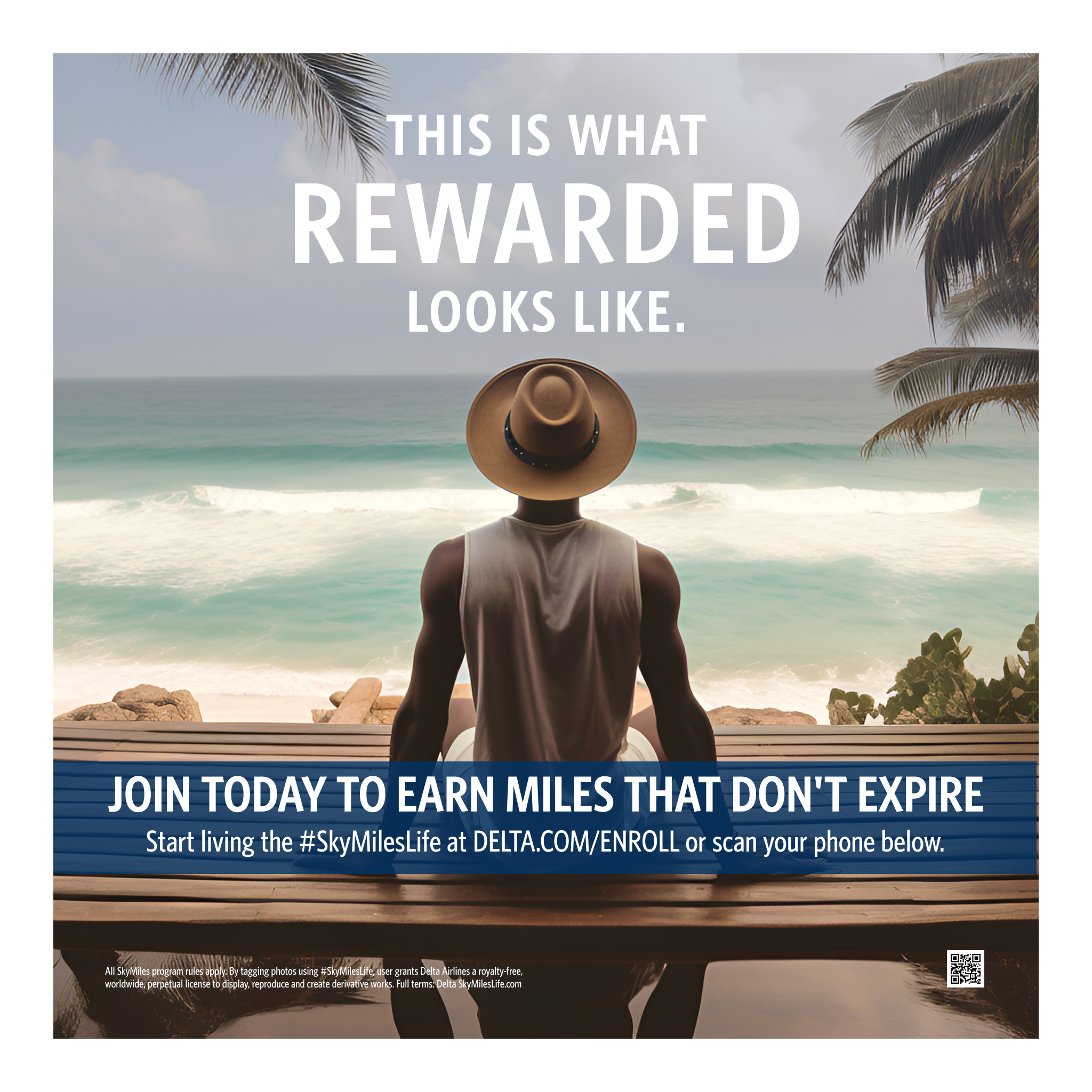

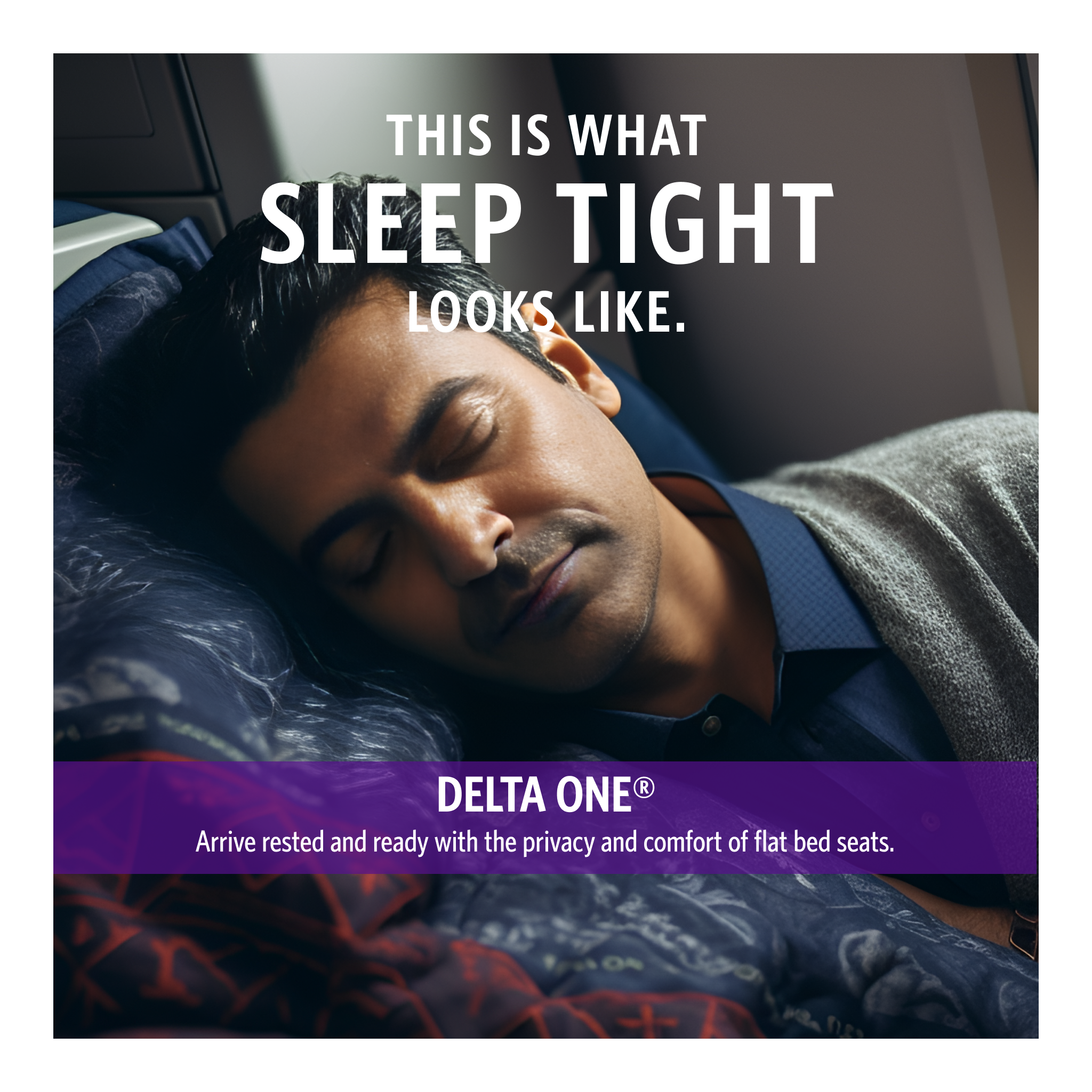

Above: images from our AI recreation of a Delta ad campaign.

Consider tools like Midjourney, which can create photorealistic images and art in any style from simple descriptions, or OpenAI's ChatGPT, which can generate human-like text with uncanny fidelity and nuance. Put simply, they are amazing, and given their capabilities, this sense of wonder is not misplaced.

Yet it's important to underscore that AI, in all its forms, is just technology. It is the product of intricate algorithms, vast amounts of training data, and years of meticulous research and development, all running on advanced computing hardware. It's not magic; it's the fruit of human ingenuity and the relentless pursuit of knowledge.

Many companies, in their fervor to adopt AI, perpetuate the mystique surrounding it, which only creates unrealistic expectations and misunderstandings. Treating LLMs as a mysterious, silver-bullet solution obscures their real benefits and potential drawbacks, leading to misguided implementation strategies and missed opportunities.

Instead, businesses should aim for transparency, educating their stakeholders about what AI can and can't do, and how it can truly drive value in their specific context.

3. Rushing to Market and Announcing Vaporware

"Sign up for beta access." This call to action has become all too familiar as companies tease their upcoming AI products, and it is often a red flag for vaporware. Hoping to capitalize on the success of ChatGPT, some companies (you know who you are) are prematurely announcing AI products or features that are still in the earliest stages of development.

The early hype, while momentarily exciting, is a double-edged sword. It raises expectations, and if those expectations aren't met (because promised features fail to materialize or are drastically scaled back), the blow to a company's credibility and reputation can be significant. Vaporware doesn't merely disappoint customers; it undermines trust, a crucial element of long-term business success.

The temptation to rush to market, particularly in a domain as dynamic as AI, is understandable. The competitive stakes are high, and the pressure to claim first-mover advantage is intense. Still, a little perspective goes a long way. It's easy to forget that ChatGPT was released only seven months ago, and its impact on the AI landscape has already been seismic. The field is young, and there is still time to get things right.

Rather than rushing to announce features or products still in their infancy, businesses should focus on developing a realistic product roadmap. This plan should include comprehensive testing and validation stages to ensure that when the product is finally unveiled, it lives up to its promise. Additionally, companies should prioritize AI training and education.

It's not about who announces first or even who has the best technology, but about who delivers reliable, valuable, and credible solutions. Patience, prudence, and a steadfast commitment to delivering on promises should be the guiding principles in this journey.

4. Underinvesting in Design

User-centered design principles are the bedrock of any good product, and AI solutions are no exception. In the sprint to get to market faster, design is getting sidelined. Companies must remember that while AI applications might be robust and complex in the back end, they need to be simple, intuitive, and user-friendly in the front end. Neglecting this can lead to user frustration, low adoption rates, or even outright rejection of the technology, thus undermining the whole AI investment.

Generative AI interfaces pose a unique challenge because they must mediate communication between humans and machines. For instance, how does the system provide feedback? How do you keep humans in control? When should the system override user input, and when should it simply notify the user? How do you make it accessible to all users? These design decisions can make the difference between a useful tool and a source of frustration.

To better appreciate the balance required when designing AI interfaces, let's look at some simple yet familiar tools that we interact with daily: spell check, autocorrect, and predictive text.

Spell check, a passive system, underlines misspelled words, signaling to users that they should make corrections. Autocorrect, on the other hand, is an active system that automatically fixes what it perceives as errors. Because it can misread the user's intent, however, it often produces amusing or frustrating mistakes. Predictive text, or autosuggest, offers suggestions without enforcing them, giving users control while still providing assistance.

A key feature of autocorrect—and a critical aspect of AI interface design—is the option to undo the automatic correction. This simple design choice respects user agency, allowing them to override the AI when it misinterprets their intent. It's a subtle but crucial reminder that the technology is there to assist, not dictate.

These simple tools show the importance of nuanced design in AI systems. There is no one-size-fits-all approach; the choice between passive, active, or suggestive systems depends on the specific use case and on user preferences.
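To make the three patterns concrete, here is a minimal, hypothetical Python sketch. The toy word lists and the names `CORRECTIONS`, `VOCABULARY`, `spell_check`, `suggest`, and `AutocorrectEditor` are illustrative assumptions, not how any real keyboard or word processor works. Spell check only flags words, predictive text only offers candidates, and autocorrect changes the text but records each change so the user can undo it.

```python
from dataclasses import dataclass, field

# Hypothetical toy data; a real product would rely on a language model.
CORRECTIONS = {"teh": "the", "recieve": "receive", "adn": "and"}
VOCABULARY = set(CORRECTIONS.values()) | {"thank", "thanks", "then", "there", "hello"}


def spell_check(words: list[str]) -> list[int]:
    """Passive: return the indices of words to underline; the text is untouched."""
    return [i for i, w in enumerate(words) if w in CORRECTIONS]


def suggest(prefix: str, limit: int = 3) -> list[str]:
    """Suggestive: offer completions; the user decides whether to accept one."""
    return sorted(w for w in VOCABULARY if w.startswith(prefix))[:limit]


@dataclass
class AutocorrectEditor:
    """Active: fixes words automatically but keeps a history for undo."""
    words: list[str] = field(default_factory=list)
    history: list[tuple[int, str]] = field(default_factory=list)

    def type_word(self, word: str) -> None:
        fixed = CORRECTIONS.get(word, word)
        if fixed != word:
            # Remember the original word so the user can override the correction.
            self.history.append((len(self.words), word))
        self.words.append(fixed)

    def undo_last_correction(self) -> None:
        # Restore the most recently corrected word, if there is one.
        if self.history:
            index, original = self.history.pop()
            self.words[index] = original


if __name__ == "__main__":
    editor = AutocorrectEditor()
    for w in ["teh", "cat"]:
        editor.type_word(w)
    print(editor.words)  # ['the', 'cat']
    editor.undo_last_correction()
    print(editor.words)  # ['teh', 'cat']
```

The correction history is what makes the active mode tolerable: the system may act on the user's behalf, but the user keeps the final say.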

Companies should consider design as an integral part of their AI strategy, not as an afterthought. Investing in thoughtful UX/UI design is crucial in driving AI adoption and ensuring that users can reap the full benefits of the technology.

P.S. Avoid the trap of thinking every generative AI solution needs a chat interface like ChatGPT's. Chat interfaces can effectively mimic human conversation, but they are not always the best solution. The goal should be to tailor the interface to the use case so that it enhances the user experience rather than complicating it.

5. Mistaking Novelty for Necessity

Lastly, as companies continue to respond to the growing influence of LLMs, they need to step back and scrutinize their motivations for adopting these technologies.

Not all problems require an AI solution. Unfortunately, many organizations are adopting these technologies simply because they are novel, overlooking the key question—is this technology truly necessary or beneficial to our operations?

The dangers of over-engineering cannot be overstated. In an attempt to "stay ahead of the curve", companies may implement complex AI solutions when simpler, more traditional methods could have served the purpose equally or even more effectively. Over-indexing on LLMs can lead to more complicated systems that demand significant resources to manage and may fail to deliver the expected ROI.

The right solution isn't always the most sophisticated one. Instead of getting swept up in the novelty of LLMs, companies should consider their specific needs, available resources, and the end-user experience. A well-chosen, simpler solution could meet their requirements more efficiently, be less demanding in terms of resources, and result in a better user experience.

At Maginative, we are decidedly optimistic about AI and convinced of its game-changing potential to transform products, services, and many other aspects of business. However, we recognize that a mindful approach to building or adopting AI solutions can mean the difference between effective innovation and costly missteps.

Misaligned expectations, insufficient understanding of AI's capabilities, lack of appropriate skills and infrastructure, or a poorly designed user experience can all turn an AI initiative into a costly endeavor.

Move fast, but move wisely. Avoid the hype and focus on user needs. Prioritize experience over technology, educate customers, and set realistic expectations about what's possible now and in the years ahead.

Good luck!