Artificial intelligence promises to reshape our world, making the companies that control this technology among the most influential in history. This poses a conundrum: will the development of AI be shared for the benefit of all, or hoarded to further concentrate power? The open-sourcing of AI models provides a window into how this tension is playing out.

Big tech companies like Google, Microsoft, and Meta are making major contributions to open-source AI models, accelerating innovation while pursuing competitive advantage. Hosted on platforms like Hugging Face, these models enable a global developer ecosystem to build upon cutting-edge research.

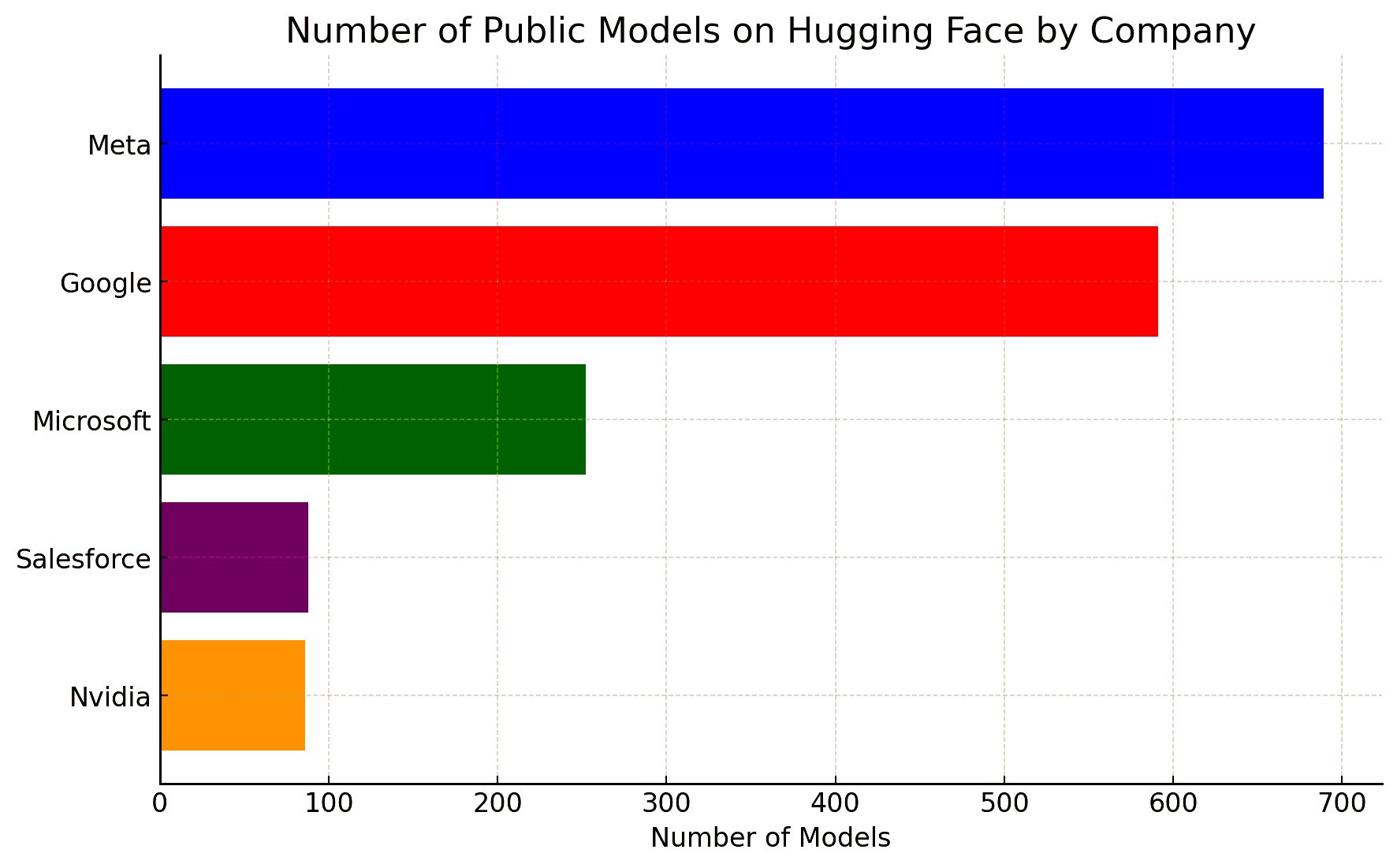

A recent tweet by Clement Delangue, CEO of Hugging Face, highlighted the growing number of open-source models that tech giants have released on the platform.

- Meta: 689 including MusicGen, Galactica, Wav2Vec, RoBERTa

- Google: 591 including BERT, Flan, T5, MobileNet

- Microsoft: 252 including DialoGPT, BioGPT, LayoutLM, UniLM, DeBERTa

- Salesforce: 88 including CodeGen, BLIP

- NVIDIA: 86 including Megatron, SegFormer

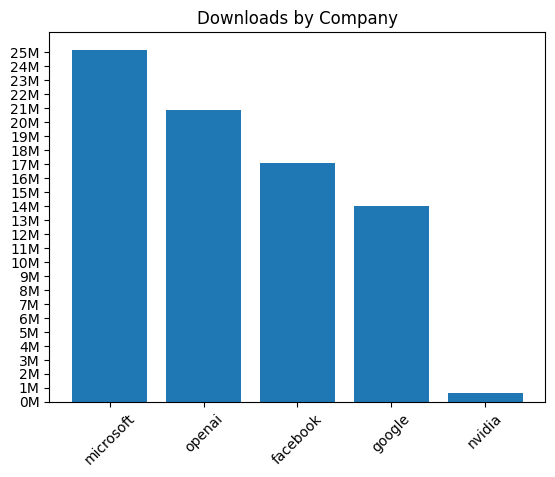

However, raw model counts don't tell the whole story. For instance, the 252 models from Microsoft account for 25 million downloads, significantly outpacing Meta and Google. In fact, OpenAI, with just 30 models, has garnered over 20 million downloads. This disparity sheds light on the differing value and utility of each company's offerings.
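To make the gap concrete, the short sketch below divides the download totals quoted above by the model counts. It uses only the two rounded figures cited in this article (the OpenAI total is stated as "over 20 million", so its average is a lower bound), and the names `publishers` and `downloads_per_model` are illustrative rather than taken from any Hugging Face tooling.

```python
# Figures quoted in the paragraph above; rounded, so results are approximate.
publishers = {
    "Microsoft": {"models": 252, "downloads": 25_000_000},
    "OpenAI": {"models": 30, "downloads": 20_000_000},  # "over 20 million"
}


def downloads_per_model(stats):
    """Return the average number of downloads per published model."""
    return stats["downloads"] / stats["models"]


averages = {name: downloads_per_model(s) for name, s in publishers.items()}

for name, avg in averages.items():
    print(f"{name}: ~{avg:,.0f} downloads per model")

# How many times larger OpenAI's per-model average is than Microsoft's.
ratio = averages["OpenAI"] / averages["Microsoft"]
print(f"OpenAI's per-model average is ~{ratio:.1f}x Microsoft's")
```

On these numbers, an average OpenAI model is downloaded several times more often than an average Microsoft model, even though Microsoft publishes far more of them. An average hides how unevenly downloads are spread across individual models, so it is best read as a rough indicator.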

One might ask why these companies, known for their guarded trade secrets and intense competition, are so willing to contribute to open-source AI. The answer lies in the philosophy that fuels open-source software development. When companies open-source their AI models, they invite a global community of developers to use, modify, and improve upon them. This stress-tests the models across a myriad of use cases and applications, making them more robust and more widely useful.

The decision to open-source remains a hotly debated topic among safety experts and AI practitioners. Proponents argue that open-sourcing accelerates innovation, allowing collective advancement instead of guarded secrecy.

Meta, for example, made waves in February with its release of LLaMA. While LLaMA was released under a research license, Meta provided enough information to enable developers to replicate the model for use in commercial applications. Researchers have since used it to develop many of today's leading open-source LLMs, such as Vicuna, whose capabilities have approached those of proprietary models like GPT-3. Meta reportedly plans to release a fully open-source version of LLaMA that is available for commercial use.

Yet we must acknowledge that the open-sourcing of AI models, while technically empowering, also presents risks if not paired with responsible controls. OpenAI, once a champion of the open-source community, has not released the model weights and essential research for its LLMs since GPT-2. Ilya Sutskever, OpenAI’s co-founder and chief scientist, says the company’s past practice of sharing its research as open-source software was a mistake. Nevertheless, OpenAI is also reportedly exploring how it can provide an open-source model.

The reality is, each open-source contribution by big tech also serves as a strategic chess move in the ongoing AI arms race. By open-sourcing models, companies shape the field to align with their own technical architectures and business interests. They cement reputations as AI leaders, attract talent, and lay the groundwork for future capabilities.

It is naive to consider these open-source contributions as merely a grand act of technological philanthropy.

As we stand on the threshold of a brave new world reshaped by AI, we find ourselves, paradoxically, dependent on the benevolence of these very tech companies. Their willingness to share or withhold technological advancements is shaping not just the field of AI, but potentially our collective future. We cannot take for granted that their interests fully align with the public good.

Fundamentally, we must rethink how power should be distributed in the AI era. Handing influence to those controlling the most valued models could create extraordinary inequalities. Alternatives like decentralized networks, resource allocation, and public oversight could distribute decision-making. The future of AI must be shaped democratically, or we may one day find ourselves beholden to forces beyond our control. The choices we make now will set the trajectory for generations. The stakes could not be higher.

The questions this raises are as profound as they are complex.

- How should we navigate this reality, where a small cluster of companies holds the reins to such transformative technology?

- To what extent can or should we rely on the benevolence of these tech giants?

- Are we comfortable with the notion that our collective AI future may be forged by the strategic interests of a few corporations?

Lastly, there is the question of accountability. As these corporations shape our AI landscape, who holds them accountable for the societal impacts of the technologies they unleash? In an age where AI's influence permeates every aspect of our lives, we need robust mechanisms to ensure that those who wield this power do so responsibly.

Ultimately, we must foster a collective conscience around AI development. The trajectory of its evolution cannot be left solely in the hands of tech giants. The democratic shaping of our AI future is not just desirable; it is a necessity. It is a matter of public concern, requiring scrutiny and active engagement from all of us.