Cohere for AI, the non-profit research lab of Cohere, has released Aya 23, the second iteration of its multilingual large language model (LLM). This state-of-the-art LLM, released as open weights in 8B and 35B parameter sizes, supports 23 languages and outperforms its predecessor, Aya 101.

Aya 23 combines a highly performant pre-trained model from the Command family with the recently released Aya Collection. The result is a powerful multilingual language model that extends state-of-the-art capabilities to languages spoken by nearly half the world's population. Whereas Aya 101 focused on breadth by covering 101 languages, Aya 23 emphasizes depth by allocating more model capacity to fewer languages during pre-training.

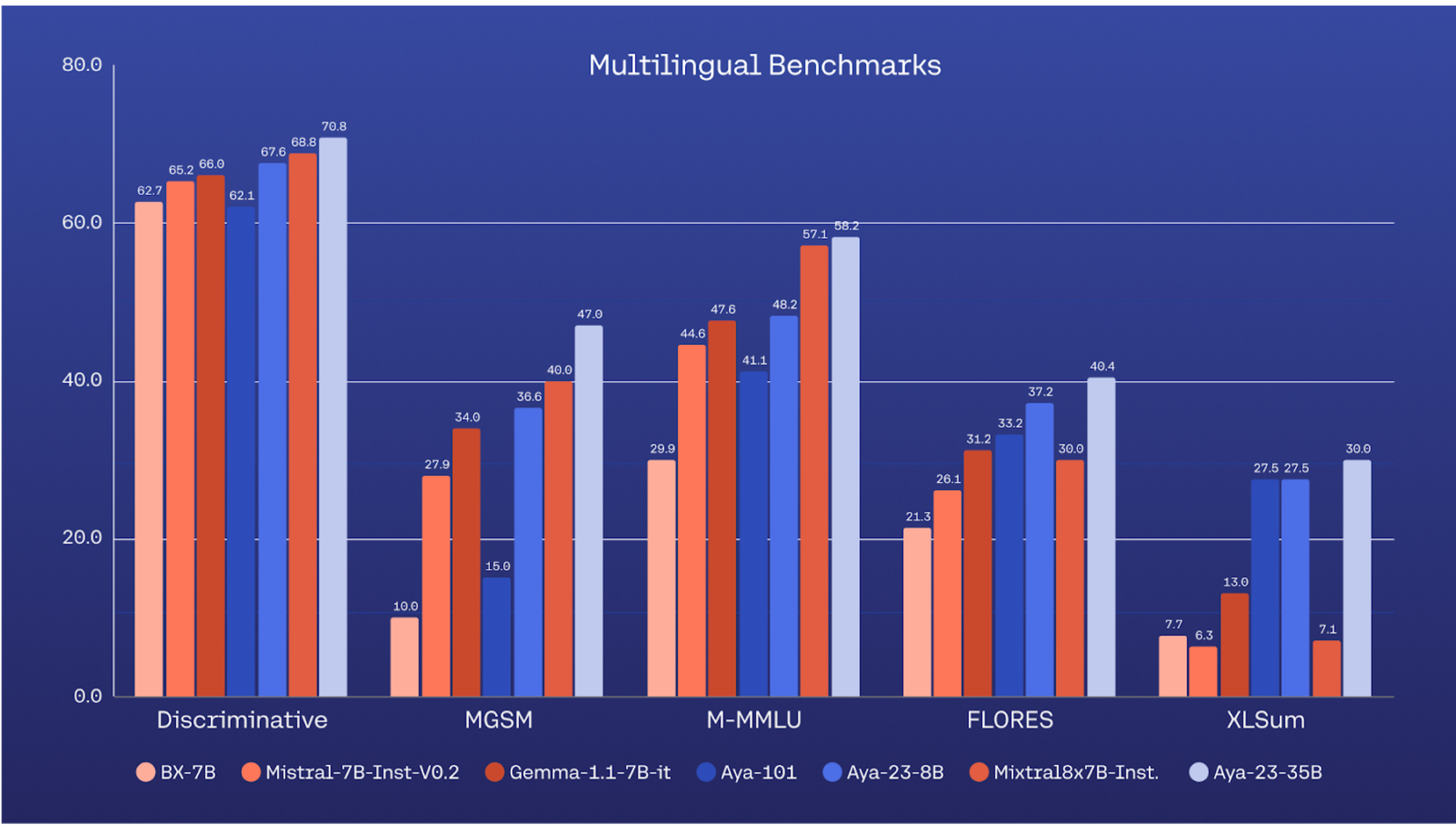

The model outperforms widely used models such as Gemma, Mistral, and Mixtral across a range of discriminative and generative tasks. Notably, the 8B version achieves best-in-class multilingual performance, putting these advances within reach of researchers who work on consumer-grade hardware.

Cohere for AI has released the open weights for both the 8B and 35B models under a CC-BY-NC license. The release is part of the lab's ongoing commitment to broadening access to multilingual research and advancing the state of multilingual AI.

Aya 23 supports 23 languages: Arabic, Chinese (simplified & traditional), Czech, Dutch, English, French, German, Greek, Hebrew, Hindi, Indonesian, Italian, Japanese, Korean, Persian, Polish, Portuguese, Romanian, Russian, Spanish, Turkish, Ukrainian, and Vietnamese. This release is an important step towards treating more languages as first-class citizens in the rapidly evolving field of generative AI.

You can try the 35B model in the Hugging Face space below: