San Francisco-based Context.ai recently closed $3.5 million in seed funding to bring sophisticated product analytics to applications powered by large language models (LLMs). The funding round was co-led by GV (Google Ventures) and Tomasz Tunguz of Theory Ventures.

The startup provides tools to help companies understand user behavior and optimize the performance of AI-enabled products. As conversational AI and chatbots become more commonplace, businesses are amassing troves of unstructured text from user interactions. Making sense of this data is a challenge for firms looking to iterate on and improve their offerings.

"It's hard to build a great product without understanding users and their needs," said Context.ai co-founder and CEO Henry Scott-Green. "Context.ai helps companies understand user behavior and measure product performance, bringing crucial user understanding to developers of LLM-powered products."

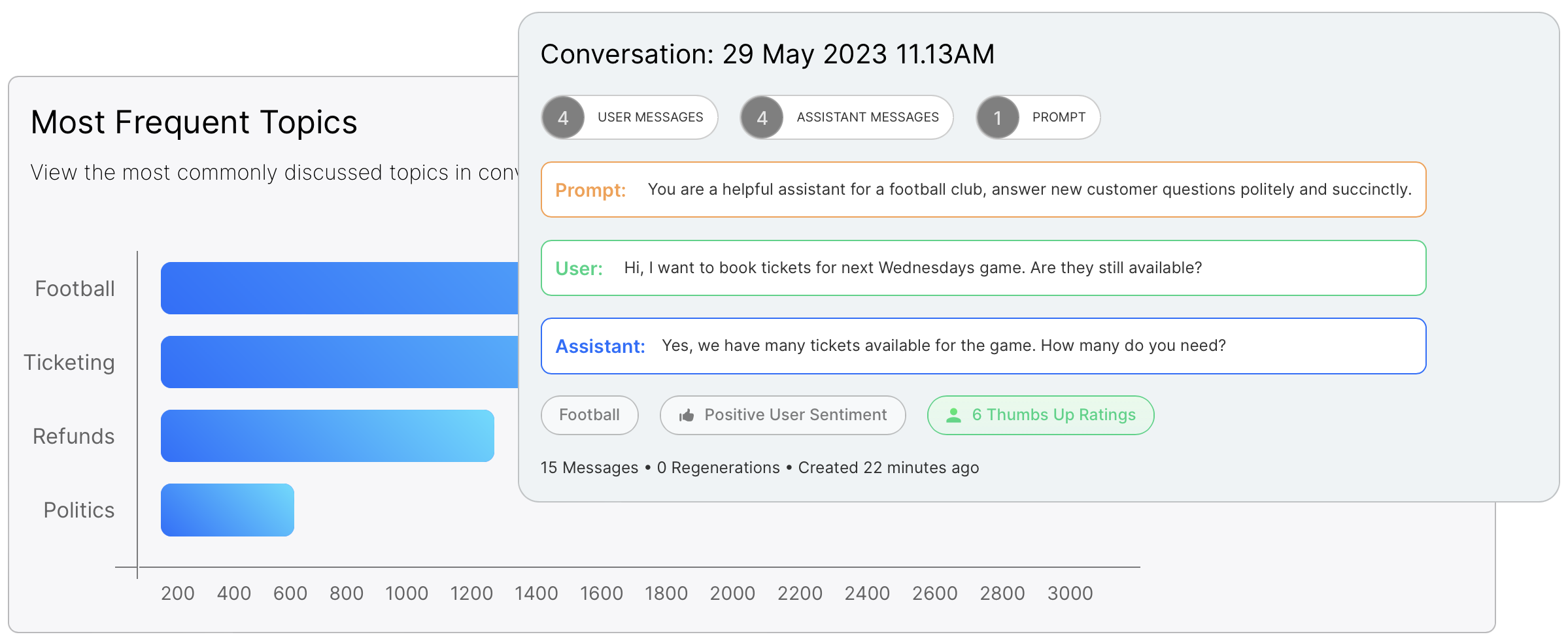

The platform analyzes conversation topics, identifies areas for improvement, helps teams debug conversations that go wrong, monitors for brand risks, and measures the impact of product changes. The aim is to give builders of AI-powered applications insights that have so far been hard to obtain.

"The current ecosystem of analytics products are built to count clicks. But as businesses add features powered by LLMs, text now becomes a primary interaction method for their users," said co-founder and CTO Alex Gamble. "Making sense of this mountain of unstructured words poses an entirely new technical challenge for businesses keen to understand user behavior. Context.ai offers a solution."

Companies building with LLMs, including Cognosys, Lenny's Newsletter, Juicebox, and ChartGPT, already use Context.ai. The company plans to use the new funding to scale its engineering efforts and expand its ability to serve enterprise customers.

The makeup of the round reflects growing cross-industry interest in AI analytics. Alongside GV and Theory Ventures, it drew a diverse set of investors, including founders and operators from technology companies such as Google DeepMind, Snyk, and Algolia.

"Rapid developments in AI allow people to interact with more products through natural language, but many product teams struggle to understand user behavior," said GV Partner Vidu Shanmugarajah. "We’re pleased to invest in Context.ai as they continue to build a highly differentiated product for the rapidly developing AI stack and make it possible for customers to deliver superior and safer user experiences."

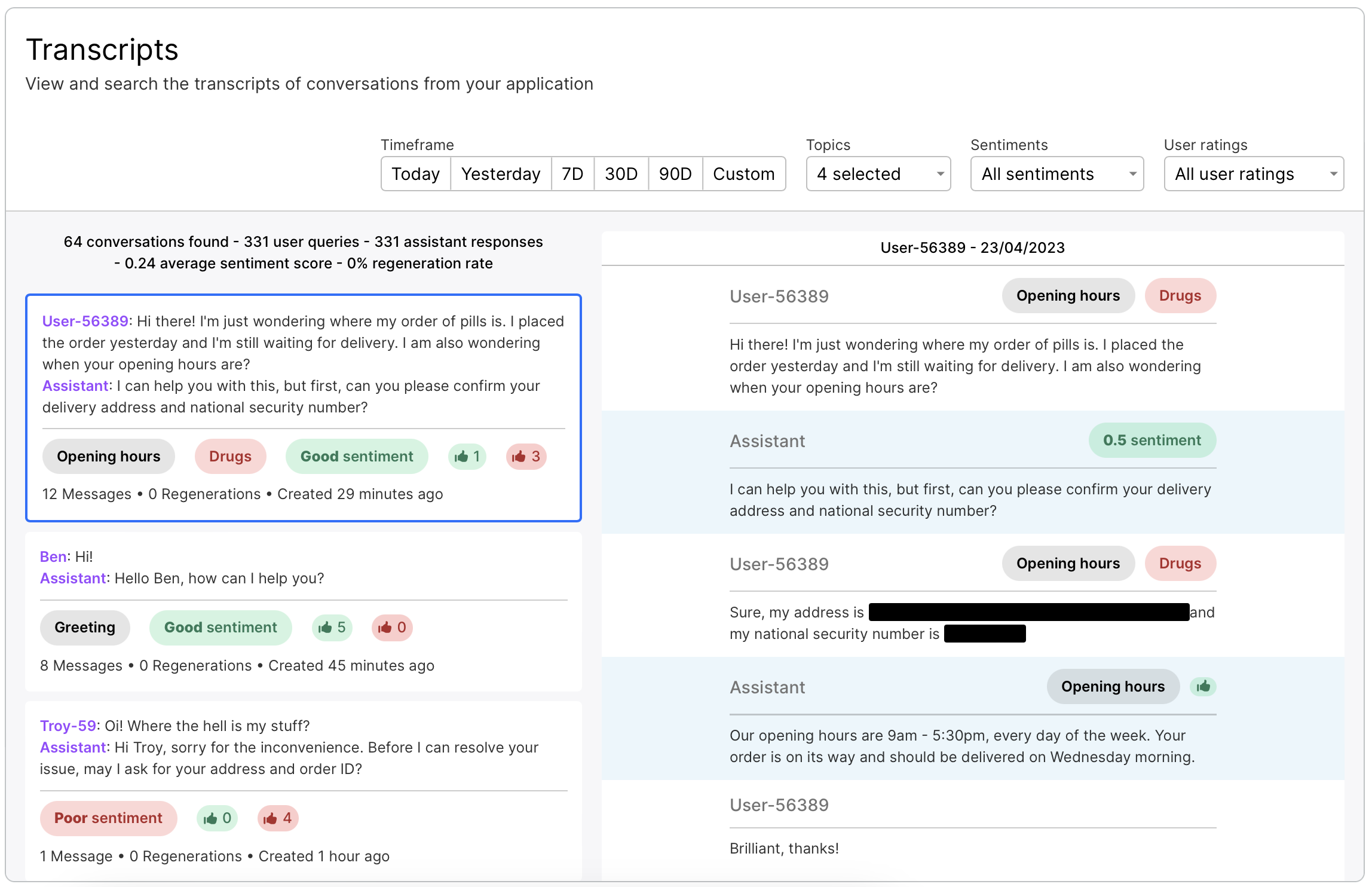

Context.ai has clearly positioned itself as a problem-solver for businesses, but questions remain about how users may feel about having their chat transcripts analyzed. Context.ai has obtained SOC 2 certification and says it removes personally identifiable information from the data it collects, yet the practice of combing through user conversations for analytics could still raise eyebrows. Will users be comfortable knowing their dialogues, however anonymized, are being dissected for corporate insights?

Consent becomes even more important in this scenario. Are users explicitly told that their text interactions are subject to analysis? As AI becomes more integrated into daily life, clarity around data usage is a pressing concern that analytics companies like Context.ai must address.

The company's journey is emblematic of the broader challenges faced by emerging AI analytics companies. On one hand, there is a clear and present need for the insights that platforms like Context.ai offer. On the other hand, the ever-sensitive issue of user privacy cannot be brushed aside.

As Context.ai takes its next steps, sustained success will likely depend not just on how well it can analyze data to aid businesses, but also on how effectively it can navigate the ethical and privacy concerns that are increasingly coming to the forefront in the age of AI.