An advanced version of Gemini with "Deep Think" enabled achieved gold medal performance at the 2025 International Mathematical Olympiad (IMO), solving five out of six brutally difficult problems and earning 35 out of 42 possible points.

Key Points:

- Historic achievement: First AI system to officially achieve gold-medal standard at the IMO, solving problems end-to-end in natural language within the 4.5-hour time limit

- Massive improvement: Google's systems jumped from last year's silver-medal performance (28 points) to gold-medal level (35 points) in just 12 months

- Technical breakthrough: Used "parallel thinking" to explore multiple solution paths simultaneously and, unlike last year's systems, needed no manual translation of problems into a formal language

Since 1959, the IMO has been the proving ground where the world's most gifted teenage mathematicians tackle problems so challenging that even brilliant students train for thousands of hours just to compete.

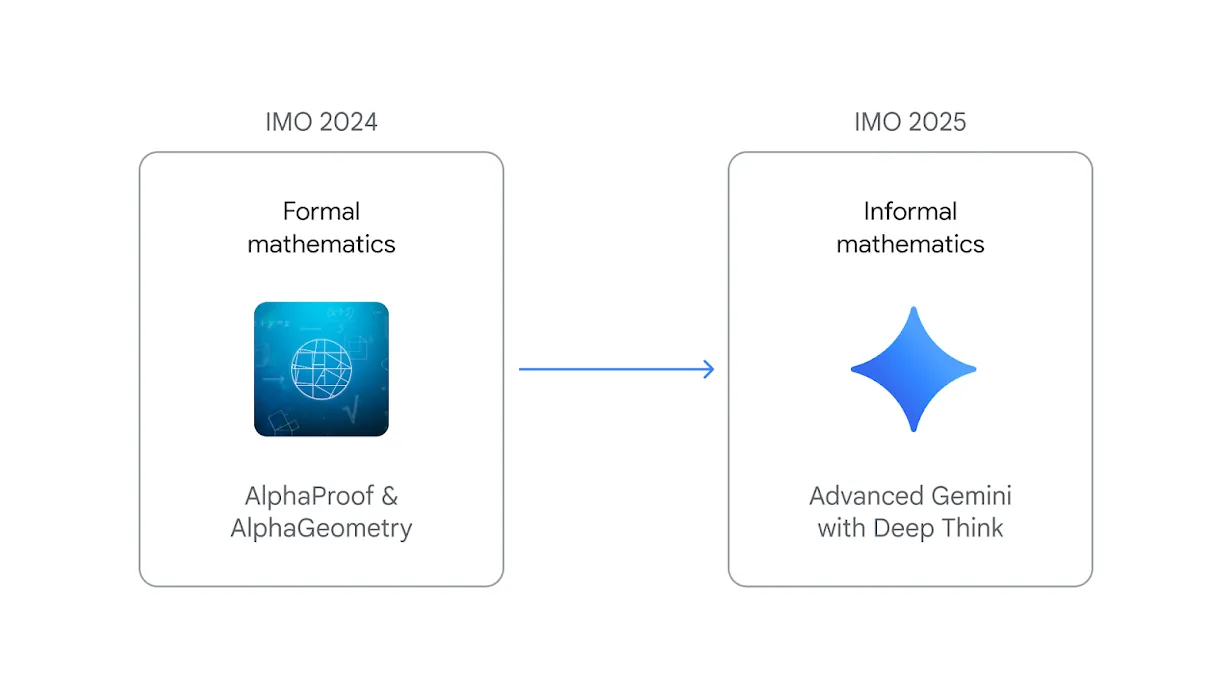

Last year, Google's specialized AlphaProof and AlphaGeometry 2 systems solved four problems and earned silver-medal standard, but only after experts manually translated each problem from natural language into a formal language such as Lean. Some problems also took the systems up to three days to solve.

This year's advanced Gemini model operated end-to-end in natural language, producing rigorous mathematical proofs directly from the official problem descriptions, all within the 4.5-hour competition time limit.

Google says the new system uses a technique called “parallel thinking,” which allows the model to explore and combine multiple possible solutions simultaneously before giving a final answer, rather than pursuing a single, linear chain of thought.

Over the weekend, OpenAI researcher Alexander Wei seemingly stole Google's thunder by claiming that OpenAI's own experimental model had achieved gold-medal performance on the same IMO 2025 problems.

1/N I’m excited to share that our latest @OpenAI experimental reasoning LLM has achieved a longstanding grand challenge in AI: gold medal-level performance on the world’s most prestigious math competition—the International Math Olympiad (IMO). pic.twitter.com/SG3k6EknaC

— Alexander Wei (@alexwei_) July 19, 2025

The devil is in the details, however. Google DeepMind's results were officially graded and certified by the IMO, while OpenAI's were not. OpenAI's model wasn't officially entered in the competition, whereas Google DeepMind was part of the inaugural cohort whose AI results were officially evaluated by IMO coordinators using the same criteria as human contestants.

"We can confirm that Google DeepMind has reached the much-desired milestone, earning 35 out of a possible 42 points — a gold medal score. Their solutions were astonishing in many respects. IMO graders found them to be clear, precise and most of them easy to follow," said IMO President Prof. Dr. Gregor Dolinar.

Thang Luong, who leads the Superhuman Reasoning team at Google DeepMind, also pointed out that OpenAI's results had not been evaluated against the IMO's official marking guideline, and argued that with a single point deducted, the model would have earned silver, not gold.

Yes, there is an official marking guideline from the IMO organizers which is not available externally. Without the evaluation based on that guideline, no medal claim can be made. With one point deducted, it is a Silver, not Gold. https://t.co/0X665z0Pmn

— Thang Luong (@lmthang) July 20, 2025

The competition's format and results show just how difficult these problems are. Each country sends a team of up to six students, who get two sessions of 4.5 hours each to solve six problems (three per session) covering algebra, combinatorics, geometry, and number theory. At this year's competition, only 67 of the 630 contestants received gold medals, just over 10 percent.

Google's technical approach involved training the advanced Gemini model with novel reinforcement learning techniques designed specifically for multi-step reasoning and theorem proving. Google also gave the model access to a curated corpus of high-quality solutions to mathematics problems and added general hints and tips on approaching IMO problems to its instructions.

The implications stretch far beyond mathematical competitions. "This breakthrough lays the foundation for building tools that can help mathematicians prove long-standing conjectures or discover new mathematical realms," according to Google's announcement. The idea of AI systems that can engage in the kind of sustained, creative mathematical reasoning required for original research is no longer science fiction.

Google plans to make a version of this Deep Think model available to trusted testers, including mathematicians, before rolling it out to Google AI Ultra subscribers. OpenAI's competing model, however, remains an experimental research model, and OpenAI does not plan to release anything with this level of math capability for several months.

8/N Btw, we are releasing GPT-5 soon, and we’re excited for you to try it. But just to be clear: the IMO gold LLM is an experimental research model. We don’t plan to release anything with this level of math capability for several months.

— Alexander Wei (@alexwei_) July 19, 2025