The first truly portable version of Google DeepMind’s Vision-Language-Action engine now runs entirely on the robot itself, opening the door to warehouse bots and factory cobots that keep working even when Wi-Fi dies.

Key Points

- Runs fully offline yet scores nearly as high as the hybrid cloud Gemini Robotics model.

- Fine-tunes to new tasks or robot bodies with just 50–100 demos.

- Gemini Robotics SDK lands for developers, though access starts via a “trusted tester” program.

- Part of a broader arms race, alongside Nvidia’s GR00T, to build general-purpose robot brains.

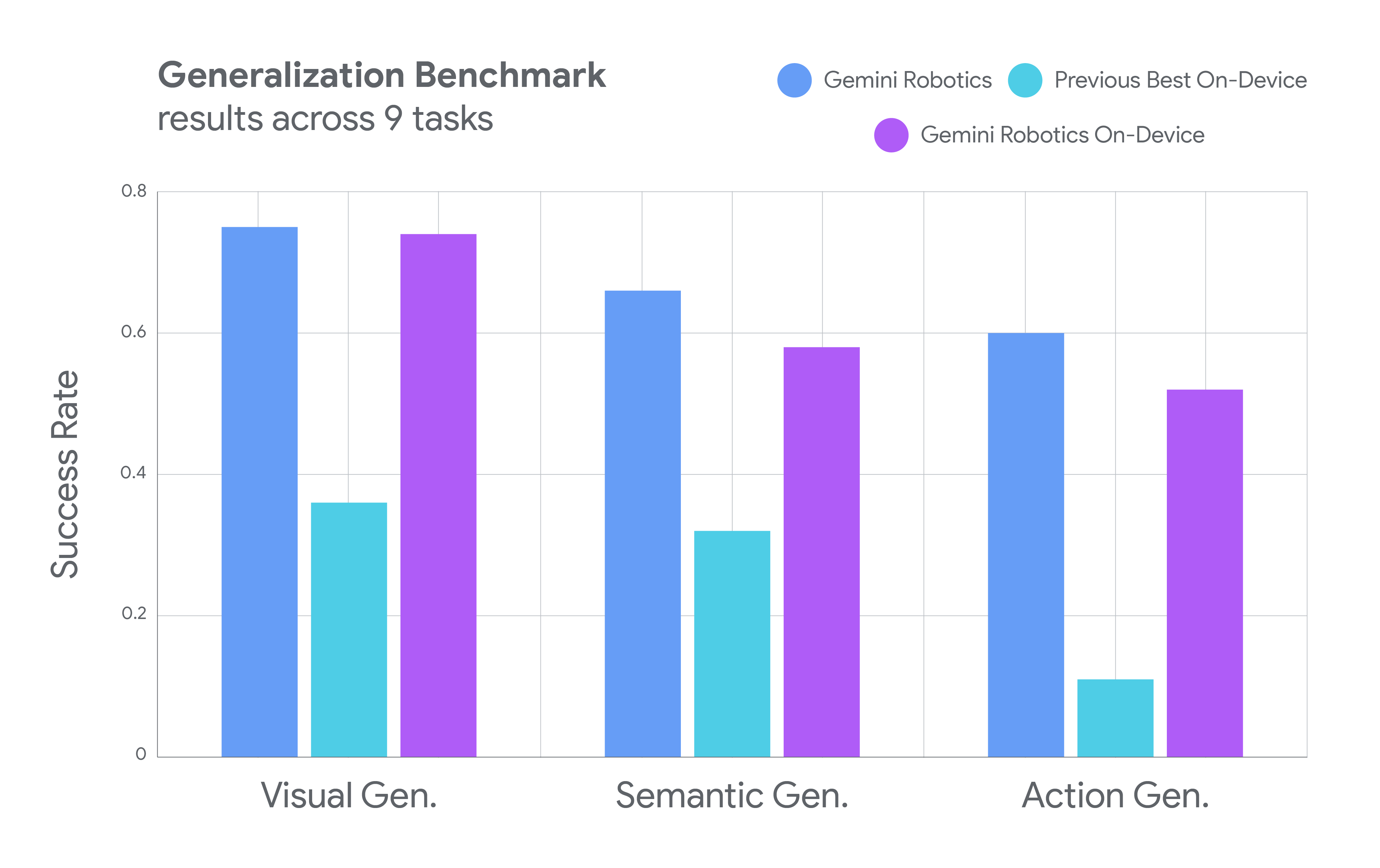

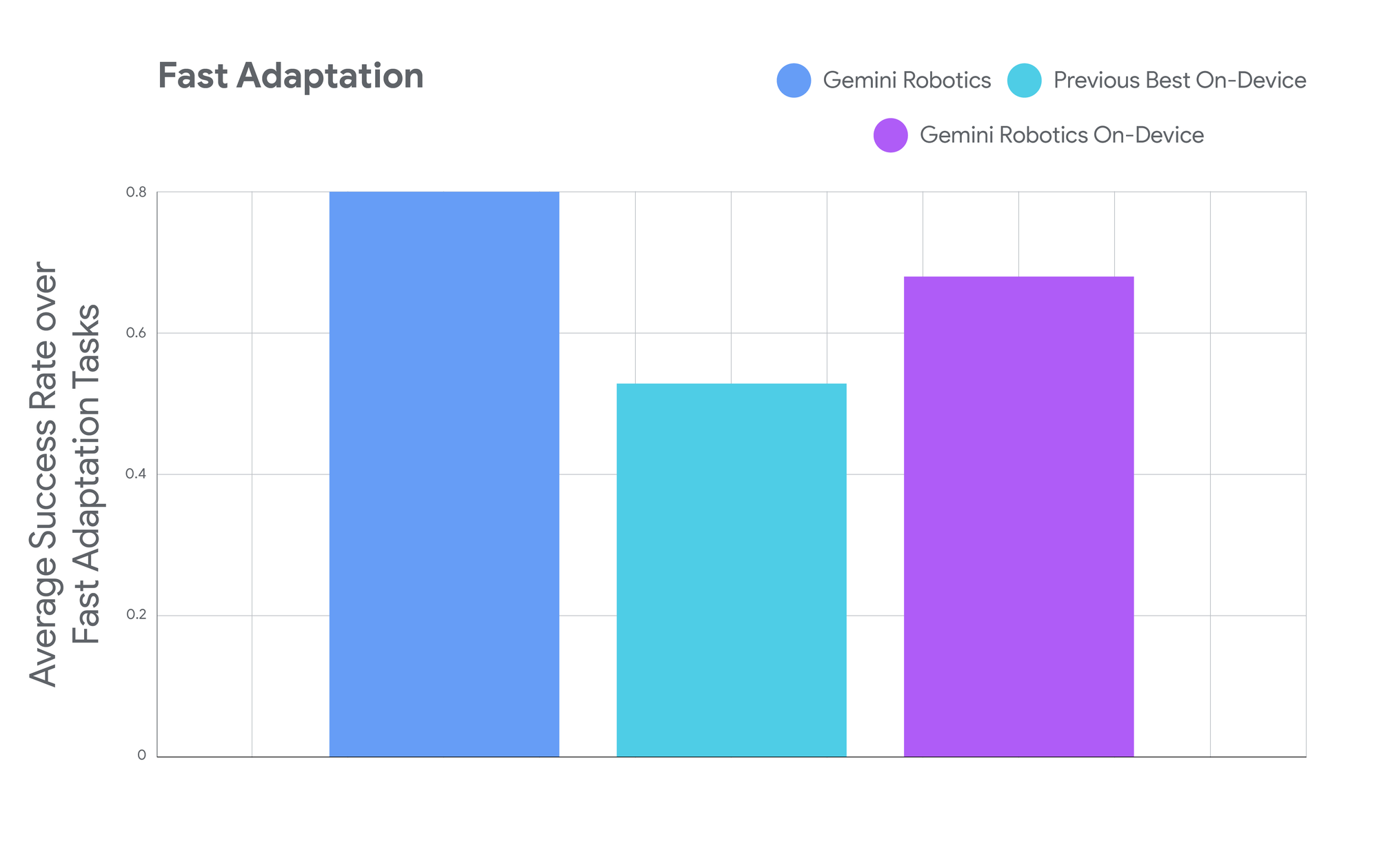

Google DeepMind’s new Gemini Robotics On-Device model is a small powerhouse. The company says it retains “almost” the same prowess as the hybrid cloud version launched in March, yet it is lean enough to run entirely on a robot’s onboard computer. In internal tests, robots running the model worked zippers, folded shirts, and sorted previously unseen parts, all without sending a single packet to the cloud.

This is a significant shift for Google, which has been pushing cloud-connected robotics through its RT-1 and RT-2 models. But the new Gemini Robotics On-Device model acknowledges what anyone who has dealt with spotty Wi-Fi knows: sometimes you just can't rely on the internet.

This isn't just about convenience. When robots need to make split-second decisions, like catching a falling object or steering around an obstacle that suddenly appears, every millisecond counts. Sending visual data to the cloud, processing it there, and beaming instructions back adds network round-trip delays that can turn helpful robots into liability risks.

Google's solution builds on what are known as Vision-Language-Action (VLA) models: AI systems that can see their environment, understand natural-language commands, and translate both into physical actions. Think of it as a more sophisticated version of asking Alexa to turn on the lights, except that the robot can actually grab, manipulate, and move objects in the real world.

The on-device model inherits the dexterity of Google's flagship cloud-based Gemini Robotics model but is compressed to run on the robot's local hardware. In tests, it performed complex manipulation tasks such as folding origami and preparing salads, the kind of precise, multi-step activities that have historically been robotics' white whales.

Google says the model can learn new tasks with as few as 50 demonstrations, and it can even transfer its knowledge to completely different robot bodies. The company showed the same AI controlling both academic ALOHA robots and commercial humanoid robots from Apptronik, suggesting a level of generalization that could accelerate robotics deployment.

The same generalist model can follow natural-language instructions and manipulate a wide variety of objects, including ones it has never seen before.

But this local-first approach comes with trade-offs. A robot's onboard computer has far less processing power than Google's massive cloud infrastructure. While the robots can handle a wide range of tasks "out of the box," the most demanding applications may still need the full cloud-based Gemini Robotics model.

As robotics companies race to deploy everything from warehouse automation to home assistants, connectivity has emerged as a crucial bottleneck. Tesla's Optimus robots, Boston Dynamics' Atlas, and startups like Physical Intelligence are all struggling with the same fundamental question: how much intelligence should live on the robot versus in the cloud?

Google's bet on local processing also addresses growing privacy concerns. When your robot can see your home, understand your routines, and remember your preferences, keeping that data local becomes more than a technical consideration: it's a matter of trust.

The company is rolling out the technology through a trusted tester program along with a Gemini Robotics SDK that lets developers fine-tune the model for their specific use cases. It's a careful, controlled launch that suggests Google learned from the sometimes chaotic rollouts of consumer AI products.

Whether this represents the future of robotics or just one approach among many remains to be seen. But by bringing serious AI capability directly onto robots, cutting the internet umbilical cord, Google could accelerate the deployment of useful robots in settings where connectivity isn't guaranteed. And in a world where even our cars are getting smarter, that might be exactly what robotics needs to finally leave the lab.