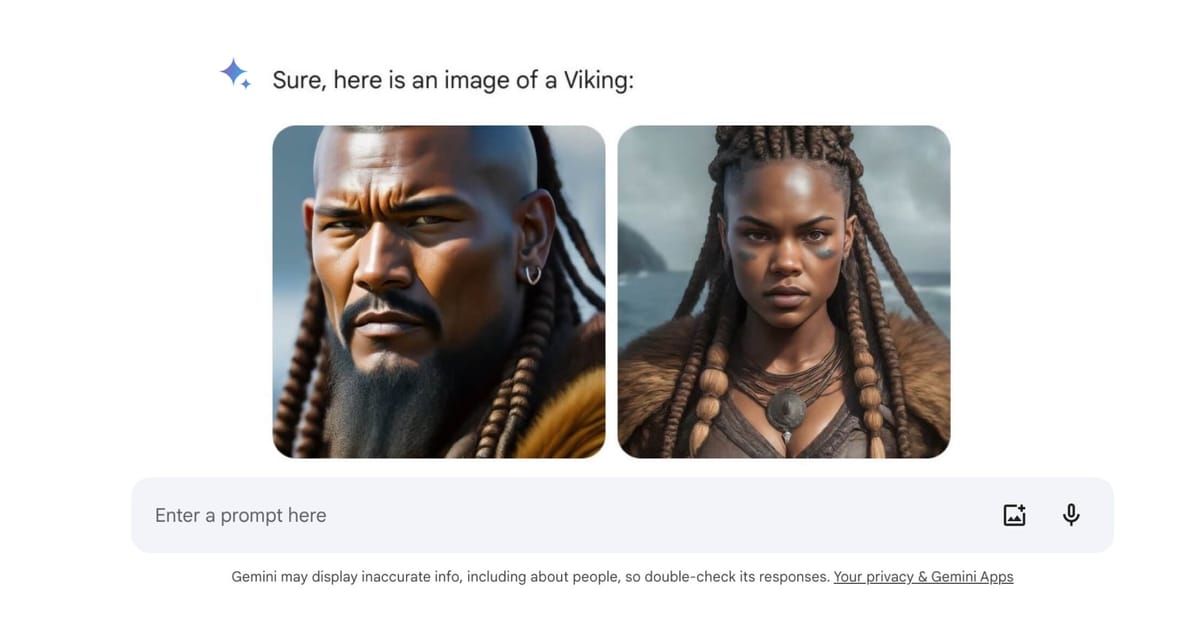

Earlier this week, Google announced that it was pausing Gemini's ability to generate images of people. Following widespread user criticism and an internal review, the company confirmed that Gemini (formerly Bard) was creating “inaccurate or even offensive” images that were “embarrassing and wrong.”

Gemini's image generation was built on top of Imagen 2, which was fine-tuned to avoid past pitfalls of AI image models, such as producing violent or sexually explicit images, or depictions of real people. Google intended for Gemini to generate images that reflected the diversity of its global users. However, when prompted for images of specific types of individuals, or of people in certain cultural or historical contexts, this approach led to content that was not only incorrect but also insensitive.

In a blog post explaining the issues, Senior Vice President Prabhakar Raghavan said two main problems arose with Gemini's image generator. First, attempts to tune the system to showcase diversity backfired. While the goal was to keep any single ethnicity from dominating results for users around the world, the tuning produced strange responses to simple prompts that asked for specific depictions.

Second, the model became overly cautious and started refusing reasonable prompts entirely. Raghavan said it began to “wrongly interpret some very anodyne prompts as sensitive.” Combined, these two issues led to the awkward outcomes that provoked criticism.

As a result, Google has temporarily turned off Gemini's ability to generate images of people, with plans for significant improvements before the feature is reinstated.

Raghavan stated bluntly that the generated results were “not what [Google] intended” and that the feature had “missed the mark.” He committed to significant testing and improvements prior to re-enabling the ability to generate images with people. He even conceded directly, “I can’t promise that Gemini won’t occasionally generate embarrassing, inaccurate or offensive results.”

He also highlighted the inherent limitations and potential for errors within AI models like Gemini, especially regarding current events or controversial topics. He recommended relying on Google Search for accurate information on such matters, given its robust algorithms for surfacing high-quality content.

While it is good that Google pulled the feature, important questions must be asked and changes demanded. Given all the red-teaming, testing, and responsible AI practices that Google touts with each model release, how did such an obviously flawed feature end up in one of the world's leading foundation models? How much testing is actually happening? Who is leading these efforts? OpenAI reportedly spent six months testing GPT-4 before releasing it. Was Gemini's release rushed?

The incident is another reminder of how hard it is to build AI systems that engage with the nuance and diversity of human society. As Google works to refine Gemini, the episode underscores the tech industry's broader challenge: balancing the speed of innovation with ethical considerations and user expectations.