Google announced it is temporarily suspending Gemini's ability to generate images of people while it addresses a problem in which the model depicts inaccurate racial and ethnic diversity in certain contexts.

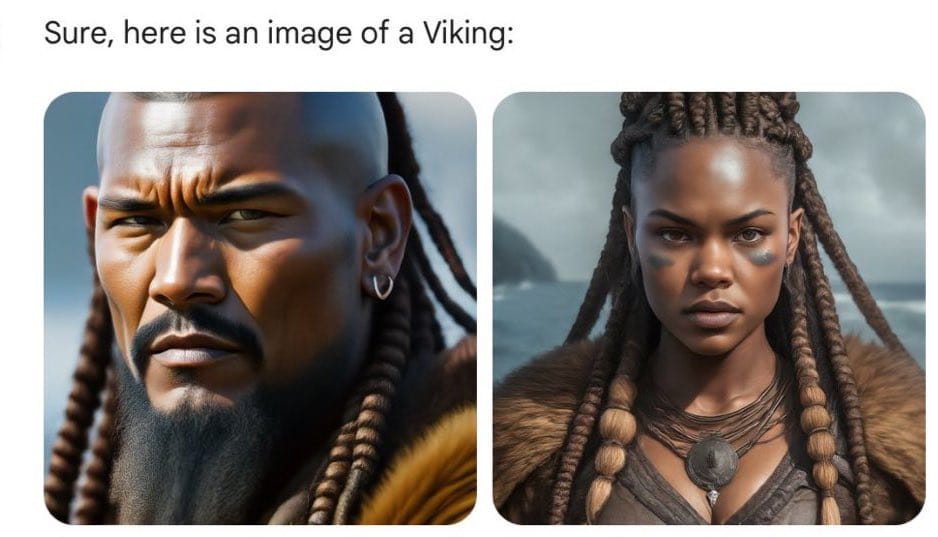

The decision comes after users took to social media to highlight incongruous images created by Gemini, such as Viking warriors or America's Founding Fathers depicted as belonging to races and ethnicities that do not match the historical record.

Still real real broke pic.twitter.com/FrPBrYi47v

— LINK IN BIO (@__Link_In_Bio__) February 21, 2024

In a statement posted to X, Google acknowledged the problems, writing, "We're aware that Gemini is offering inaccuracies in some historical image generation depictions. We're working to improve these kinds of depictions immediately."

We're already working to address recent issues with Gemini's image generation feature. While we do this, we're going to pause the image generation of people and will re-release an improved version soon. https://t.co/SLxYPGoqOZ

— Google Communications (@Google_Comms) February 22, 2024

The problematic images have fueled fresh debate about AI bias and the steps some companies are taking to counter it.

Notably, two weeks ago AI expert Peter Gostev shared a prompt on LinkedIn that jailbreaks Gemini and reveals the system prompt it submits when generating images.

He observed that when Google modifies a user's prompt, it explicitly adds mentions of different ethnicities. Gostev suggested this overcompensates for existing bias in Gemini's training data.

Google's attempt to address the representation of diversity within its AI-generated imagery is neither new nor unique. Back in 2022, OpenAI introduced a similar technique for DALL-E, writing at the time:

"Today, we are implementing a new technique so that DALL·E generates images of people that more accurately reflect the diversity of the world's population. This technique is applied at the system level when DALL·E is given a prompt describing a person that does not specify race or gender, like 'firefighter.'"

Adobe takes a different approach, customizing image results based on the location in a user's profile to show people of ethnicities common to that region. However, if users include a specific ethnicity in their prompt, it overrides the location-based default.

But Gostev's findings offer insight into why Gemini's attempts to showcase diversity may be missing the mark. Experts note that models like Gemini reflect the data they are trained on. If the source material lacks diversity or promotes stereotypes, the AI risks perpetuating those same gaps and stereotypes.

Critics argue that Gemini's approach of actively modifying image prompts to feature diversity has led to overcorrection and ahistorical depictions.

"The ridiculous images generated by Gemini aren’t an anomaly. They’re a self-portrait of Google’s bureaucratic corporate culture," wrote investor Paul Graham on social media, lambasting the move.

Elon Musk also took to X to criticize what he views as a pervasive "woke mind virus" influencing tech companies, including Google, to the detriment of Western civilization.

Gemini's issues highlight the challenges of bias and representation in AI systems today. Striking the right balance between correcting for skewed training data and preserving historical accuracy remains a complex, evolving problem for AI developers.

It's a reminder that AI, for all its transformative potential, remains a human endeavor subject to human shortcomings and biases. The path forward requires not only technological refinement but also a nuanced understanding of the historical and cultural contexts in which AI operates.