After an embarrassing controversy involving racially insensitive and historically inaccurate responses from its new chatbot tool Gemini, Google CEO Sundar Pichai has vowed to implement “structural changes” and improve safeguards.

In a message to employees obtained exclusively by Maginative, Pichai admitted “some of [Gemini’s] responses have offended our users and shown bias – to be clear, that’s completely unacceptable and we got it wrong.”

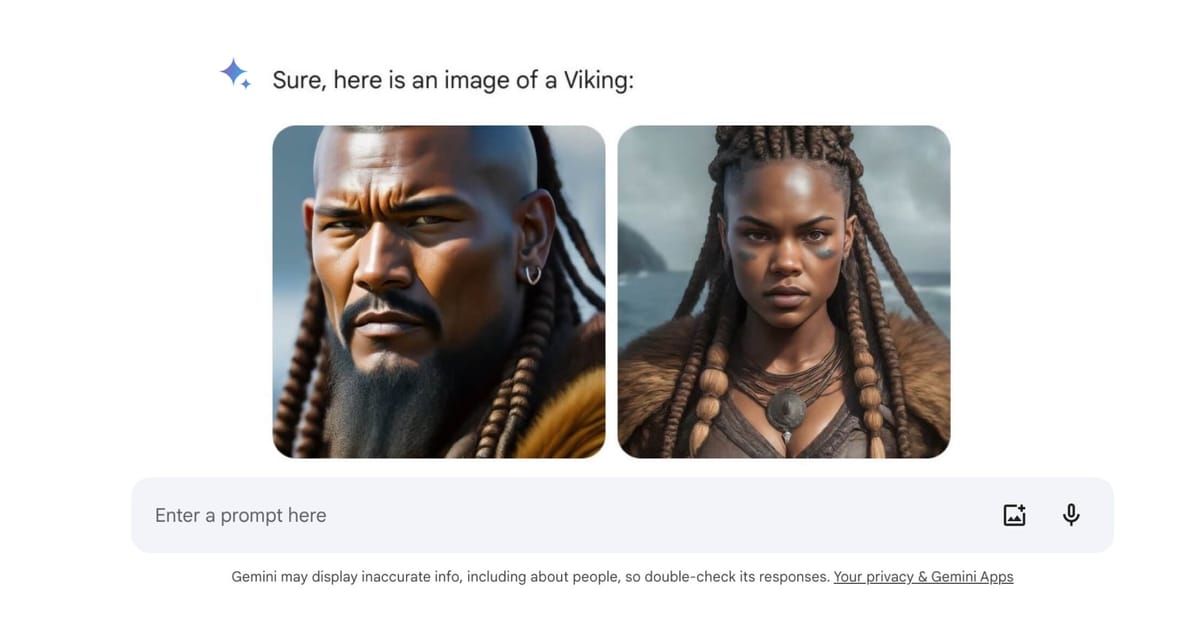

Last week, Google suspended parts of Gemini after users discovered it would generate images of minorities and women when asked to depict historical figures like Vikings, Nazis and popes. It also equated Elon Musk’s societal influence with Hitler’s.

The flawed responses drew criticism from conservatives claiming an anti-white agenda, while advocates warned it could perpetuate harmful stereotypes.

“Our teams have been working around the clock to address these issues,” said Pichai, who added that the tool’s responses have already improved substantially on a wide range of prompts. “No AI is perfect, especially at this emerging stage.”

Nonetheless, Pichai stressed Google’s “sacrosanct” mission demands unbiased information users can trust – a bar its AI must meet too.

Among the planned actions are updated guidelines, more oversight when introducing new products, and technical fixes to reinforce impartiality and historical accuracy.

Here is a copy of the memo in its entirety:

Hi everyone

I want to address the recent issues with problematic text and image responses in the Gemini app (formerly Bard). I know that some of its responses have offended our users and shown bias — to be clear, that’s completely unacceptable and we got it wrong.

Our teams have been working around the clock to address these issues. We’re already seeing a substantial improvement on a wide range of prompts. No AI is perfect, especially at this emerging stage of the industry’s development, but we know the bar is high for us and we will keep at it for however long it takes. And we’ll review what happened and make sure we fix it at scale.

Our mission to organize the world’s information and make it universally accessible and useful is sacrosanct. We’ve always sought to give users helpful, accurate, and unbiased information in our products. That’s why people trust them. This has to be our approach for all our products, including our emerging AI products.

We’ll be driving a clear set of actions, including structural changes, updated product guidelines, improved launch processes, robust evals and red-teaming, and technical recommendations. We are looking across all of this and will make the necessary changes.

Even as we learn from what went wrong here, we should also build on the product and technical announcements we’ve made in AI over the last several weeks. That includes some foundational advances in our underlying models e.g. our 1 million long-context window breakthrough and our open models, both of which have been well received.

We know what it takes to create great products that are used and beloved by billions of people and businesses, and with our infrastructure and research expertise we have an incredible springboard for the AI wave. Let’s focus on what matters most: building helpful products that are deserving of our users’ trust.

The stumble showcases challenges around mitigating bias in AI, though rivals like OpenAI have faced similar backlash over uneven societal depictions. It also threatens Google’s bid to lead an AI revolution it claims could boost productivity and personalize information.

But Pichai remains upbeat, citing recent advances that provide “an incredible springboard for the AI wave” and focusing engineers on building “helpful products that are deserving of our users’ trust.”