Google DeepMind has developed a new digital watermarking technique called SynthID that can imperceptibly label images as being created by artificial intelligence. The tool is being launched in beta for a limited number of Google Cloud customers using Imagen, Google's advanced text-to-image generator.

SynthID works by embedding an invisible watermark directly into the pixels of an AI-generated image. The watermark is designed to be imperceptible to the human eye yet remain detectable by software even after the image is cropped, filtered, color-adjusted, or saved with lossy compression. This lets users tag their Imagen creations in a way that persists as the images are shared or edited.
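How SynthID embeds and reads its mark is not detailed here, and the Python sketch below does not reproduce it. It only illustrates the general idea of a pixel-level mark that software can find but people cannot see, using a classic additive spread-spectrum scheme: a secret key seeds a faint pseudo-random ±1 pattern that is added to the pixels, and detection correlates the image against that same pattern. The key, the `strength` value, and the function names are hypothetical.

```python
import random

# Toy spread-spectrum watermark for an 8-bit grayscale image stored as a list
# of rows. This is NOT SynthID's method; all names and parameters are hypothetical.


def make_pattern(key, width, height):
    """Derive a pseudo-random +1/-1 pattern from a secret integer key."""
    rng = random.Random(key)
    return [[rng.choice((-1, 1)) for _ in range(width)] for _ in range(height)]


def embed(image, key, strength=4):
    """Add the key's pattern, scaled by `strength`, to every pixel (clamped to 0..255)."""
    height, width = len(image), len(image[0])
    pattern = make_pattern(key, width, height)
    return [
        [min(255, max(0, image[y][x] + strength * pattern[y][x])) for x in range(width)]
        for y in range(height)
    ]


def detect(image, key):
    """Return the correlation between the mean-removed image and the key's pattern.

    A score near `strength` suggests the mark is present; a score near 0 suggests
    it is absent. Subtracting the mean makes the score ignore uniform brightness shifts.
    """
    height, width = len(image), len(image[0])
    pattern = make_pattern(key, width, height)
    n = height * width
    mean = sum(sum(row) for row in image) / n
    total = sum(
        (image[y][x] - mean) * pattern[y][x] for y in range(height) for x in range(width)
    )
    return total / n


if __name__ == "__main__":
    rng = random.Random(0)
    cover = [[rng.randint(40, 215) for _ in range(128)] for _ in range(128)]
    marked = embed(cover, key=1234)
    brighter = [[p + 20 for p in row] for row in marked]  # uniform brightness shift
    print("unmarked:", round(detect(cover, 1234), 2))
    print("marked:", round(detect(marked, 1234), 2))
    print("marked + brightened:", round(detect(brighter, 1234), 2))
    print("wrong key:", round(detect(marked, 9999), 2))
```

Because detection depends on every pixel staying aligned with the key's pattern, cropping or rotating the image defeats this toy detector. Surviving edits like those is the harder problem DeepMind says SynthID is built to handle.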

According to DeepMind, SynthID is the first tool of its kind offered by a major cloud provider. Pushmeet Kohli, Vice President of Research at Google DeepMind, emphasized the robustness of SynthID. "Our watermarking technology creates an embedded pattern that is not only invisible but also resistant to various manipulations. It's a leap beyond existing watermarking techniques which can be easily tampered with," said Kohli.

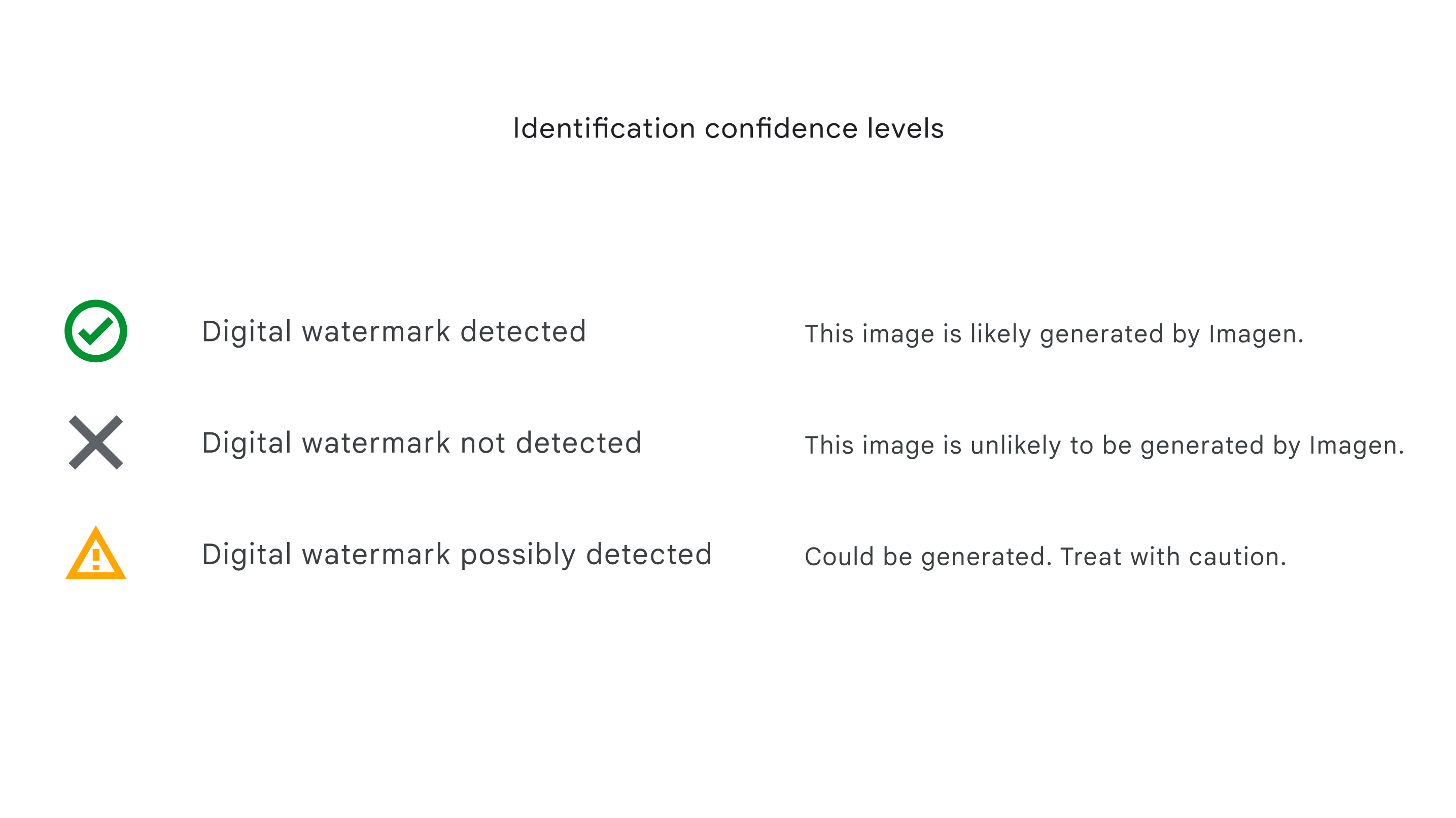

SynthID can also scan an image for its digital watermark to help users assess whether it was generated by Imagen. Results are reported at three confidence levels: watermark detected, not detected, or possibly detected.

The goal of the technology is to provide a reliable way for people to identify synthetic media and combat potential misuse. As generative AI advances, being able to distinguish real from AI-created content is becoming increasingly critical.

"While generative AI can unlock huge creative potential, it also presents new risks, like enabling creators to spread false information — both intentionally or unintentionally," wrote Sven Gowal, Staff Research Engineer at Google DeepMind, in a blog post. "Being able to identify AI-generated content is critical to empowering people with knowledge of when they’re interacting with generated media, and for helping prevent the spread of misinformation."

DeepMind claims that, unlike traditional visible watermarks or metadata tags, SynthID produces a robust, tamper-resistant identifier. However, the tech community has greeted SynthID with a blend of enthusiasm and skepticism. Experts note that no current watermarking technique is foolproof and that motivated attackers will likely find ways to neutralize the marks.

"There are few or no watermarks that have proven robust over time," said Ben Zhao, a professor at the University of Chicago who studies AI authentication. "An attacker seeking to promote deepfake imagery as real, or discredit a real photo as fake, will have a lot to gain, and will not stop at cropping, or lossy compression or changing colors."

Claire Leibowicz, the head of the AI and Media Integrity Program at the Partnership on AI, expressed cautious optimism. "While not a silver bullet, DeepMind's watermarking tool is a meaningful step in the right direction. It's crucial that the tech community continues to share knowledge about what works and what doesn't," she said.

SynthID is nonetheless a step toward more reliable attribution of AI-generated content, and Google plans to gather feedback during the beta before potentially expanding availability.

Google DeepMind's first foray into watermarking AI-generated content has limitations, however. Because SynthID is proprietary, only Google can embed and detect its watermarks, which limits its usefulness beyond Google's own tools. Sasha Luccioni, an AI researcher at the startup Hugging Face, argues that a more open approach would amplify the technology's benefits.

"If this watermarking tech were integrated across all image generation systems, the chances of misuse could be significantly reduced," said Luccioni.

According to Kohli, this launch is just the "experimental" phase, and the tool’s efficacy will be assessed based on user interaction and feedback. Although he remained tight-lipped about whether SynthID would be expanded to other AI-generated content or opened up to third parties, he underscored Google DeepMind's commitment to responsible AI practices.

Google aims to build responsible AI tools like SynthID widely into its products. At the company's recent I/O conference, CEO Sundar Pichai said Google is committed to building watermarking and other protections directly into its models.

For now, DeepMind's technology provides an early working solution for tagging Imagen content. As synthetic media proliferates, robust tracking tools will only grow in importance. While not perfect, SynthID moves Google toward its goal of empowering responsible AI creation. But as with all things in the fast-paced realm of AI, it's a first step on a long and uncertain road.