Imagine typing “a rainforest during a thunderstorm” and stepping into it seconds later. That’s the premise of Genie 3, Google DeepMind’s new world model that can generate fully interactive, physics-aware environments from a few lines of text—and render them in real time at 24 FPS.

Key Points:

- Genie 3 builds dynamic, explorable worlds from text, with real-time navigation.

- It models physics, weather, plants, animals—even fantasy creatures.

- Environments remain consistent across minutes of interaction.

- Limited release for now, but implications span games, training, and AI agent research.

Genie 3 isn’t just another AI video generator—it’s a live simulator. You give it a scene—say, “walking through Venice during a festival” or “exploring the deep sea with glowing jellyfish”—and it outputs a navigable, reactive world. You can move through it like you’re playing a game, and the environment holds together with physical consistency, minute by minute.

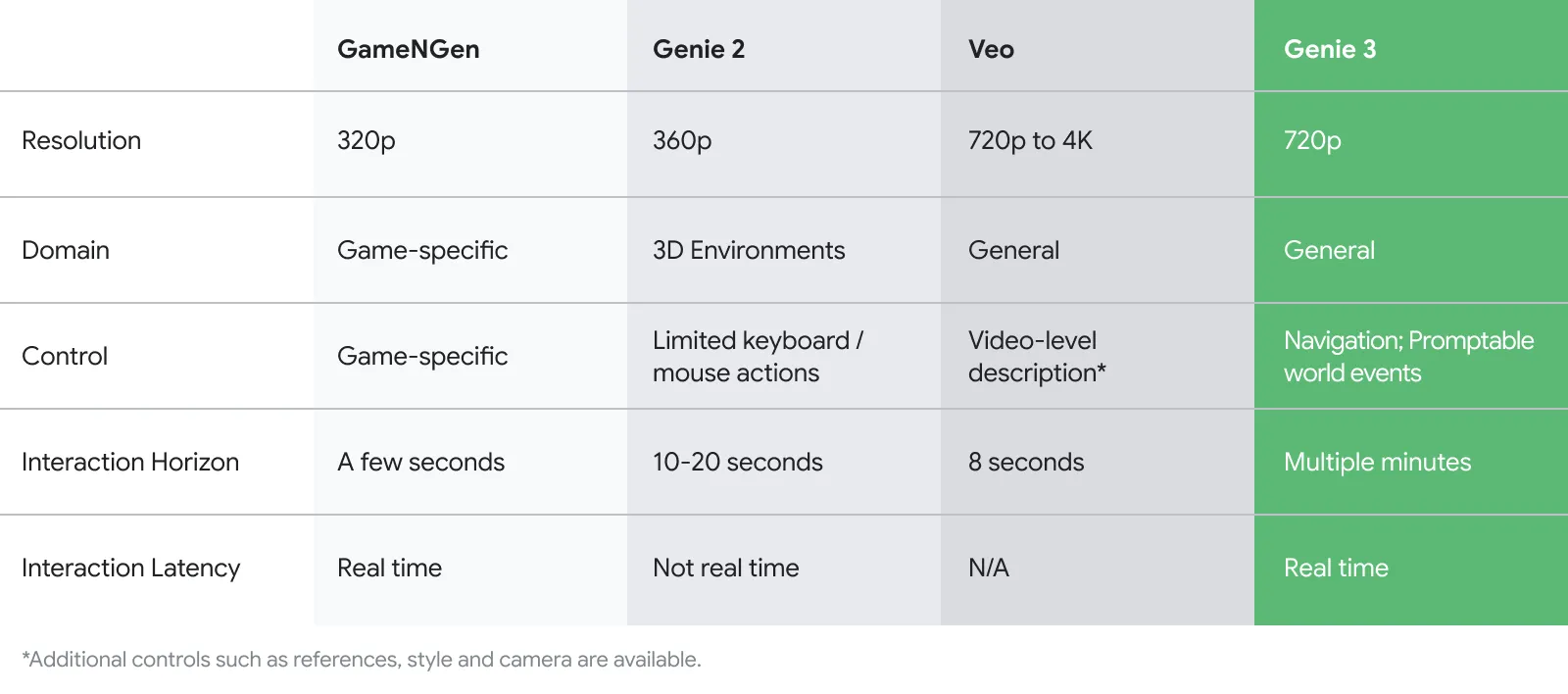

This isn't Google's first swing at world models. Last year they introduced the first foundation world models with Genie 1 and Genie 2, which could generate new environments for agents. But while Genie 2 could manage maybe 10-20 seconds of somewhat coherent interaction at 360p before things started falling apart, Genie 3 is playing in a different league entirely.

Genie 3 can generate multiple minutes of interactive 3D environments at 720p resolution and 24 frames per second, a significant jump from Genie 2's 10 to 20 seconds. DeepMind also figured out how to make the model remember what it generated up to a minute ago, so if you're exploring a virtual Venice and circle back to where you started, the same gondolas are still there, along with the same buildings and their weathered plaster.
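To put those figures in perspective, here is a quick back-of-the-envelope calculation. It assumes "720p" means 1280x720 pixels (the exact frame dimensions are not stated) and takes the one-minute memory figure at face value. It says nothing about how Genie 3 is actually built.

```python
# Back-of-the-envelope numbers for real-time generation.
# Assumption: "720p" is taken to mean 1280x720; exact dimensions are not stated.
FPS = 24
WIDTH, HEIGHT = 1280, 720
MEMORY_SECONDS = 60  # "up to a minute ago"

frame_budget_ms = 1000 / FPS            # time available to produce one frame
pixels_per_frame = WIDTH * HEIGHT
pixels_per_second = pixels_per_frame * FPS
frames_in_memory = FPS * MEMORY_SECONDS  # frames covered by a one-minute lookback

print(f"Time budget per frame: {frame_budget_ms:.1f} ms")
print(f"Pixels per frame: {pixels_per_frame:,}")
print(f"Pixels per second: {pixels_per_second:,}")
print(f"Frames spanned by one minute of memory: {frames_in_memory:,}")
```

Under these assumptions, each frame has to be ready in roughly 42 milliseconds, and a one-minute lookback spans 1,440 frames.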

The tech behind it is wild. Unlike systems like NeRF or Gaussian Splatting that rely on fixed 3D reconstructions, Genie 3 generates each frame on the fly, predicting how the world should evolve based on what it has already shown and on your actions. This means it can simulate water physics, light shifts, wind-blown trees, and cascading lava without a prebuilt 3D scene.
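The general idea of generating frames one at a time, each conditioned on recent history and the latest user action, can be sketched with a toy loop. This is purely illustrative: `next_frame`, `rollout`, and the grid-position "frame" are hypothetical stand-ins, not DeepMind's architecture or API, and the hand-written rule takes the place of a learned model.

```python
from collections import deque
from typing import Deque, List, Tuple

# Toy "frame": just the viewer's (x, y) position. A real model emits images.
Frame = Tuple[int, int]

MOVES = {"left": (-1, 0), "right": (1, 0), "up": (0, 1), "down": (0, -1)}


def next_frame(history: Deque[Frame], action: str) -> Frame:
    """Hypothetical stand-in for a learned model: produce the next frame
    from the recent history and the user's action."""
    x, y = history[-1]
    dx, dy = MOVES[action]
    return (x + dx, y + dy)


def rollout(actions: List[str], fps: int = 24, memory_seconds: int = 60) -> List[Frame]:
    """Generate one frame per action, keeping only a bounded window of history."""
    # Bounded memory: frames older than the window are dropped automatically.
    history: Deque[Frame] = deque([(0, 0)], maxlen=fps * memory_seconds)
    frames: List[Frame] = []
    for action in actions:
        frame = next_frame(history, action)  # generated on the fly, not looked up
        history.append(frame)
        frames.append(frame)
    return frames


if __name__ == "__main__":
    print(rollout(["right", "right", "up", "left"]))
```

Nothing here is precomputed: each frame exists only once the previous frames and the current action are known, which is the key contrast with a fixed 3D reconstruction.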

But here's where it gets really interesting: promptable world events. You're skiing down a mountain, everything's normal, then you type "add a herd of deer" — boom, deer appear in the scene. It's like having god mode for reality, except the reality is being generated on the fly.

DeepMind isn't positioning this as a game engine killer, though that's what it looks like. They're laser-focused on using Genie 3 to train AI agents. The company said that while Genie 3 has implications for educational experiences, gaming, and prototyping creative concepts, its real unlock will come in training agents for general-purpose tasks, which it said is essential to reaching AGI.

They've already tested it with their SIMA agent, giving it goals like "approach the industrial mixer" in a simulated bakery. The agent has to figure out how to navigate Genie 3's world to complete tasks — and it works, because the world stays consistent enough for planning multi-step actions.

This puts Google in direct competition with Meta, which has been pushing hard on its own world models. Meta's V-JEPA 2 is a 1.2 billion-parameter model that learns from video to help robots navigate unfamiliar environments. But while Meta's focused on robotics applications, Google's going broader — fantasy worlds, historical recreations, volcanic terrains, you name it.

It's still early days for the technology and Google was quick to point out known limitations. For example, text rendering is wonky unless you specifically describe it in your prompt. The model can only handle a few minutes of interaction before things start to break down. And while DeepMind showed off impressive demos of the SIMA agent completing tasks, the range of actions you can actually perform is still pretty limited compared to a real game engine.

For now, we wait. DeepMind says they're exploring ways to make Genie 3 more widely available, but they're taking their time. It is currently in a limited research preview, accessible only to a select group of creators and academics.

But the promise is tantalizing: type a description, explore a world. No modeling software, no game engine, no coding. Just pure imagination turned interactive.