Google and Jigsaw (its research unit focused on online threats) have published a comprehensive analysis of generative AI misuse, shedding light on how the technology is being exploited in the real world. The study, which examined nearly 200 media reports of public incidents between January 2023 and March 2024, reveals key patterns in how malicious actors are leveraging generative AI capabilities.

Key findings include:

- Most reported cases involve the exploitation of easily accessible AI capabilities, rather than sophisticated attacks on AI systems themselves.

- The most prevalent tactics center around manipulating human likeness, including impersonation, creating synthetic personas, and generating non-consensual intimate imagery.

- Common goals behind AI misuse include influencing public opinion, enabling scams and fraud, and monetizing content at scale.

- The increased accessibility of generative AI tools has lowered barriers to entry for malicious actors, democratizing access to powerful manipulation techniques.

- Novel forms of misuse blur ethical lines, such as the undisclosed use of AI-generated media in political campaigns.

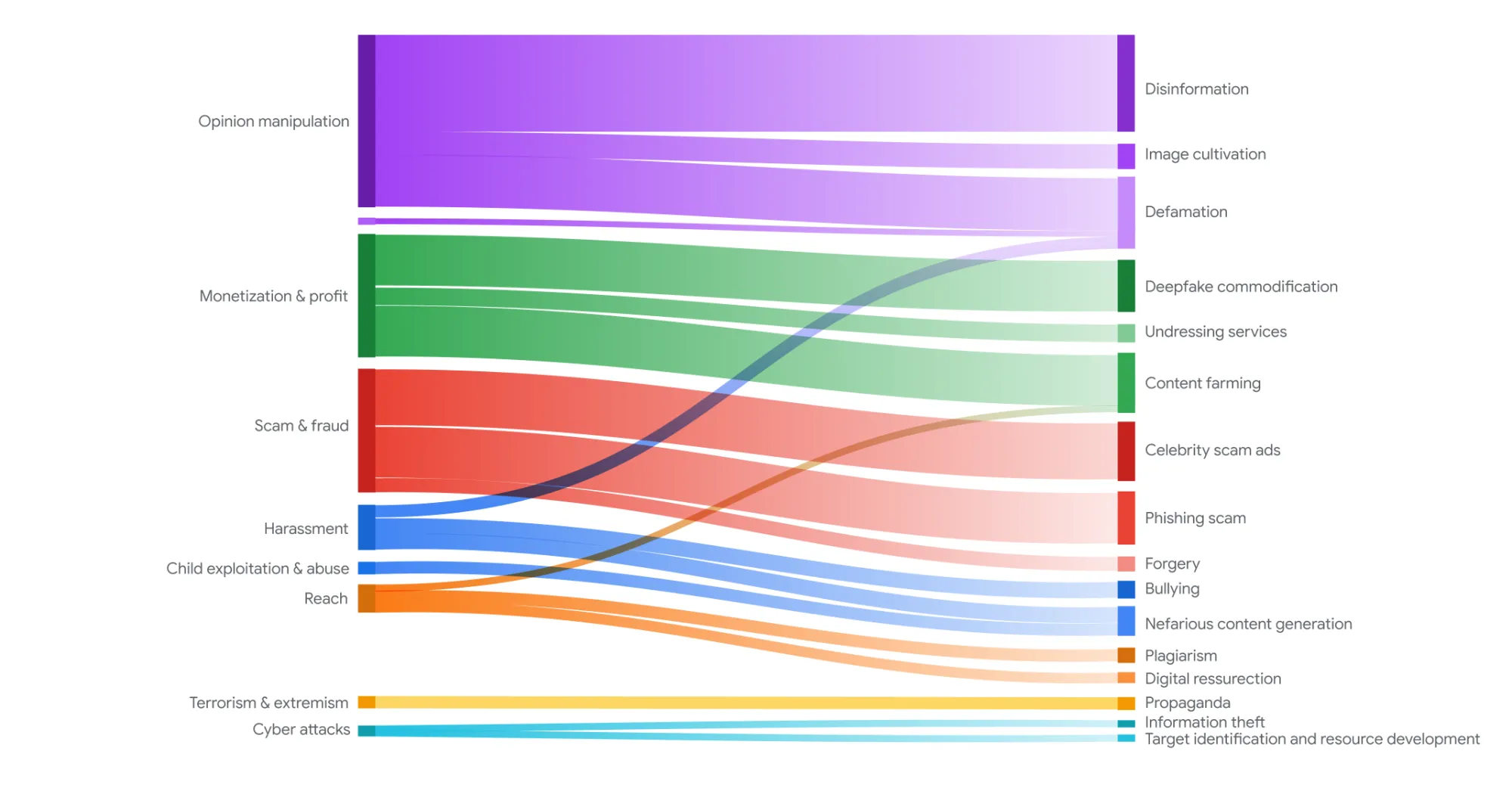

The researchers categorized common tactics and identified new patterns in the misuse of generative AI. The report highlights two main types of misuse: exploitation of AI capabilities and compromise of AI systems. Tactics such as impersonation, creating synthetic personas, and falsifying information were frequently observed, often with the intent to manipulate public opinion, commit fraud, or generate profit.

One notable case from February 2024 involved scammers using AI to impersonate corporate executives, resulting in a $26 million loss for an international company. This incident underscores the potential for significant financial damage through AI-generated deception.

While the study highlights potential threats, Google emphasizes that the research is part of a broader effort to proactively address AI misuse. The company is advocating for generative AI literacy campaigns to educate the public about these technologies and their potential for manipulation. Google is also developing tools like SynthID to help identify AI-generated content, and it is engaging in industry collaboration through initiatives such as the C2PA (Coalition for Content Provenance and Authenticity), recognizing that combating AI misuse requires a coordinated approach across the tech sector.

The research emphasizes the need for better safeguards and ethical guidelines as generative AI becomes more prevalent. As these tools become more advanced and integrated into everyday applications, understanding and addressing potential misuse becomes increasingly critical for maintaining trust in digital information ecosystems.

By tracking, sharing, and addressing potential misuse head-on, Google aims to foster responsible use of generative AI and minimize its risks. Ultimately, studies like this empower researchers, policymakers, and tech companies to build safer technologies and combat future misuse.