Researchers at Lasso Security recently reported that they had uncovered more than 1,500 exposed API tokens on Hugging Face, a prominent AI development platform. The breach gave access to the accounts of 723 organizations, including major players such as Meta and Microsoft, and highlights significant weaknesses in the security practices of both AI developers and platforms.

Hugging Face, known for its Transformers library and its accessible platform for open-source AI models and datasets, is a crucial resource for developers working on large language models (LLMs) and generative AI. The platform hosts more than 500,000 AI models and 250,000 datasets, which makes it a goldmine for developers but also a significant target for attackers.

The Lasso Security team's investigation into Hugging Face's security was driven by the platform's popularity in the open-source AI community. The research focused on finding exposed API tokens, a key vulnerability for LLMs, which are often hosted on platforms like Hugging Face and accessed remotely. The team concentrated on three primary risks: supply chain vulnerabilities, training data poisoning, and model theft.

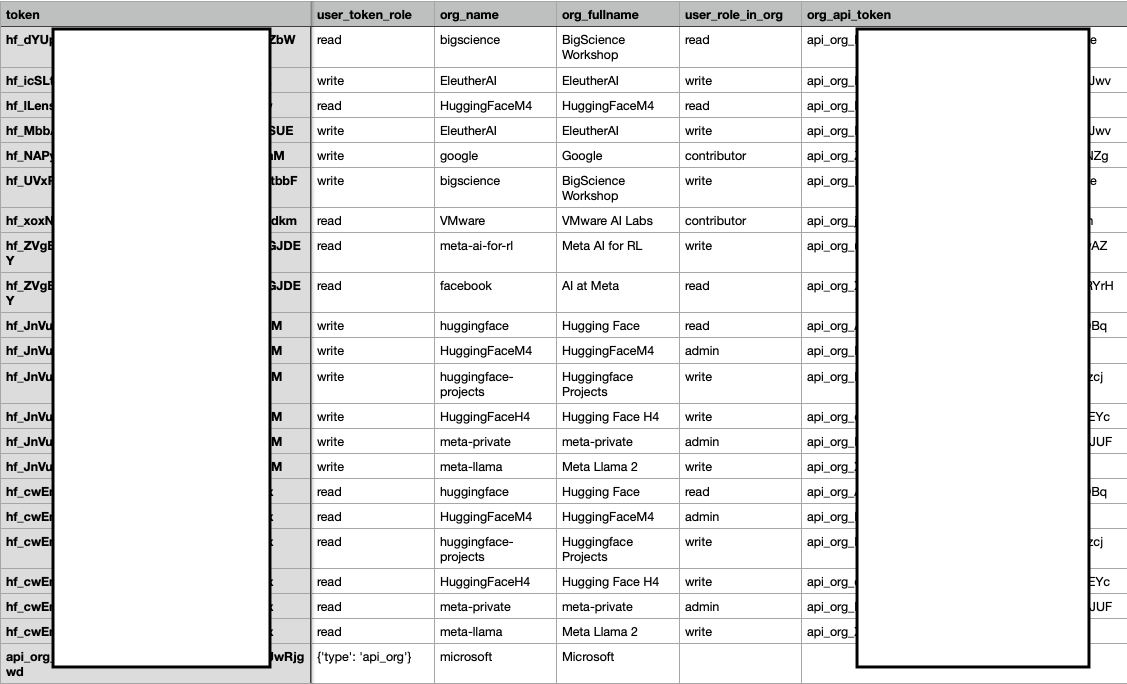

Using searches on both GitHub and Hugging Face, among other methods, the team found 1,681 valid tokens. These tokens provided access to high-profile organization accounts and enabled control over popular AI models such as Meta-Llama, Bloom, and Pythia.
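To illustrate the kind of search involved, here is a minimal Python sketch of a pattern-based scanner that flags strings shaped like Hugging Face tokens in a directory tree. It is not Lasso's actual tooling. It assumes that user access tokens begin with `hf_` and legacy organization tokens with `api_org_`, each followed by a long alphanumeric run; those shapes, and the 30-character minimum used here, are assumptions to verify against Hugging Face's current documentation. The function names `find_candidate_tokens` and `scan_tree` are hypothetical.

```python
import re
from pathlib import Path

# Assumed token shapes (verify against Hugging Face's current docs):
# user access tokens start with "hf_", legacy org tokens with "api_org_",
# followed by a long alphanumeric run. The 30-char minimum is illustrative.
TOKEN_PATTERN = re.compile(r"\b(?:hf|api_org)_[A-Za-z0-9]{30,}\b")


def find_candidate_tokens(text: str) -> list[str]:
    """Return every substring of `text` that looks like a Hugging Face token."""
    return TOKEN_PATTERN.findall(text)


def scan_tree(root: str) -> dict[str, list[str]]:
    """Map each readable text file under `root` to the candidate tokens it contains."""
    hits: dict[str, list[str]] = {}
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        found = find_candidate_tokens(text)
        if found:
            hits[str(path)] = found
    return hits
```

Running `scan_tree(".")` over a checkout before pushing is a rough, local analogue of push-time secret scanning. A pattern match only means a string looks like a token; confirming that it is still valid is a separate step. When reporting hits, print only a short prefix of each token so the report does not leak the secret again.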

The implications of this breach are far-reaching. Full access to these APIs meant potential control over models with millions of downloads, posing a significant threat to their integrity. Because the tokens also exposed datasets and private models, the risks of training data poisoning and model theft rose as well. The Lasso team also found that although Hugging Face had deprecated org_api tokens, they still worked for read access.

Following their findings, Lasso Security immediately notified affected users and organizations, urging them to revoke the exposed tokens and tighten their security measures. The team also reported the vulnerability to Hugging Face and recommended stronger practices, such as GitHub's approach of continuously scanning repositories and automatically revoking any OAuth token, GitHub App token, or personal access token that is pushed to a public repository or public gist.

Many of the affected organizations (Meta, Google, Microsoft, VMware, and others) and users acted quickly and responsibly, revoking the tokens and removing the publicly exposed token code on the same day they received the report.

It is astonishing that API tokens granting privileged access were found hard-coded in public repositories, without even basic protections. Organizations and developers working in AI must begin treating API tokens with the utmost caution, much like digital identities, and embrace the best practices that software development has relied on for decades. Adopting a zero-trust approach, with rigorous identity authentication and token lifecycle management, is imperative for safeguarding these transformative technologies.
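The most basic alternative to hard-coding is to keep tokens out of source code entirely and supply them at runtime. The following is a minimal sketch, assuming the token arrives through an environment variable named `HF_TOKEN`, which is the variable recent versions of the `huggingface_hub` library check (confirm against its documentation for your version). The helper names `load_hf_token`, `redact`, and `MissingTokenError` are hypothetical.

```python
import os


class MissingTokenError(RuntimeError):
    """Raised when no token is available in the environment."""


def load_hf_token(var_name: str = "HF_TOKEN") -> str:
    """Read the API token from the environment instead of from source code."""
    token = os.environ.get(var_name, "").strip()
    if not token:
        raise MissingTokenError(
            f"{var_name} is not set; export it in the shell or CI secret store "
            "rather than hard-coding it."
        )
    return token


def redact(token: str, keep: int = 4) -> str:
    """Return a log-safe form of `token` showing only its first `keep` characters."""
    return token[:keep] + "*" * max(len(token) - keep, 0)
```

In use, a script calls `token = load_hf_token()` once at startup, passes the value to whatever client needs it, and logs only `redact(token)`. Because the secret never appears in the repository, pushing the code publicly does not expose it, and rotating the token requires no code change.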

The Lasso Security research underscores the necessity for a shift in mindset towards stronger, more proactive security behavior in the AI industry. In a digital world where AI is increasingly central, ensuring the safety and integrity of these systems is not just a technical challenge but a crucial responsibility for all stakeholders involved.