Twenty prominent technology companies, including Google, Microsoft, IBM, Meta, and OpenAI, signed an accord today agreeing to take concrete steps to prevent the spread of deceptive AI-generated content aimed at interfering with elections taking place this year.

The "Tech Accord to Combat Deceptive Use of AI in 2024 Elections" was announced at the annual Munich Security Conference. Signatories pledged to work together on developing tools to detect and address online distribution of fabricated audio, video and images related to elections.

The accord specifically focuses on AI-generated content that deceptively alters the appearance or words of political candidates, or that gives citizens false voting information. This type of manipulated media, often called "deepfakes," presents a threat to election integrity around the world, according to the companies.

"Elections are the beating heart of democracies. The Tech Accord to Combat Deceptive Use of AI in 2024 elections is a crucial step in advancing election integrity, increasing societal resilience, and creating trustworthy tech practices," said Ambassador Dr. Christoph Heusgen, Chairman of the Munich Security Conference, in a statement.

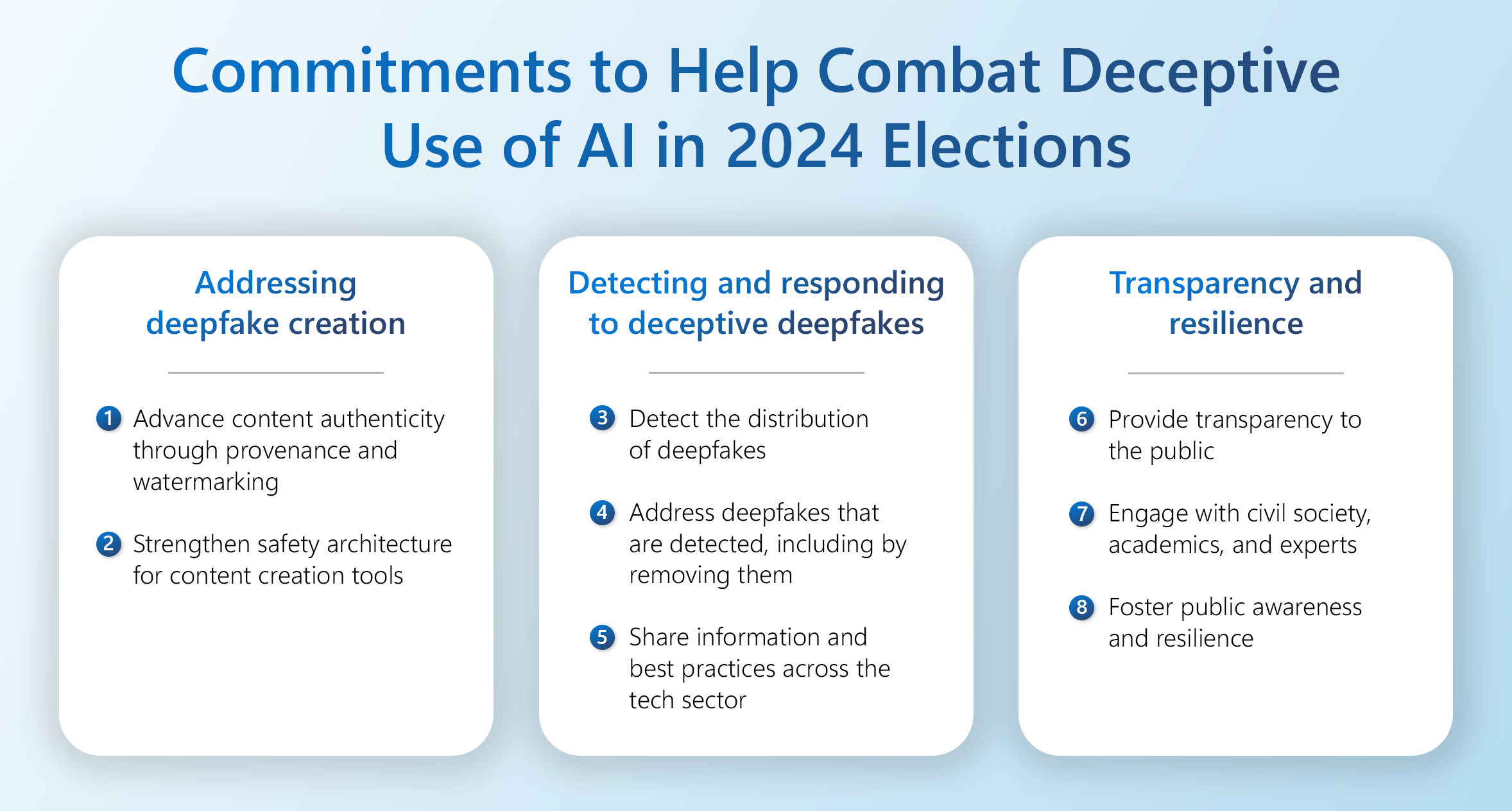

As part of the accord, companies agreed to eight commitments, including assessing AI systems that could enable election deception campaigns, seeking to detect deepfakes on their platforms, providing transparency around policies, and supporting public awareness efforts.

The accord builds on work already underway by many of the companies to address manipulated media and election interference. For example, in 2020 Microsoft announced a tool called Video Authenticator that analyzes an image or video to provide a percentage chance that it is artificially manipulated.

In a statement, OpenAI's VP of Global Affairs Anna Makanju said: "We're committed to protecting the integrity of elections by enforcing policies that prevent abuse and improving transparency around AI-generated content."

While the accord is a positive step, experts say it is limited because it relies on voluntary measures by private companies, many of which have financial interests in AI development. Stronger regulations and government policies are likely needed to comprehensively address the risks posed by AI manipulation tactics. The accord does, however, underscore growing recognition that artificial intelligence can threaten democracy if left ungoverned.