Meta's Fundamental AI Research (FAIR) lab continues to push the envelope in making autonomous embodied AI agents smarter, more socially aware, and more interactive. Its ambitious vision is to build collaborative robots and virtual assistants that can understand, navigate, and interact with humans in home environments, and even cooperate with us on day-to-day tasks.

To underscore its commitment to this vision, FAIR today announced three major advances:

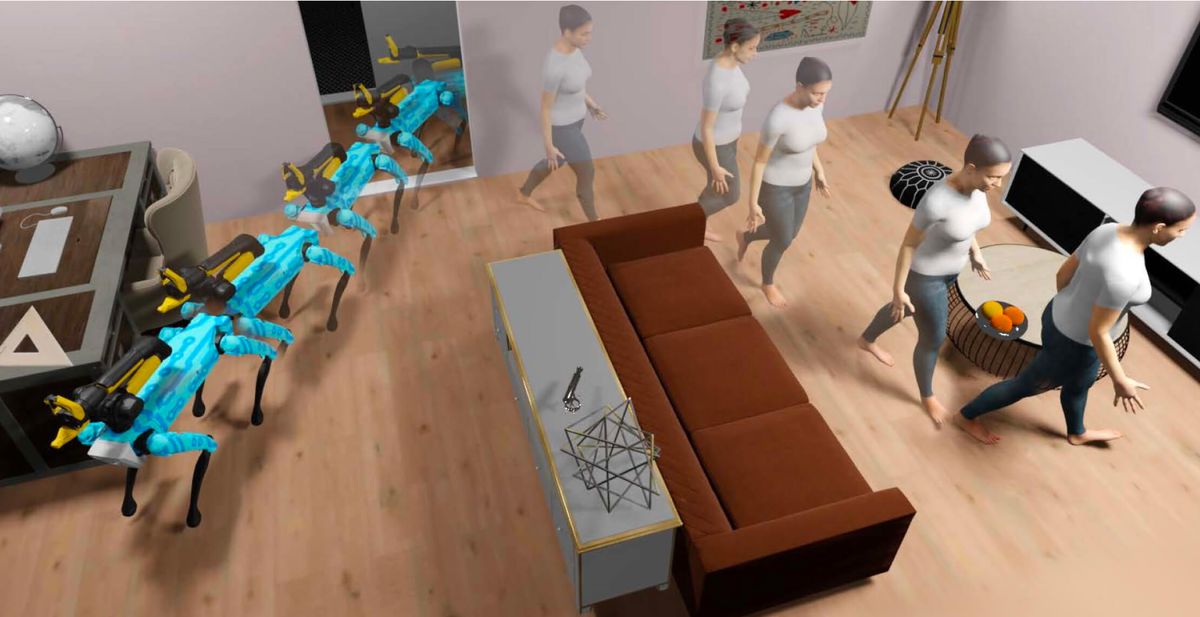

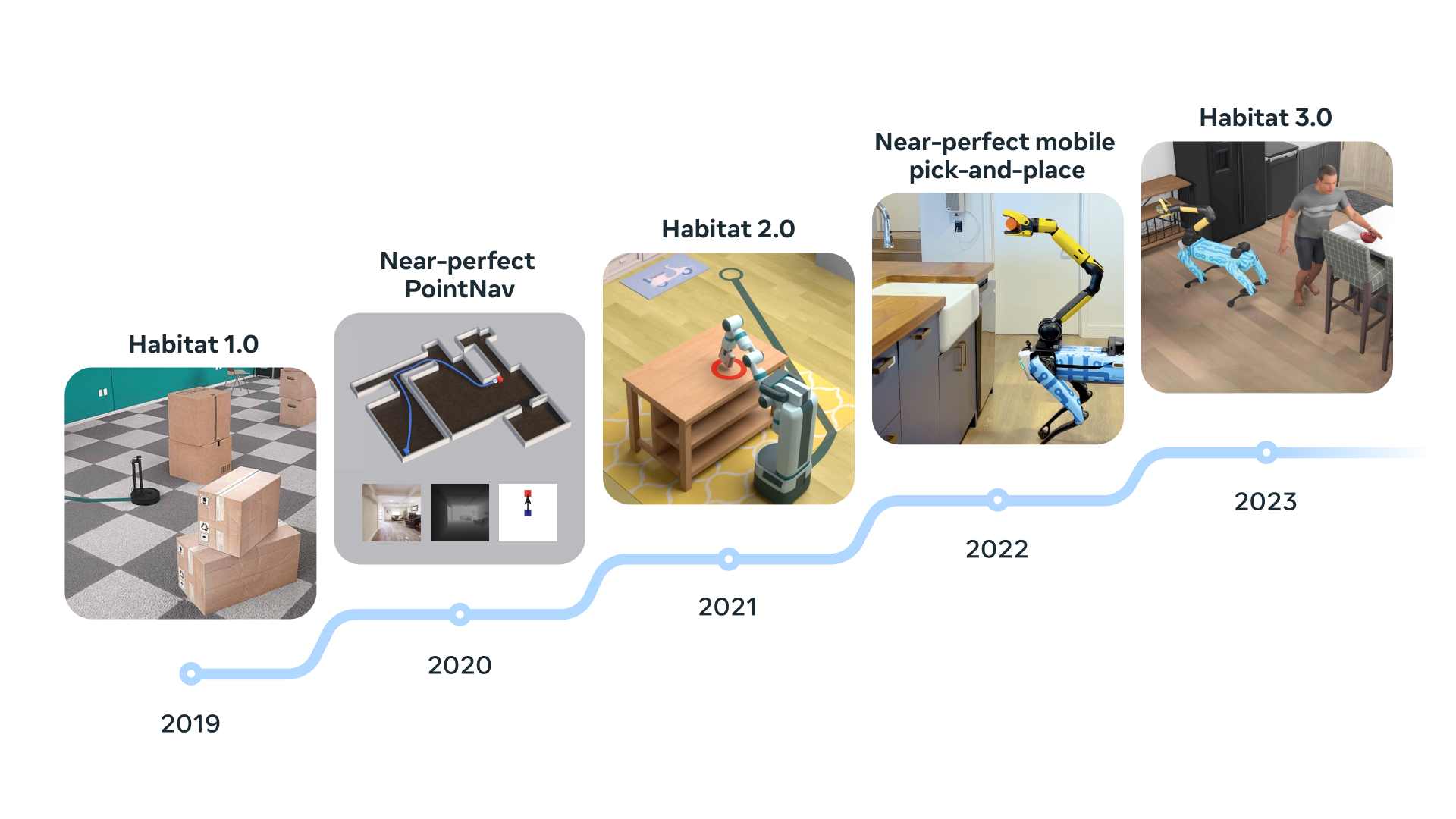

- Habitat 3.0: A simulator that supports both robots and humanoid avatars, enabling the study of human-robot collaboration in home environments. Within this platform, AI agents learn tasks such as tidying a house by interacting with simulated humans, and a human-in-the-loop evaluation framework lets real people test these agents.

- Habitat Synthetic Scenes Dataset (HSSD-200): A collection of 211 artist-authored 3D scenes containing over 18,000 objects across 466 semantic categories. It allows navigation agents to be trained more effectively and with fewer resources than previous, much larger datasets.

- HomeRobot: A combined hardware and software platform that lets robots perform a variety of tasks in both simulated and physical environments.

A core challenge in embodied AI is training and testing agents safely before real-world deployment. FAIR's Habitat simulator enables fast, low-risk development of social robots. Habitat 3.0 introduces full-body humanoid avatars and tasks that require human-robot teamwork, such as tidying a room. The AI agents learn efficient coordination strategies, like giving way in tight spaces. Habitat 3.0 also provides a framework for evaluating policies with real humans in the loop.

Although HSSD-200 contains fewer scenes than earlier datasets, its high-quality, realistic scenes better reflect complex real-world interiors. Despite its compact size, its realism and efficiency make it a stronger training resource than larger preceding datasets.

While platforms like Google Cloud and Microsoft Azure have transformed the machine learning landscape, robotics has lagged behind because of the complexity of its hardware. To drive collaborative robotics forward, FAIR built HomeRobot, an open source software stack for autonomous manipulation in both simulated and physical settings. It includes interfaces, baseline behaviors, and benchmarks centered on tasks like delivering requested objects. HomeRobot supports affordable platforms like Hello Robot's Stretch mobile manipulator as well as Boston Dynamics' Spot.

AI is fundamentally about building intelligent machines. Its full potential, however, emerges when these machines have a body that enables them to perceive, learn from, and act within their environment. This field of embodied AI is grounded in the embodiment hypothesis: the idea that genuine intelligence arises from an agent's active interaction with its surroundings.

The Habitat platforms champion this philosophy through a simulation-first approach. Rather than training static models on internet data, this approach develops embodied agents that act within an environment. Such agents can acquire skills like active perception, real-time planning, and grounded dialogue, which make them more adaptable to the situations they encounter.

FAIR's advancements mark substantial progress toward socially intelligent robots that can collaborate with humans. But much work remains to integrate Habitat 3.0's digital humans with HomeRobot's physical platforms for studying complex AI-human interactions.

Better algorithms, more data, and more testing across diverse simulated environments are still needed before full-scale deployment. Still, by combining cutting-edge simulation, datasets, and robotics platforms, Meta edges closer to embodied AI agents that can perceive, learn, and assist people in the real world.