Microsoft has introduced Phi-3 Mini, a 3.8-billion-parameter language model whose benchmark performance rivals much larger models such as Mixtral 8x7B and GPT-3.5, yet which is compact enough to run on a smartphone. Microsoft attributes this result entirely to its training data rather than to architectural changes.

The key to Phi-3 Mini's capabilities lies in its training dataset, a scaled-up version of the data recipe used for its predecessor, Phi-2. By heavily filtering web data and adding synthetic data generated by larger language models, the researchers produced a compact model that punches well above its weight.
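Microsoft has not published its filtering pipeline, so the sketch below only illustrates the general shape of the idea under stated assumptions: score each web document for educational value, keep the documents above a threshold, and append synthetic documents to the mix. The `educational_score` heuristic (which counts a few hypothetical "reasoning cue" words) and the 0.05 threshold are placeholders for the learned quality judgments a real pipeline would use.

```python
import re

# Hypothetical cue words; a real pipeline would use a learned quality classifier instead.
REASONING_CUES = {"because", "therefore", "proof", "example", "step", "explain"}


def educational_score(text: str) -> float:
    """Toy stand-in for an 'educational value' classifier: fraction of cue words."""
    words = re.findall(r"[a-z]+", text.lower())
    if not words:
        return 0.0
    return sum(w in REASONING_CUES for w in words) / len(words)


def build_training_mix(web_docs, synthetic_docs, threshold=0.05):
    """Keep web documents scoring at or above the threshold, then append synthetic data."""
    kept_web = [d for d in web_docs if educational_score(d) >= threshold]
    return kept_web + list(synthetic_docs)


web = [
    "Buy now! Best deals today!",
    "We add 2 and 3 because addition combines counts; therefore the sum is 5.",
]
synthetic = ["A synthetic textbook-style passage explaining fractions."]
print(build_training_mix(web, synthetic))
```

Here the advertising snippet is dropped and the explanatory one is kept; changing the scorer or the threshold changes how aggressively web data is filtered.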

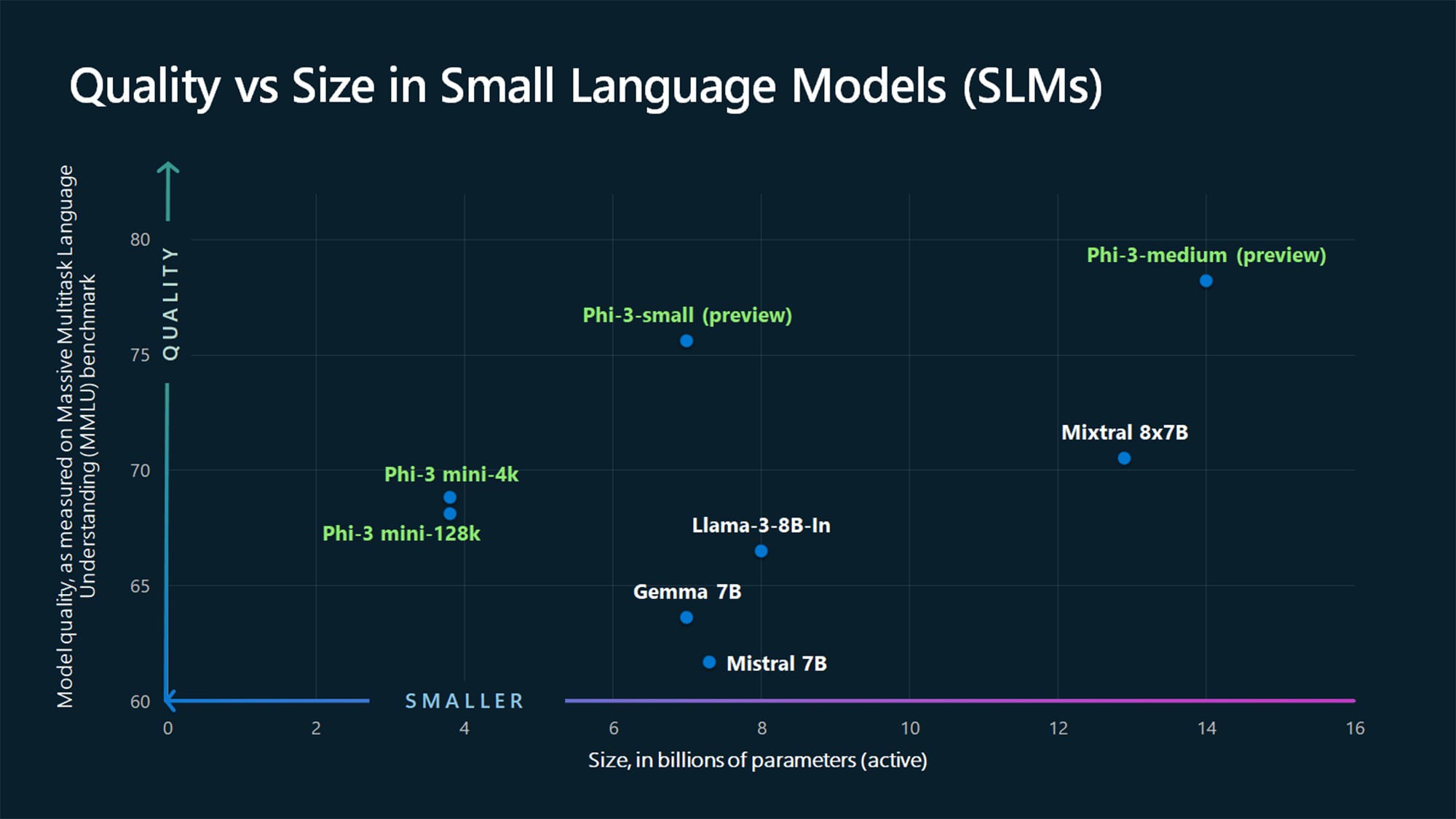

Phi-3 Mini scored 69% on the MMLU benchmark and 8.38 on MT-bench, putting it on par with significantly larger models on these reasoning and chat benchmarks. The model was also aligned in post-training for robustness, safety, and chat interactions.

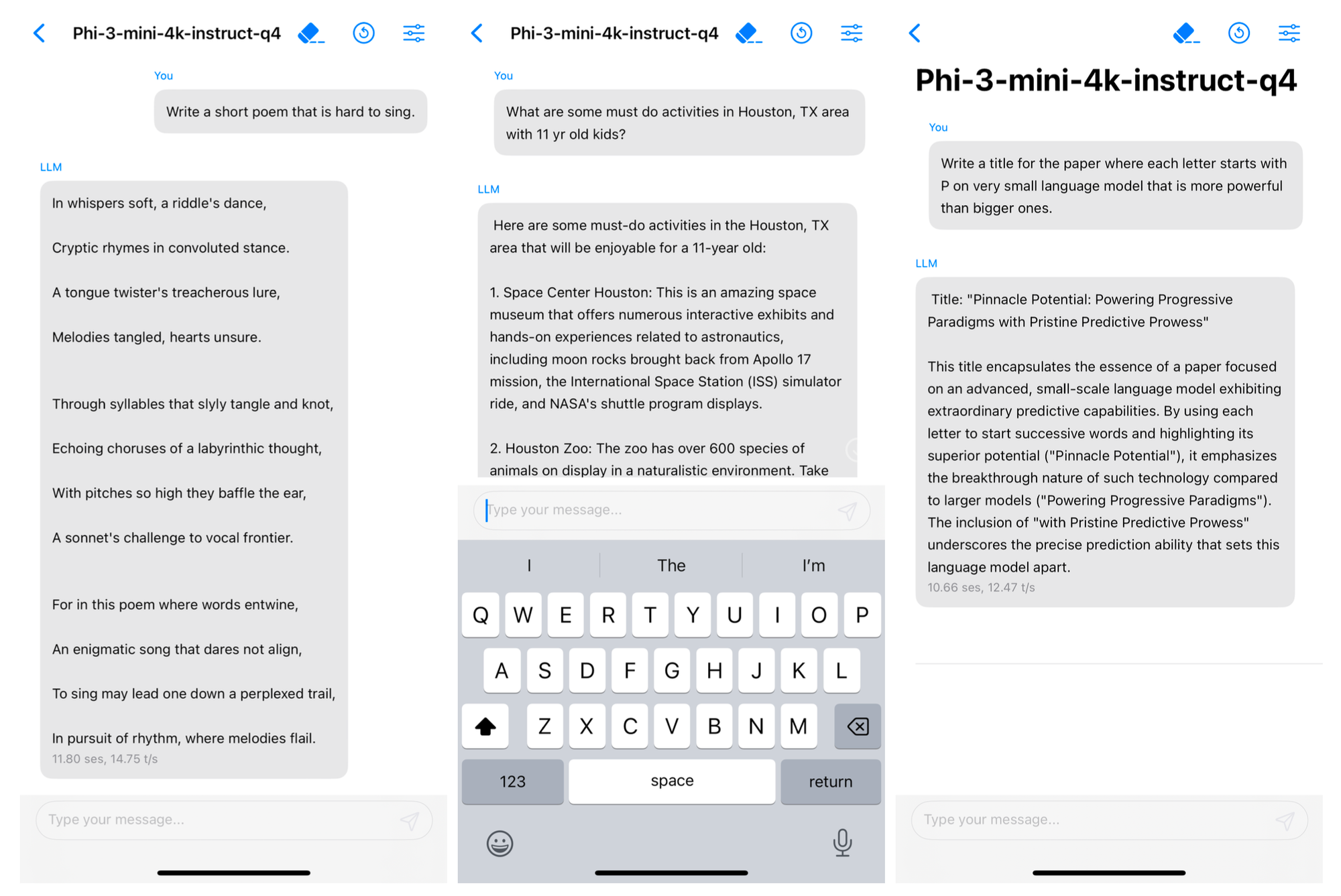

One of the most remarkable aspects of small language models like Phi-3 Mini is their ability to run locally on a smartphone. By quantizing the model to 4 bits, the researchers reduced its memory footprint to about 1.8 GB. This allowed them to deploy it on an iPhone 14 with an A16 Bionic chip, running natively on-device and fully offline. Despite the constraints of mobile hardware, Phi-3 Mini generated more than 12 tokens per second.
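The 1.8 GB figure is consistent with back-of-the-envelope arithmetic: at 4 bits (half a byte) per weight, 3.8 billion parameters occupy about 1.9 × 10⁹ bytes, or roughly 1.77 GiB. The sketch below assumes the footprint is dominated by the weights and ignores quantization metadata (such as per-block scales), the KV cache, and runtime overhead; it also converts the reported throughput into per-token latency.

```python
def weight_memory_bytes(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in bytes (ignores quantization scales and KV cache)."""
    return num_params * bits_per_weight / 8


def ms_per_token(tokens_per_second: float) -> float:
    """Convert generation throughput into average latency per token, in milliseconds."""
    return 1000 / tokens_per_second


PARAMS = 3.8e9  # Phi-3 Mini parameter count

for bits in (16, 8, 4):
    size = weight_memory_bytes(PARAMS, bits)
    print(f"{bits:>2}-bit: {size / 1e9:.2f} GB ({size / 2**30:.2f} GiB)")

print(f"12 tokens/s is about {ms_per_token(12):.1f} ms per token")
```

Halving the bits per weight halves the weight memory, which is why moving from 16-bit to 4-bit weights shrinks the model roughly fourfold.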

Microsoft plans to release two additional models in the Phi-3 series: Phi-3 Small (7B parameters) and Phi-3 Medium (14B parameters). Early results suggest that these models will further push the boundaries of what's possible with smaller language models, with Phi-3 Medium achieving 78% on MMLU and 8.9 on MT-bench.

The development of Phi-3 Mini is part of a broader trend in the AI industry toward smaller, more efficient models that can be deployed on a wider range of devices. Apple is rumored to be working on an on-device model for its next-generation iPhone, and Google has previously released Gemma 2B and Gemini Nano.

Phi-3 Mini's small size limits how much factual knowledge it can store, but this weakness can be offset by pairing it with a search engine. The researchers demonstrated this by connecting the model to search so it could retrieve relevant information on the fly.
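Search augmentation can be sketched as retrieval followed by prompt construction: fetch a few relevant snippets, then place them in the prompt so the model answers from them rather than from memory. In this minimal sketch, a toy keyword-overlap `search` function over an in-memory corpus stands in for a real search engine, and the prompt wording is an assumption; in practice the resulting text would also be wrapped in the model's chat template.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def search(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Toy retriever (hypothetical stand-in for a search engine): rank by token overlap."""
    q = Counter(tokenize(query))
    scored = []
    for doc in corpus:
        d = Counter(tokenize(doc))
        overlap = sum(min(q[t], d[t]) for t in q)
        if overlap > 0:
            scored.append((overlap, doc))
    # Stable sort keeps corpus order among documents with equal overlap.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_augmented_prompt(question: str, corpus: list[str], k: int = 2) -> str:
    """Insert the top-k retrieved snippets into the prompt ahead of the question."""
    snippets = search(question, corpus, k)
    context = "\n".join(f"[{i + 1}] {s}" for i, s in enumerate(snippets))
    return (
        "Answer the question using only the search results below.\n"
        f"Search results:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


corpus = [
    "Phi-3 Mini has 3.8 billion parameters.",
    "Ollama runs language models locally.",
    "Mixtral 8x7B is a sparse mixture-of-experts model.",
]
print(build_augmented_prompt("How many parameters does Phi-3 Mini have?", corpus))
```

The model never needs to have memorized the parameter count; it only needs to read it from the retrieved snippet, which is the property that makes a small model usable for knowledge-heavy questions.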

Challenges remain, including factual inaccuracies, bias, and safety concerns. Carefully curated training data, targeted post-training, and insights from red-teaming significantly mitigate these issues across all dimensions, although they are not fully solved.

Phi-3 Mini is already available on Azure, Hugging Face, and Ollama.
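For local experimentation, Ollama exposes an HTTP API on the machine where it runs. The sketch below assumes Ollama is running on its default port (11434) and that the model has been pulled under the `phi3` tag (for example with `ollama pull phi3`); it builds a non-streaming request to the `/api/generate` endpoint using only the Python standard library.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumes Ollama's default local endpoint


def build_request(prompt: str, model: str = "phi3") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally running Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "phi3") -> str:
    """Send the request and return the generated text from the JSON 'response' field."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

Calling `generate("Why is the sky blue?")` requires a running Ollama server; `build_request` can be inspected without one.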