Mistral AI, the Paris-based artificial intelligence startup, has launched two new language models designed to bring advanced AI capabilities to edge devices. Dubbed "les Ministraux," the two compact models, Ministral 3B and Ministral 8B, pack surprising power into small packages and could broaden what on-device AI applications can do.

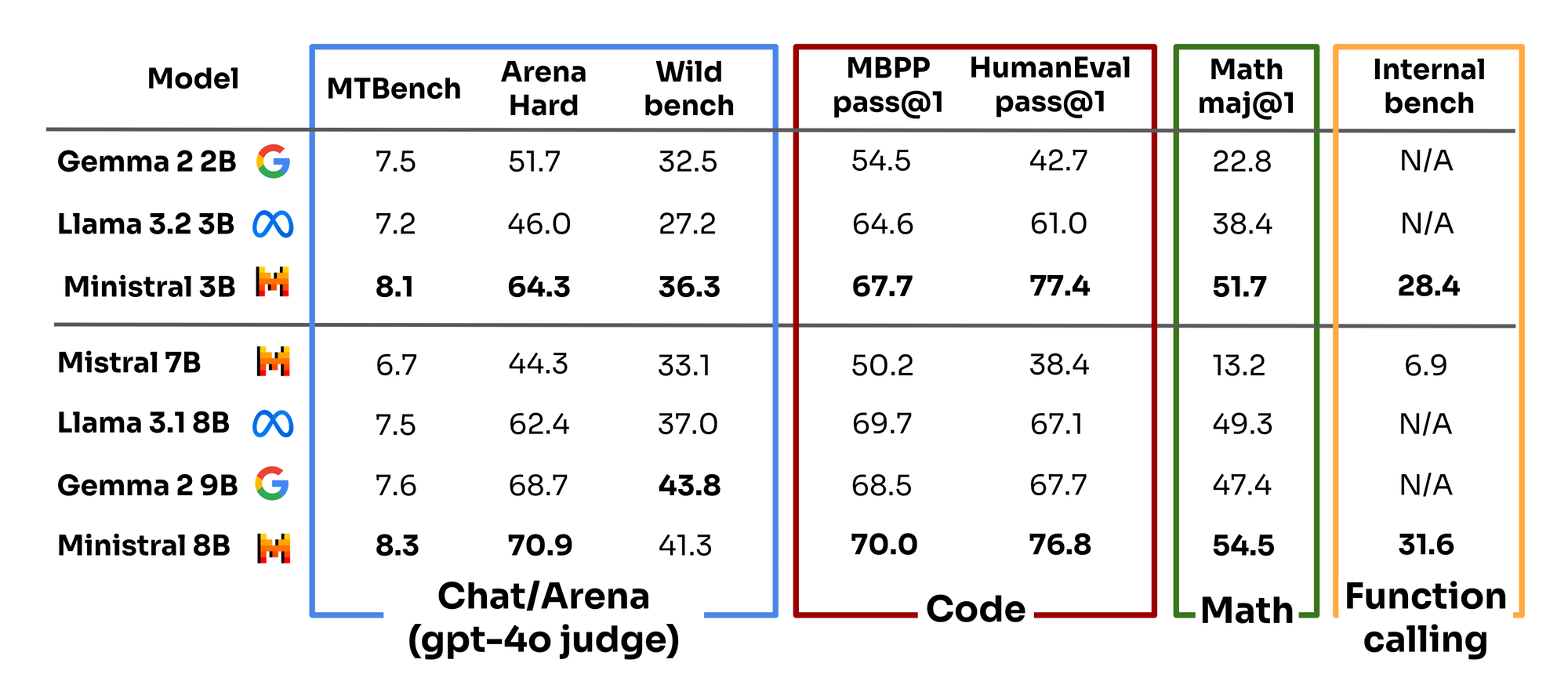

The smaller Ministral 3B, with just 3 billion parameters, outperforms Mistral's original 7-billion-parameter model, Mistral 7B, on most benchmarks. Its larger sibling, Ministral 8B, is said to rival models several times its size.

Both models support a 128K-token context window, allowing them to process about 50 pages of text at once. This long context enables more coherent and contextually aware responses in applications like on-device translation, offline smart assistants, and local analytics.

Mistral AI emphasized the growing demand for privacy-focused, local AI inference in critical applications. Les Ministraux aim to meet this need by offering compute-efficient, low-latency solutions for scenarios ranging from autonomous robotics to internet-less smart assistants.

The company also highlighted the models' potential as efficient intermediaries in multi-step AI workflows. When used alongside larger language models, les Ministraux can handle tasks like input parsing and API calling based on user intent, all while maintaining low latency and cost.
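As a rough illustration of that intermediary role, the sketch below routes a request either to a local tool or to a larger model. Everything in it is hypothetical: `classify_intent` is a keyword-matching stand-in for a call to a small on-device model, and the tools and `ask_large_model` are stubs rather than any real Mistral API.

```python
from typing import Callable, Dict


def classify_intent(text: str) -> str:
    """Hypothetical stand-in for a small model that maps a request to an intent label.

    A real system would run model inference here; keyword matching is used
    only so the sketch is runnable on its own.
    """
    lowered = text.lower()
    if "weather" in lowered:
        return "get_weather"
    if "timer" in lowered:
        return "set_timer"
    return "unknown"


def get_weather(text: str) -> str:
    # Hypothetical local tool; a real one would call a weather API.
    return "weather: (stubbed lookup)"


def set_timer(text: str) -> str:
    # Hypothetical local tool; a real one would schedule a timer.
    return "timer: (stubbed) started"


def ask_large_model(text: str) -> str:
    # Hypothetical stand-in for a slower, costlier call to a larger model.
    return "large model: (stubbed answer)"


# Intent labels the small model can act on directly.
TOOLS: Dict[str, Callable[[str], str]] = {
    "get_weather": get_weather,
    "set_timer": set_timer,
}


def handle(text: str) -> str:
    """Use a local tool when the intent is recognized, otherwise escalate."""
    tool = TOOLS.get(classify_intent(text))
    return tool(text) if tool is not None else ask_large_model(text)


if __name__ == "__main__":
    print(handle("What's the weather in Paris?"))
    print(handle("Write a sonnet about autumn."))
```

The design choice illustrated is that only requests the small model cannot map to a known tool reach the larger model, which is where the latency and cost savings would come from.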

Ministral 8B is available for download today, but only for research purposes. Developers and companies interested in self-deployment will need to contact Mistral for commercial licensing. Both models are accessible through Mistral's cloud platform, La Plateforme, priced at $0.04 per million tokens for Ministral 3B and $0.10 per million tokens for Ministral 8B.
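To put those prices in perspective, here is a minimal cost estimate using the per-million-token rates quoted above. The dictionary keys are illustrative labels rather than official API model identifiers, and the sketch assumes a single flat rate per token.

```python
# Per-million-token prices quoted in the article, in USD.
# The keys are illustrative labels, not official API model identifiers.
PRICE_PER_MILLION_TOKENS = {
    "ministral-3b": 0.04,
    "ministral-8b": 0.10,
}


def estimate_cost(model: str, tokens: int) -> float:
    """Return the estimated USD cost of processing `tokens` tokens."""
    if tokens < 0:
        raise ValueError("tokens must be non-negative")
    return PRICE_PER_MILLION_TOKENS[model] * tokens / 1_000_000


if __name__ == "__main__":
    # Cost of filling the full 128K-token context window once.
    for model in PRICE_PER_MILLION_TOKENS:
        cost = estimate_cost(model, 128_000)
        print(f"{model}: ${cost:.5f} per 128K tokens")
```

At these rates, a single request that fills the whole 128K-token context costs about half a cent on Ministral 3B and a little over one cent on Ministral 8B.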

This release aligns with an industry trend towards smaller, more efficient AI models. Tech giants like Google, Microsoft, and Meta have all recently introduced compact models optimized for edge devices. Mistral claims its new offerings outperform comparable models from these companies on several AI benchmarks.

As Mistral AI continues to expand its product portfolio, les Ministraux represent a significant step in bringing powerful AI capabilities to a wider range of devices and applications. The coming months will likely reveal how these compact models stack up in real-world use cases and whether they can deliver on the promise of efficient, privacy-preserving AI at the edge.