French AI company Mistral AI is once again shaking up the AI landscape with its latest release, Mistral Small 3.1. This 24-billion-parameter model is more than another open-source release: according to Mistral’s benchmarks, it outperforms comparable small models from Google and OpenAI while running efficiently on consumer hardware. Its strong benchmark results, expanded 128K-token context window, and multimodal capabilities make it one of the most competitive small AI models available today.

Key Points

- Mistral Small 3.1 beats Google’s Gemma 3 and OpenAI’s GPT-4o Mini in text, vision, and multilingual benchmarks.

- Runs locally on a single RTX 4090 or a Mac with 32GB RAM when quantized.

- Handles both text and image inputs with up to 128K token context length.

- Released under an Apache 2.0 license, making it freely available for developers.

Mistral Small 3.1 builds on Mistral Small 3, which was released at the end of January. The update adds image understanding, extends the context window to 128K tokens, and improves text performance, while keeping top-tier efficiency in its weight class.

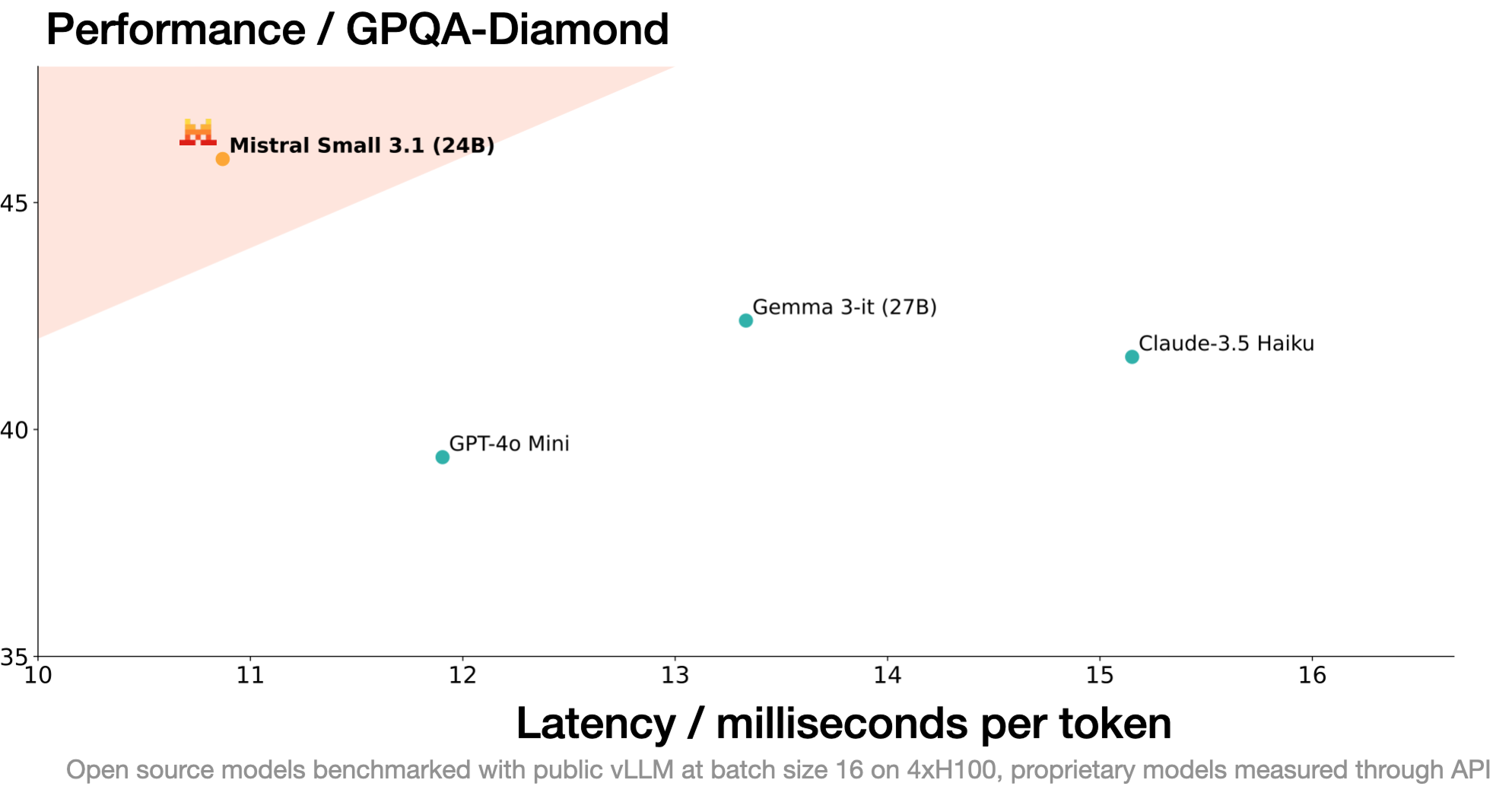

Mistral Small 3.1 positions itself as a powerful yet lightweight alternative to industry-leading models. According to Mistral’s published benchmark evaluations, it surpasses Google’s Gemma 3 and OpenAI’s GPT-4o Mini in key areas, including text generation, vision tasks, and multilingual comprehension. Its scores on MMLU (broad general knowledge), MATH (mathematical problem solving), and GPQA (graduate-level science questions) place it ahead of competing models, including some with larger parameter counts.

Unlike many state-of-the-art AI models that require expensive cloud infrastructure, Mistral Small 3.1 can run efficiently on a single RTX 4090 GPU or a Mac with 32GB of RAM when quantized. This local deployment capability lowers costs for startups and enterprises that want to integrate AI without relying on cloud services.
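Why quantization matters here comes down to simple arithmetic on weight storage. The sketch below estimates how much memory the weights of a 24-billion-parameter model occupy at a given precision. The bit widths are illustrative rather than the specific formats any particular runtime uses, and the estimate deliberately ignores the KV cache, activations, and framework overhead, so real requirements are higher. The 24 GB budget is the RTX 4090's VRAM.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in decimal GB (1e9 bytes).

    Ignores KV cache, activations, and framework overhead.
    """
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


def fits_in_memory(num_params: float, bits_per_param: int, budget_gb: float) -> bool:
    """True if the weights alone fit strictly within the given memory budget."""
    return weight_memory_gb(num_params, bits_per_param) < budget_gb


if __name__ == "__main__":
    PARAMS = 24e9          # 24 billion parameters, per the article
    RTX_4090_VRAM_GB = 24  # the RTX 4090 ships with 24 GB of VRAM

    for bits in (16, 8, 4):  # illustrative precisions: 16-bit, 8-bit, 4-bit
        gb = weight_memory_gb(PARAMS, bits)
        fits = fits_in_memory(PARAMS, bits, RTX_4090_VRAM_GB)
        print(f"{bits:>2}-bit weights: {gb:5.1f} GB, fits in 24 GB: {fits}")
```

Under these assumptions, 16-bit weights need about 48 GB, twice the card's memory; 8-bit weights need about 24 GB, which would consume essentially the whole card before any KV cache is allocated; 4-bit weights need about 12 GB. That arithmetic is consistent with the caveat that local deployment depends on quantization.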

The model’s expanded 128K token context window allows it to handle long-form reasoning and document analysis more effectively. It also features improved vision understanding, making it useful for applications such as document verification, medical diagnostics, and object recognition.
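A long context window carries its own memory cost: during generation, a transformer caches attention keys and values for every token in the prompt. The sketch below estimates that cache for a fully used 128K-token window, taking "128K" to mean 131,072 tokens. The architecture values (40 layers, 8 key-value heads under grouped-query attention, head dimension 128, 16-bit cache entries) are assumptions believed to match Mistral Small's published configuration; treat them as hypothetical and verify them against the model's `config.json` before relying on the result.

```python
# Assumed architecture values (hypothetical for this sketch; verify against
# the model's config.json before relying on them).
NUM_LAYERS = 40
NUM_KV_HEADS = 8      # grouped-query attention: fewer KV heads than query heads
HEAD_DIM = 128
BYTES_PER_VALUE = 2   # 16-bit (bf16/fp16) cache entries


def kv_cache_bytes(seq_len: int,
                   num_layers: int = NUM_LAYERS,
                   num_kv_heads: int = NUM_KV_HEADS,
                   head_dim: int = HEAD_DIM,
                   bytes_per_value: int = BYTES_PER_VALUE) -> int:
    """Bytes needed to cache keys and values for seq_len tokens (batch size 1)."""
    # Factor of 2: one tensor for keys and one for values, in every layer.
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return per_token * seq_len


if __name__ == "__main__":
    context = 128 * 1024  # "128K" interpreted as 131,072 tokens
    total = kv_cache_bytes(context)
    print(f"KV cache per token: {kv_cache_bytes(1) / 1024:.0f} KiB")
    print(f"KV cache at {context:,} tokens: {total / 2**30:.1f} GiB")
```

With these assumed values, each token costs 160 KiB of cache, so a single prompt that fills the whole window needs about 20 GiB on top of the model weights. Short prompts are cheap, but using the full window is far more demanding on consumer hardware.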

Mistral AI has committed to openness, releasing the model under an Apache 2.0 license, which allows for free use and modification. The company also provides both base and instruct checkpoints, enabling developers to fine-tune the model for specific domains, including legal, healthcare, and customer service applications.

Mistral’s aggressive push in open-source AI positions it as a growing competitor to OpenAI, Google, and Anthropic. By delivering models that match or exceed proprietary offerings while maintaining accessibility, the company is challenging the closed-source dominance of U.S. AI firms. The model is already available on Hugging Face and Google Cloud’s Vertex AI, with upcoming integrations into NVIDIA NIM and Microsoft Azure AI Foundry.

As the AI landscape matures, Mistral Small 3.1 serves as another reminder that highly capable open-source AI is advancing rapidly and reshaping the balance of power in the industry.