NVIDIA Research has unveiled SteerLM, a new technique that allows users to customize the responses of large language models (LLMs) like Llama 2 during inference. SteerLM provides more control over model outputs by letting users define key attributes to steer the model's behavior.

While foundation LLMs can generate human-like text, they often fail to provide helpful, nuanced responses tailored to users' needs. Existing methods for improving LLMs, such as supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF), have limitations: SFT tends to produce short, mechanical responses, while RLHF is complex to train and offers users little control over the model's outputs.

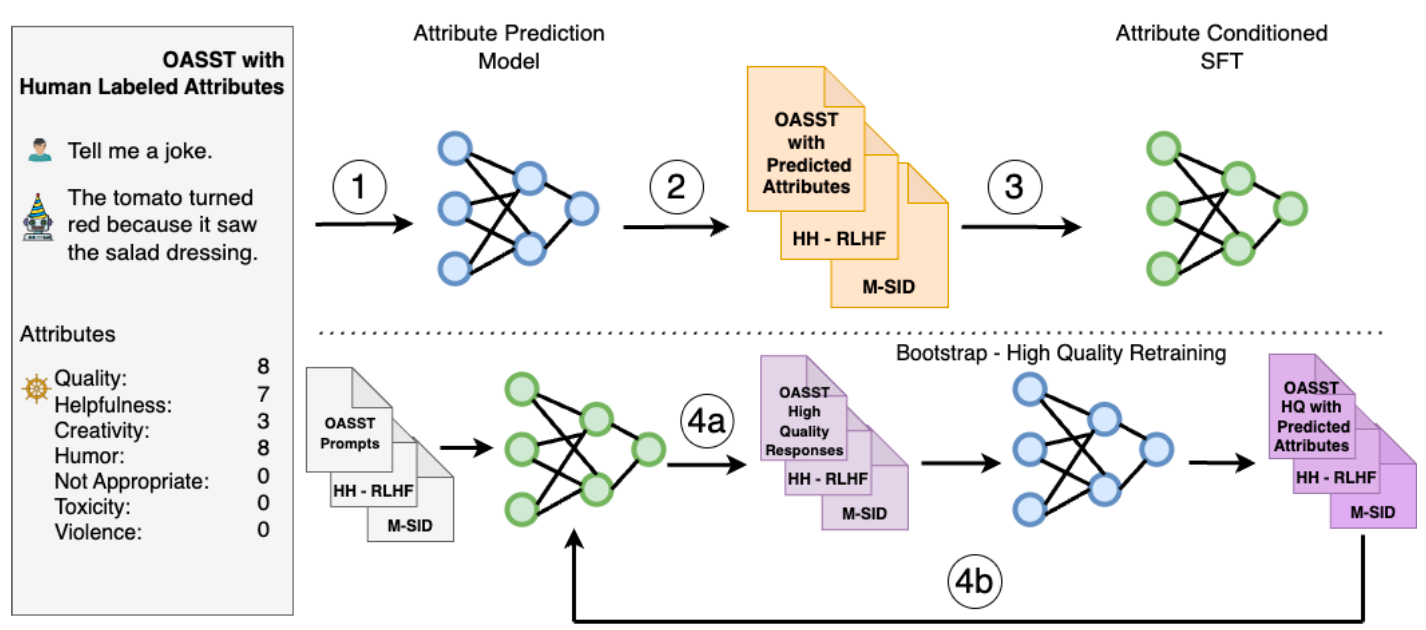

SteerLM aims to overcome these challenges with a four-step supervised fine-tuning method that both simplifies LLM customization and lets users dynamically steer model outputs based on specified attributes:

- Training an Attribute Prediction Model: This model is trained on human-annotated datasets to evaluate qualities such as helpfulness, humor, and creativity.

- Annotation of Diverse Datasets: The attribute prediction model scores the responses in additional datasets, expanding the diversity of data available for training the LLM.

- Attribute-Conditioned SFT: The LLM is trained to generate responses conditioned on specified attribute values, such as perceived quality.

- Bootstrap Training Through Model Sampling: The model generates diverse responses conditioned on maximum quality, and these samples are used for further fine-tuning to improve alignment.
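The data flow of steps 2 through 4 can be sketched in plain Python. This is a minimal illustration, not NVIDIA's NeMo implementation: the trained attribute predictor (step 1) and the LLM sampler are replaced by hypothetical stand-in functions (`toy_predict_attributes`, `toy_generate`), and the `key:value` attribute string, the `<attributes>` tag, and the 0 to 4 score scale are assumptions chosen for readability. Re-scoring the bootstrapped samples with the predictor before reuse is also our assumption about how step 4 feeds back into training.

```python
from typing import Callable, Dict, List, Tuple

# Attribute name -> integer score (assumed 0-4 scale, for illustration only).
Attributes = Dict[str, int]
Example = Tuple[str, str]  # (model input, training target)


def format_attributes(attrs: Attributes) -> str:
    """Serialize attributes into a deterministic, hypothetical 'key:value' string."""
    return ",".join(f"{name}:{score}" for name, score in sorted(attrs.items()))


def conditioned_example(prompt: str, response: str, attrs: Attributes) -> Example:
    """Step 3: place the attribute string in the model input so the model
    learns to associate each response with its attribute values."""
    model_input = f"{prompt}\n<attributes>{format_attributes(attrs)}</attributes>\n"
    return model_input, response


def annotate(pairs: List[Tuple[str, str]],
             predict: Callable[[str, str], Attributes]) -> List[Example]:
    """Step 2: score (prompt, response) pairs with the attribute predictor and
    turn them into attribute-conditioned training examples."""
    return [conditioned_example(p, r, predict(p, r)) for p, r in pairs]


def bootstrap(prompts: List[str],
              generate: Callable[[str], List[str]],
              predict: Callable[[str, str], Attributes],
              max_quality: Attributes) -> List[Example]:
    """Step 4: sample several responses conditioned on maximum quality,
    re-score each with the predictor (assumption), and return new examples."""
    examples: List[Example] = []
    for prompt in prompts:
        steered_input, _ = conditioned_example(prompt, "", max_quality)
        for response in generate(steered_input):
            scored = predict(prompt, response)
            examples.append(conditioned_example(prompt, response, scored))
    return examples


# --- Hypothetical stand-ins for the trained models ---
def toy_predict_attributes(prompt: str, response: str) -> Attributes:
    # Toy rule: longer answers count as more helpful. A real predictor is a trained model.
    return {"helpfulness": min(4, len(response.split()) // 3), "humor": 0}


def toy_generate(model_input: str) -> List[str]:
    # A real sampler would draw diverse completions from the attribute-conditioned model.
    return ["Paris.", "The capital of France is Paris, on the Seine."]


if __name__ == "__main__":
    data = annotate([("Capital of France?", "Paris.")], toy_predict_attributes)
    print(data[0][0])
    boot = bootstrap(["Capital of France?"], toy_generate, toy_predict_attributes,
                     {"helpfulness": 4, "humor": 0})
    for model_input, target in boot:
        print(repr(target), "->", model_input.splitlines()[-1])
```

Sorting the attribute keys keeps the serialization deterministic, which matters because the model should see the same attribute format during training and at inference time.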

What sets SteerLM apart is that users can adjust attributes at inference time, tailoring responses to specific needs in real time rather than being limited to behaviors fixed during training. This flexibility enables customizing models for diverse use cases such as gaming, education, and accessibility, so a company could serve multiple teams with personalized capabilities from a single model.
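A minimal sketch of inference-time steering, assuming a model already trained with attribute conditioning: the caller picks attribute values per request, and the application validates them and writes them into the prompt. The attribute names, the 0 to 4 score range, the `Attributes:` line, the `User:`/`Assistant:` template, and the team profiles are all hypothetical placeholders; a released SteerLM checkpoint defines its own prompt format, which should be taken from its model card.

```python
from typing import Dict, Optional

# Hypothetical attribute schema: names and range are illustrative assumptions,
# not the exact schema of any released SteerLM model.
DEFAULTS: Dict[str, int] = {
    "helpfulness": 4, "correctness": 4, "humor": 0, "creativity": 0, "toxicity": 0,
}
MIN_SCORE, MAX_SCORE = 0, 4


def steer(overrides: Optional[Dict[str, int]] = None) -> Dict[str, int]:
    """Merge per-request overrides into the defaults, rejecting unknown
    attribute names and out-of-range scores."""
    attrs = dict(DEFAULTS)
    for name, score in (overrides or {}).items():
        if name not in DEFAULTS:
            raise KeyError(f"unknown attribute: {name}")
        if not MIN_SCORE <= score <= MAX_SCORE:
            raise ValueError(f"{name}={score} outside [{MIN_SCORE}, {MAX_SCORE}]")
        attrs[name] = score
    return attrs


def build_prompt(user_message: str, overrides: Optional[Dict[str, int]] = None) -> str:
    """Render a prompt whose attribute line tells the model how to respond.
    The template is a hypothetical stand-in for a model's real chat format."""
    attrs = steer(overrides)
    attr_line = ",".join(f"{k}:{v}" for k, v in attrs.items())
    return f"User: {user_message}\nAttributes: {attr_line}\nAssistant:"


# One model, different teams: only the attribute values change per request.
PROFILES: Dict[str, Dict[str, int]] = {
    "education": {"correctness": 4, "creativity": 1},
    "gaming_npc": {"humor": 4, "creativity": 4},
}

if __name__ == "__main__":
    for team, profile in PROFILES.items():
        print(f"--- {team} ---")
        print(build_prompt("Describe a dragon.", profile))
```

Because only the attribute values differ between the `education` and `gaming_npc` profiles, both teams can share one deployed model. Validating names and ranges up front avoids sending the model attribute combinations outside the scale it was trained on.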

Compared to complex RLHF training, SteerLM's straightforward fine-tuning makes state-of-the-art customization simpler. Because it uses standard techniques and requires minimal changes to infrastructure and code, SteerLM delivers strong results with less overhead. In NVIDIA's experiments, SteerLM 43B outperformed existing RLHF models such as ChatGPT-3.5 and LLaMA 30B RLHF on the Vicuna benchmark.
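To make the contrast concrete, the two training objectives can be written in standard notation (our own, not taken from the article). Attribute-conditioned SFT is ordinary maximum-likelihood training on a response $y$ given a prompt $x$ and attribute values $a$ drawn from a dataset $\mathcal{D}$. A typical RLHF pipeline instead maximizes a separately trained reward model $r_\phi$, with a KL penalty of strength $\beta$ that keeps the policy $\pi_\theta$ close to a reference policy $\pi_{\text{ref}}$, usually optimized with PPO:

$$
\mathcal{L}_{\text{SteerLM}}(\theta) = -\,\mathbb{E}_{(x,\,a,\,y)\sim\mathcal{D}} \sum_{t=1}^{|y|} \log p_\theta\!\left(y_t \mid x,\, a,\, y_{<t}\right)
$$

$$
\max_{\theta}\; \mathbb{E}_{x\sim\mathcal{D},\; y\sim\pi_\theta(\cdot\mid x)}\!\left[r_\phi(x, y)\right] \;-\; \beta\,\mathrm{KL}\!\left(\pi_\theta(\cdot\mid x)\,\big\|\,\pi_{\text{ref}}(\cdot\mid x)\right)
$$

The first objective needs only the usual SFT machinery, with $a$ simply becoming part of the input. The second requires training and querying a reward model and running reinforcement-learning updates, which is where most of RLHF's extra complexity comes from.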

SteerLM's user-steerable responses promise more customizable AI systems tailored to individual needs. Rather than rebuilding models for each application, developers can train a single model on multiple attributes and adjust those attributes dynamically during deployment. This makes advanced customization more accessible and could unlock a new generation of bespoke AI.

NVIDIA is releasing SteerLM as open source software in its NVIDIA NeMo framework, and a customized 13B Llama 2 model is available on Hugging Face for trying out the technique. NVIDIA has also published detailed instructions on how to train a SteerLM model.

As LLMs continue advancing, methods like SteerLM that simplify customizing models for real-world needs will be crucial for delivering helpful AI that aligns with user values.