During his GTC 2025 keynote, NVIDIA CEO Jensen Huang introduced DGX Spark and DGX Station, two new desktop AI workstations built on the Grace Blackwell architecture. Aimed at developers, researchers, and AI startups, the systems bring data-center-class AI performance to a desktop form factor, letting users fine-tune and run AI models locally instead of depending on the cloud.

Key Points:

- DGX Spark, which NVIDIA bills as the world's smallest AI supercomputer, uses the GB10 Grace Blackwell Superchip and delivers up to 1,000 TOPS of AI compute.

- DGX Station is a more powerful desktop system built on the GB300 Grace Blackwell Ultra Superchip, with 784GB of unified memory.

- Both systems allow AI models to run locally and integrate seamlessly with NVIDIA DGX Cloud and other cloud platforms.

- DGX Spark systems will be available from ASUS, Dell, HP, and Lenovo; DGX Station from ASUS, BOXX, Dell, HP, Lambda, and Supermicro.

DGX Spark, previously known as Project Digits, is the smallest AI supercomputer NVIDIA has ever built. Its GB10 Grace Blackwell Superchip delivers up to 1,000 trillion operations per second of AI compute. With 128GB of unified system memory, DGX Spark can run inference on models of up to 200 billion parameters and fine-tune models of up to 70 billion parameters. Despite its compact form factor, it draws just 170W, making it a power-efficient machine for AI development.

For those needing even more horsepower, DGX Station is NVIDIA's high-end desktop AI system. It runs on the GB300 Grace Blackwell Ultra Superchip with a massive 784GB of unified memory. That headroom lets users work with models well beyond 200 billion parameters, making it suitable for heavy AI research workloads. DGX Station also includes NVIDIA's ConnectX-8 SuperNIC, which provides up to 800Gb/s of networking for linking multiple systems into multi-node setups.
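The parameter counts quoted for both machines can be sanity-checked with simple arithmetic: the memory needed to hold a model's weights is roughly the parameter count times the bits per parameter, divided by 8. The sketch below assumes 4-bit (FP4) weights for the headline figures, counts weights only (KV cache, activations, and runtime overhead are ignored), and uses decimal gigabytes. The helper names `weight_memory_gb` and `max_params` are hypothetical, written for illustration only.

```python
# Back-of-envelope sketch: does a model's weight tensor fit in unified memory?
# Assumptions (not from NVIDIA): weights dominate memory use; KV cache,
# activations, and OS/runtime overhead are ignored; GB means 10**9 bytes.

GB = 10**9  # decimal gigabytes, as used in product specs

# Bits stored per parameter at common inference precisions.
BITS_PER_PARAM = {"fp16": 16, "fp8": 8, "fp4": 4}


def weight_memory_gb(params: float, precision: str) -> float:
    """Memory (GB) needed to hold `params` weights at the given precision."""
    bits = BITS_PER_PARAM[precision]
    return params * bits / 8 / GB


def max_params(memory_gb: float, precision: str) -> float:
    """Largest parameter count whose weights alone fit in `memory_gb`."""
    bits = BITS_PER_PARAM[precision]
    return memory_gb * GB * 8 / bits


if __name__ == "__main__":
    for name, mem in [("DGX Spark", 128), ("DGX Station", 784)]:
        for prec in ("fp16", "fp8", "fp4"):
            billions = max_params(mem, prec) / 1e9
            print(f"{name} ({mem} GB) @ {prec}: ~{billions:.0f}B params, weights only")
    # A 200B-parameter model at 4 bits per weight needs about 100 GB.
    print(f"200B params @ fp4: {weight_memory_gb(200e9, 'fp4'):.0f} GB")
```

Under these assumptions, a 200B-parameter model at FP4 needs about 100GB for its weights, which leaves roughly 28GB of DGX Spark's 128GB for everything else. At FP16, by contrast, a 70B model's weights alone (140GB) would exceed 128GB, which suggests the 70B fine-tuning figure assumes a quantized base model (for example a QLoRA-style setup); the announcement does not specify the method.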

Jensen Huang described these systems as a new class of AI-native computers:

“AI has transformed every layer of the computing stack. It stands to reason a new class of computers would emerge—designed for AI-native developers and to run AI-native applications.”

For developers working with edge applications, robotics, or computer vision solutions, these systems enable local development with NVIDIA frameworks like Isaac, Metropolis, and Holoscan before deploying to production environments.

This release continues NVIDIA's strategy of vertical integration: rather than selling only chips, NVIDIA offers complete systems with its full AI software stack preinstalled. That software support is intended to ensure compatibility with AI models from DeepSeek, Meta, Google, and others. Users can develop and test models locally, then scale up by deploying to DGX Cloud or other accelerated data center infrastructure with minimal code changes.

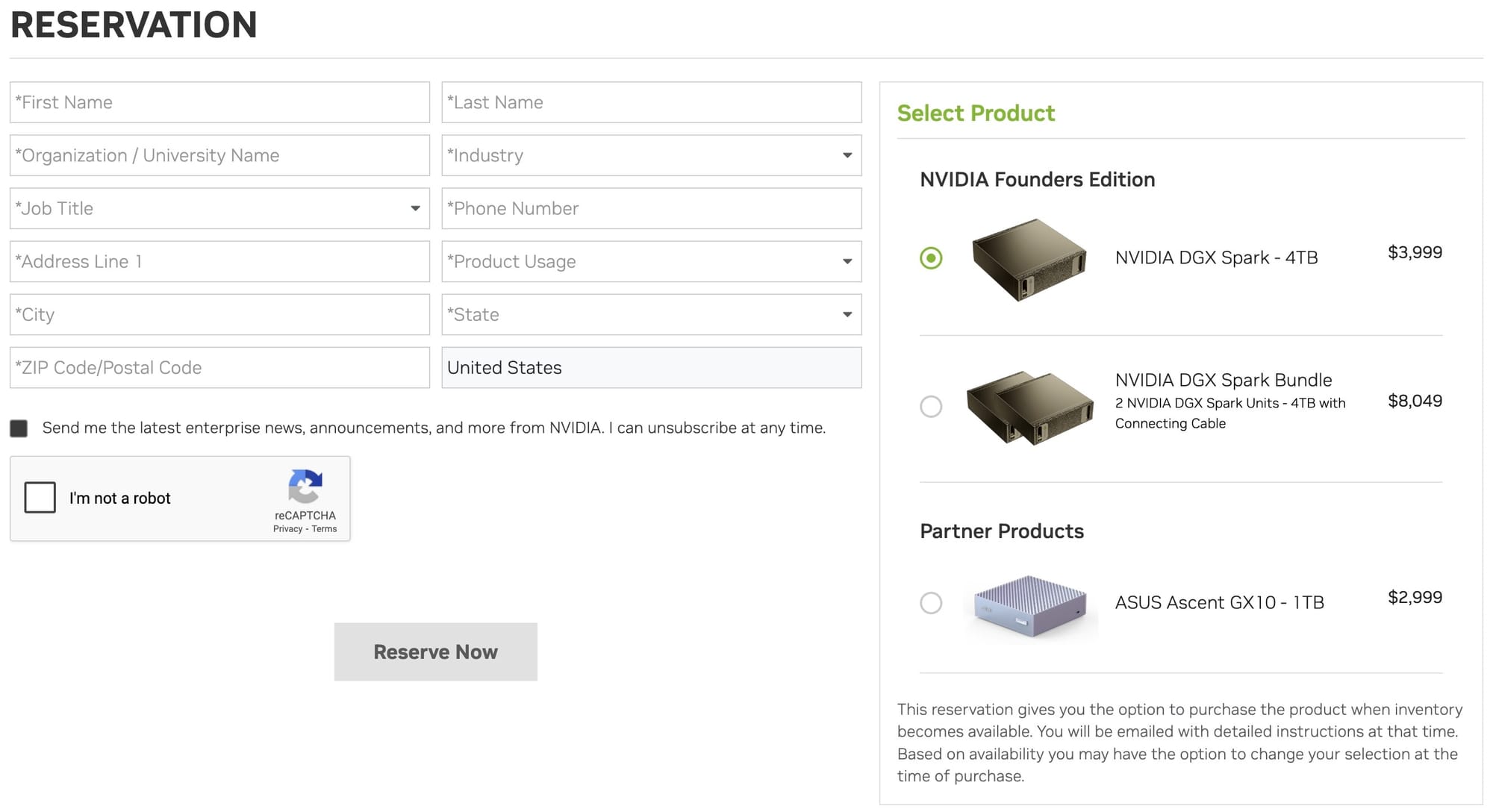

Reservations for DGX Spark open today at $3,999, with a two-unit bundle priced at $8,049. DGX Station will be available later this year from ASUS, BOXX, Dell, HP, Lambda, and Supermicro. Meanwhile, ASUS has already announced its own DGX Spark-class system, the Ascent GX10, which pairs the same GB10 architecture with 1TB of storage for $2,999. That suggests buyers will see a range of price points and configurations from different manufacturers in the coming months.

With the release of these systems, NVIDIA is betting that AI developers and enterprises will increasingly want on-prem AI compute rather than relying solely on cloud GPUs. Given the current demand for AI compute power, DGX Spark and DGX Station could become must-have tools for AI research labs, startups, and anyone building the next wave of generative AI applications.